Focusing on sound production rather than word choice makes for a flexible system.

The participant’s implant gets hooked up for testing.

Credit: UC Regents

Stephen Hawking, a British physicist and probably the most famous man suffering from amyotrophic lateral sclerosis (ALS), communicated with the world using a sensor installed in his glasses. That sensor used tiny movements of a single muscle in his cheek to select characters on a screen. Once he typed a full sentence at a rate of roughly one word per minute, the text was synthesized into speech by a DECtalk TC01 synthesizer, which gave him his iconic, robotic voice.

A lot has changed since Hawking died in 2018. Recent brain-computer-interface (BCI) devices have made it possible to translate neural activity directly into text and even speech. These systems, however, had significant latency, often limited the user to a predefined vocabulary, and did not handle nuances of spoken language like pitch or prosody. Now, a team of scientists at the University of California, Davis has built a neural prosthesis that can instantly translate brain signals into sounds: phonemes and words. It may be the first real step we have taken toward a fully digital vocal tract.

Text messaging

“Our main goal is creating a flexible speech neuroprosthesis that enables a patient with paralysis to speak as fluently as possible, managing their own cadence, and be more expressive by letting them modulate their intonation,” says Maitreyee Wairagkar, a neuroprosthetics researcher at UC Davis who led the study. Developing a prosthesis that ticked all these boxes was an enormous challenge because it meant Wairagkar’s team had to solve nearly all the problems BCI-based communication solutions have faced in the past. And they had quite a lot of problems.

The first issue was moving beyond text. Most successful neural prostheses developed so far have translated brain signals into text: the words a patient with an implanted prosthesis wanted to say simply appeared on a screen. Francis R. Willett led a team at Stanford University that achieved brain-to-text translation with around a 25 percent error rate. “When a woman with ALS was trying to speak, they could decode the words. Three out of four words were correct. That was super exciting but not enough for daily communication,” says Sergey Stavisky, a neuroscientist at UC Davis and a senior author of the study.

Delays and dictionaries

One year after the Stanford work, in 2024, Stavisky’s team published its own research on a brain-to-text system that bumped the accuracy to 97.5 percent. “Almost every word was correct, but communicating over text can be limiting, right?” Stavisky said. “Sometimes you want to use your voice. It allows you to make interjections, it makes it less likely other people interrupt you, you can sing, you can use words that aren’t in the dictionary.” But the most common approach to generating speech relied on synthesizing it from text, which led straight into another problem with BCI systems: very high latency.

In nearly all BCI speech aids, sentences appeared on a screen after a significant delay, long after the patient finished stringing the words together in their mind. The speech synthesis part usually happened after the text was ready, which caused even more delay. Brain-to-text solutions also suffered from a limited vocabulary. The latest system of this type supported a dictionary of roughly 1,300 words. When you tried to speak a different language, use more elaborate vocabulary, or even say the unusual name of a café just around the corner, the systems failed.

Wairagkar designed her prosthesis to translate brain signals into sounds, not words, and to do it in real time.

Extracting sound

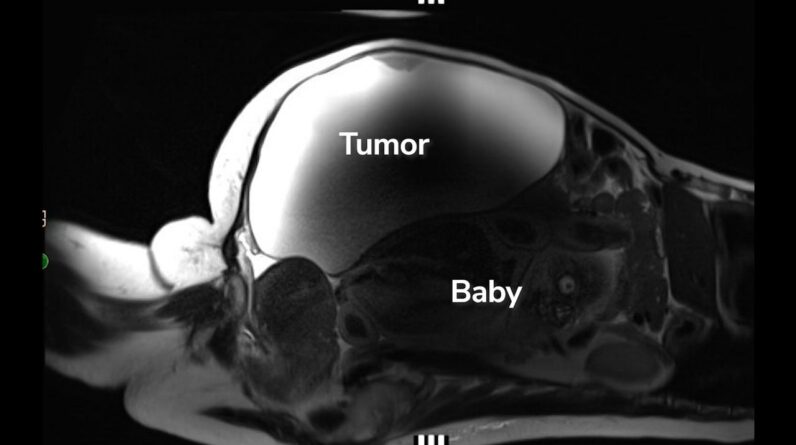

The patient who agreed to participate in Wairagkar’s study was codenamed T15 and was a 46-year-old man suffering from ALS. “He is severely paralyzed and when he tries to speak, he is very difficult to understand. I’ve known him for several years, and when he speaks, I understand maybe 5 percent of what he’s saying,” says David M. Brandman, a neurosurgeon and co-author of the study. Before working with the UC Davis team, T15 communicated using a gyroscopic head mouse to control a cursor on a computer screen.

To use an early version of Stavisky’s brain-to-text system, the patient had 256 microelectrodes implanted into his ventral precentral gyrus, an area of the brain responsible for controlling vocal tract muscles.

For the new brain-to-speech system, Wairagkar and her colleagues relied on the same 256 electrodes. “We recorded neural activities from single neurons, which is the highest resolution of information we can get from our brain,” Wairagkar says. The signal registered by the electrodes was then sent to an AI algorithm called a neural decoder that deciphered those signals and extracted speech features such as pitch or voicing. In the next step, these features were fed into a vocoder, a speech-synthesizing algorithm designed to sound like the voice that T15 had when he was still able to speak normally. The entire system worked with latency down to around 10 milliseconds, so the conversion of brain signals into sounds was effectively instantaneous.
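To make the shape of that pipeline concrete, here is a toy Python sketch of frame-by-frame streaming: each short frame of electrode activity is decoded into speech features and turned into audio right away, rather than waiting for a finished sentence. Everything in it is an illustrative assumption, not the study’s method: `toy_decoder` and `toy_vocoder` are hypothetical stand-ins (a simple average and a sine generator), and the 16 kHz sample rate and 10 ms frame length are picked only to echo the figures in the article.

```python
import math
import random

SAMPLE_RATE = 16_000   # assumed audio sample rate (not from the study)
FRAME_MS = 10          # assumed frame length, echoing the ~10 ms latency figure
N_CHANNELS = 256       # electrode count reported in the article
SAMPLES_PER_FRAME = SAMPLE_RATE * FRAME_MS // 1000  # 160 samples per frame


def toy_decoder(frame):
    """Hypothetical stand-in for the neural decoder.

    Maps one frame of per-channel activity (values in 0..1) to two
    speech features: a pitch in Hz and a voiced/unvoiced flag.
    """
    mean_activity = sum(frame) / len(frame)
    pitch_hz = 100.0 + 100.0 * mean_activity  # arbitrary illustrative mapping
    voiced = mean_activity > 0.2              # arbitrary illustrative threshold
    return pitch_hz, voiced


def toy_vocoder(pitch_hz, voiced, phase):
    """Hypothetical stand-in for the vocoder.

    Emits one frame of audio: a sine wave at the decoded pitch when
    voiced, silence otherwise. Returns the samples and the updated phase
    so consecutive frames join without a click.
    """
    step = 2 * math.pi * pitch_hz / SAMPLE_RATE
    samples = []
    for _ in range(SAMPLES_PER_FRAME):
        samples.append(math.sin(phase) if voiced else 0.0)
        phase += step
    return samples, phase


def stream_speech(frames):
    """Decode and synthesize one frame at a time, as frames arrive."""
    audio, phase = [], 0.0
    for frame in frames:
        pitch_hz, voiced = toy_decoder(frame)
        chunk, phase = toy_vocoder(pitch_hz, voiced, phase)
        audio.extend(chunk)  # in a live system this chunk would be played immediately
    return audio


# Simulated input: 50 frames of random activity on 256 channels.
random.seed(0)
frames = [[random.random() for _ in range(N_CHANNELS)] for _ in range(50)]
audio = stream_speech(frames)
print(len(audio) / SAMPLE_RATE)  # 0.5 (seconds of audio from 50 frames of 10 ms)
```

The point of the structure is that output delay is bounded by one frame plus processing time, whereas a text-first design cannot start speaking until the text is ready.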

Because Wairagkar’s neural prosthesis converted brain signals into sounds, it didn’t come with a limited selection of supported words. The patient could say anything he wanted, including pseudo-words that weren’t in a dictionary and interjections like “um,” “hmm,” or “uh.” Because the system was sensitive to features like pitch or prosody, he could also vocalize questions, saying the last word in a sentence with a slightly higher pitch, and even sing a short melody.

But Wairagkar’s prosthesis had its limits.

Intelligibility improvements

To test the prosthesis’s performance, Wairagkar’s team first asked human listeners to match a recording of some synthesized speech by the T15 patient with one transcript from a set of six candidate sentences of similar length. Here, the results were perfect, with the system achieving 100 percent intelligibility.

The issues began when the team tried something a bit harder: an open transcription test where listeners had to work without any candidate transcripts. In this second test, the word error rate was 43.75 percent, meaning participants identified a bit more than half of the recorded words correctly. This was certainly an improvement compared to the intelligibility of T15’s unaided speech, where the word error rate in the same test with the same group of listeners was 96.43 percent. But the prosthesis, while promising, was not yet reliable enough to use for day-to-day communication.
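The article does not spell out how the word error rate was computed. As commonly defined, it is the minimum number of word substitutions, deletions, and insertions needed to turn a listener’s transcript into the reference sentence, divided by the number of words in the reference. A minimal Python sketch under that assumption follows; the function name and the example sentences are made up for illustration and are not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning i reference words into nothing costs i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: the listener misses two of six words.
rate = word_error_rate("i would like some water please", "i would like water")
print(f"{rate:.2%}")  # 33.33%
```

Under this definition, a rate of 43.75 percent means that, on average, fewer than half of the reference words needed correcting in the listeners’ transcripts.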

“We’re not at the point where it could be used in open-ended conversations. I think of this as a proof of concept,” Stavisky says. He suggested that one way to improve future designs would be to use more electrodes. “There are a lot of startups right now building BCIs that are going to have over a thousand electrodes. If you think about what we’ve achieved with just 250 electrodes versus what could be done with a thousand or two thousand, I think it would just work,” he argued. And the work to make that happen is already underway.

Paradromics, a BCI-focused startup based in Austin, Texas, wants to go ahead with clinical trials of a speech neural prosthesis and is already seeking FDA approval. “They have a 1,600 electrode system, and they publicly stated they are going to do speech,” Stavisky says. “David Brandman, our co-author, is going to be the lead principal investigator for these trials, and we’re going to do it here at UC Davis.”

Nature, 2025. DOI: 10.1038/s41586-025-09127-3

Jacek Krywko is a freelance science and technology writer who covers space exploration, artificial intelligence research, computer science, and all sorts of engineering wizardry.
