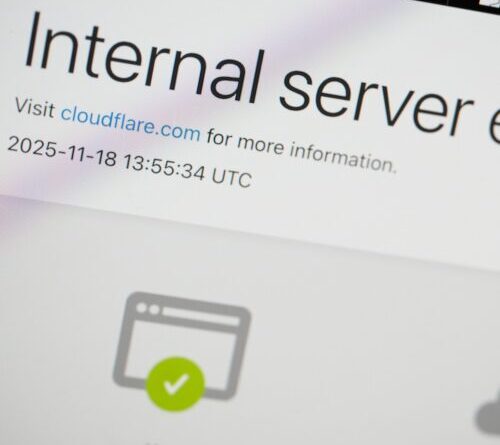

Anthropic’s suggested mitigation for users is to “monitor Claude while using the feature and stop it if you see it using or accessing data unexpectedly,” which places the burden of security on the user. Independent AI researcher Simon Willison, reviewing the feature today on his blog, noted that Anthropic’s advice to “monitor Claude while using the feature” amounts to “unfairly outsourcing the problem to Anthropic’s users.”

Anthropic’s mitigations

Anthropic is not completely ignoring the problem, however, and it has implemented several security measures for the file-creation feature. The company has implemented a classifier that attempts to detect prompt injections and stop execution if they are detected. In addition, for Pro and Max users, Anthropic disabled public sharing of conversations that use the file-creation feature. For Enterprise users, the company implemented sandbox isolation so that environments are never shared between users. The company also limited task duration and container runtime “to avoid loops of malicious activity.”

Anthropic provides an allowlist of domains Claude can access for all users, including api.anthropic.com, github.com, registry.npmjs.org, and pypi.org. Team and Enterprise administrators have control over whether to enable the feature for their organizations.

Anthropic’s documentation states the company has “a continuous process for ongoing security testing and red-teaming of this feature.” The company encourages organizations to “evaluate these protections against their specific security requirements when deciding whether to enable this feature.”

Prompt injections galore

Even with Anthropic’s security measures, Willison says he’ll be cautious. “I plan to be cautious using this feature with any data that I very much don’t want to be leaked to a third party, if there’s even the slightest chance that a malicious instruction might sneak its way in,” he wrote on his blog.

We covered a similar potential prompt-injection vulnerability with Anthropic’s Claude for Chrome, which launched as a research preview last month. For enterprise customers considering Claude for sensitive business documents, Anthropic’s decision to ship with documented vulnerabilities suggests competitive pressure may be overriding security considerations in the AI arms race.

That kind of “ship first, secure it later” philosophy has caused frustration among some AI experts like Willison, who has extensively documented prompt-injection vulnerabilities (and coined the term). He recently described the current state of AI security as “horrifying” on his blog, noting that these prompt-injection vulnerabilities remain widespread “almost three years after we first started talking about them.”

In a prescient warning from September 2022, Willison wrote that “there may be systems that should not be built at all until we have a robust solution.” His recent assessment in the present? “It looks like we built them anyway!”

This story was updated on September 10, 2025 at 9:50 AM to correct information about Anthropic’s red-teaming efforts and to add details to Anthropic’s mitigation measures.
