As scientists create brand-new viruses using AI, experts are examining whether current biosecurity measures can hold up against this potential new threat.

(Image credit: Andriy Onufriyenko via Getty Images)

Scientists have used artificial intelligence (AI) to design new viruses, opening the door to AI-designed forms of life.

The viruses are different enough from existing strains to potentially qualify as new species. They are bacteriophages, which means they attack bacteria, not humans. The authors of the study also took steps to ensure their models couldn't design viruses capable of infecting people, animals or plants.

In a separate study, published Thursday (Oct. 2) in the journal Science, researchers from Microsoft revealed that AI can get around safety measures that would otherwise prevent bad actors from ordering toxic molecules from supply companies.

After uncovering this vulnerability, the research team rushed to create software patches that greatly reduce the risk. The AI design software currently requires specialized expertise and access to particular tools that most members of the public can't use.

Taken together, the new studies highlight the risk that AI could design a new life-form or bioweapon that poses a threat to humans, potentially unleashing a pandemic in a worst-case scenario. So far, AI doesn't have that capability. But experts say a future where it does isn't so far off.

To prevent AI from posing a danger, experts say, we need to build multilayered safety systems, with better screening tools and evolving regulations governing AI-driven biological synthesis.

The dual-use problem

At the heart of the concern with AI-designed viruses, proteins and other biological products is what's known as the "dual-use problem." This refers to any technology or research that could have benefits but could also be used to intentionally cause harm.

A scientist studying infectious diseases might want to genetically modify a virus to learn what makes it more transmissible. But someone aiming to spark the next pandemic could use that same research to engineer a perfect pathogen. Research on aerosol drug delivery can help people with asthma by leading to more effective inhalers, but the designs might also be used to deliver chemical weapons.

Stanford doctoral student Sam King and his supervisor Brian Hie, an assistant professor of chemical engineering, were aware of this double-edged sword. They wanted to build brand-new bacteriophages, or "phages" for short, that could hunt down and kill bacteria in infected patients. Their efforts were described in a preprint posted to the bioRxiv database in September and have not yet been peer-reviewed.

Phages prey on bacteria, and bacteriophages that scientists have sampled from the environment and cultivated in the lab are already being tested as potential add-ons or alternatives to antibiotics. This could help solve the problem of antibiotic resistance and save lives. But phages are viruses, and some viruses are dangerous to humans. That raises the theoretical possibility that the team could inadvertently create a virus that harms people.

The researchers anticipated this risk and tried to mitigate it by ensuring that their AI models were not trained on viruses that infect humans or any other eukaryotes. Eukaryotes are the domain of life that includes plants, animals, and everything that's not a bacterium or archaeon. The team also tested the models to make sure they couldn't independently come up with viruses similar to those known to infect plants or animals.

With safeguards in place, they asked the AI to model its designs on a phage already widely used in laboratory studies. Anyone looking to build a deadly virus would likely have a much easier time using older methods that have been around longer, King said.

“The state of this method right now is that it’s quite challenging and requires a lot of expertise and time,” King told Live Science. “We feel that this doesn’t currently lower the barrier to any more dangerous applications.”

Safety

In a rapidly evolving field, such precautions are being developed on the fly, and it's not yet clear what safety standards will ultimately be sufficient. Researchers say regulations will need to balance the risks of AI-enabled biology against its benefits. What's more, researchers will have to anticipate how AI models might weasel around the obstacles placed in front of them.

“These models are smart,” said Tina Hernandez-Boussard, a professor of medicine at the Stanford University School of Medicine, who consulted on safety for the AI models on viral sequence benchmarks used in the new preprint study. “You have to remember that these models are built to have the highest performance, so once they’re given training data, they can override safeguards.”

Thinking carefully about what to include in and exclude from the AI's training data is a foundational step that can head off many safety problems down the road, she said. In the phage study, the researchers withheld data on viruses that infect eukaryotes from the models. They also ran tests to make sure the models couldn't independently work out genetic sequences that would make their bacteriophages dangerous to humans, and the models didn't.

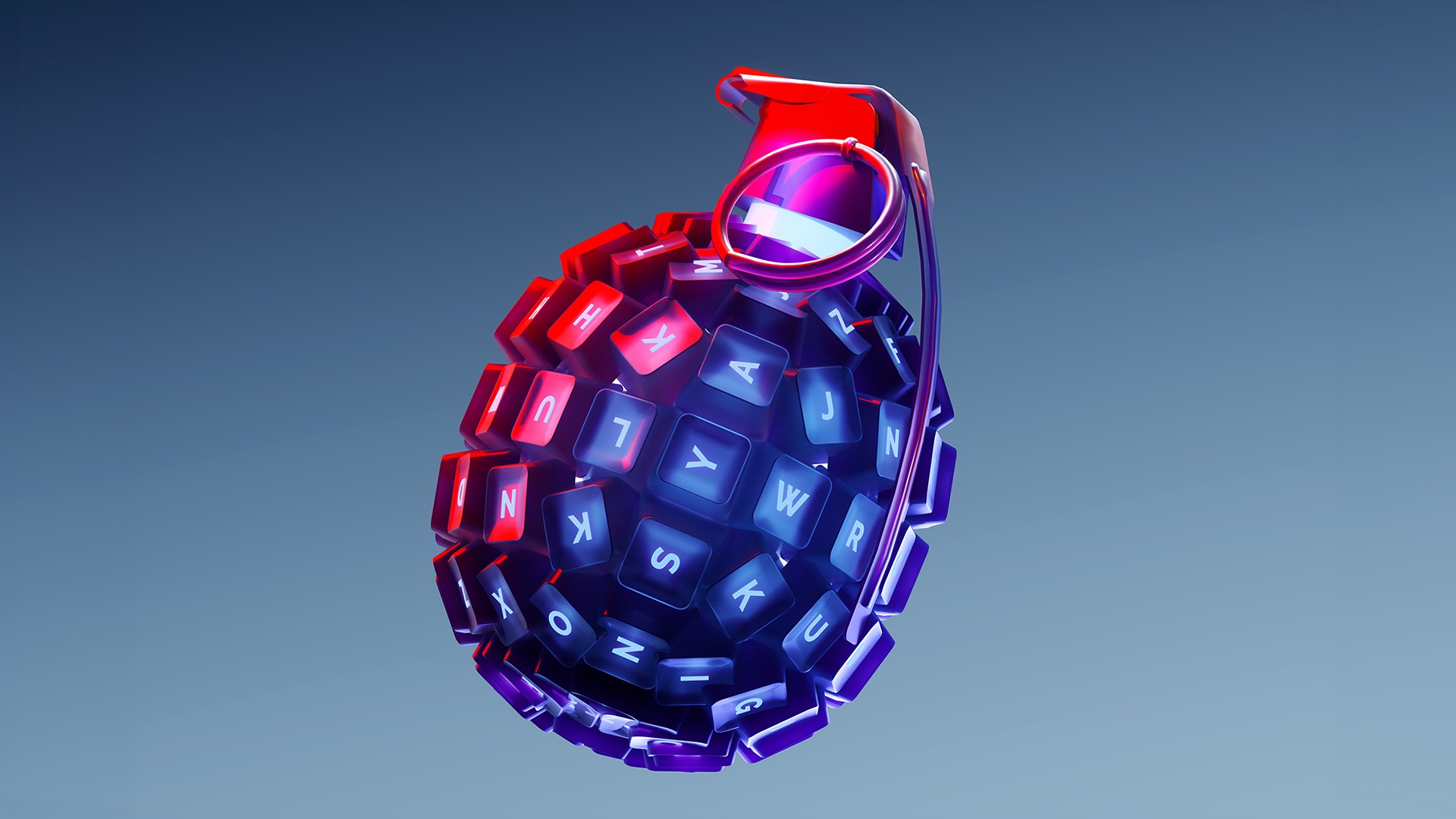

Another thread in the AI safety net involves translating the AI's design, a string of genetic instructions, into an actual protein, virus or other functional biological product. Many leading biotech supply companies use software to ensure that their customers aren't ordering toxic molecules, though this screening is voluntary.

In their new study, Microsoft researchers Eric Horvitz, the company's chief scientific officer, and Bruce Wittmann, a senior applied scientist, found that existing screening software could be fooled by AI designs. These programs compare the genetic sequences in an order with genetic sequences known to produce toxic proteins. But AI can generate very different genetic sequences that are likely to code for the same toxic function. As a result, these AI-generated sequences don't necessarily raise a red flag with the software.
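
To make the comparison step concrete, here is a toy sketch of similarity-based screening. It is not how any of the commercial tools in the study work. It assumes a simple overlap score on k-mers (length-k substrings) with a fixed threshold. The reference "database", its single entry, the function names, `k` and the threshold are all hypothetical placeholders.

```python
from typing import Dict, Set

# Hypothetical reference "database" of sequences of concern. The entry name and
# sequence are made-up placeholders, not real hazardous sequences.
REFERENCES: Dict[str, str] = {
    "hypothetical_entry": "ATGGCTAGCTTGACCGGATCCAAGCTTGAATTCTGA",
}


def kmers(seq: str, k: int) -> Set[str]:
    """Return the set of all length-k substrings (k-mers) of a DNA string."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Shared k-mers divided by all distinct k-mers (0.0 = no overlap, 1.0 = identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def screen_order(order: str, references: Dict[str, str],
                 k: int = 8, threshold: float = 0.5) -> Dict[str, float]:
    """Flag every reference whose k-mer similarity to the ordered sequence meets
    the (assumed) threshold; an empty result means nothing was flagged."""
    order_kmers = kmers(order, k)
    hits: Dict[str, float] = {}
    for name, ref in references.items():
        score = jaccard(order_kmers, kmers(ref, k))
        if score >= threshold:
            hits[name] = round(score, 3)
    return hits


if __name__ == "__main__":
    # An order identical to the reference is flagged with similarity 1.0.
    print(screen_order(REFERENCES["hypothetical_entry"], REFERENCES))  # {'hypothetical_entry': 1.0}
    # An order sharing no 8-mers with the reference is not flagged.
    print(screen_order("C" * 30, REFERENCES))  # {}
```

The sketch judges an order only by how much of its sequence overlaps a reference. An order with little overlap therefore scores low regardless of what it encodes, which is the kind of gap the researchers describe.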

The researchers borrowed a process from cybersecurity to alert trusted experts and professional organizations to the problem, and they launched a collaboration to patch the software. “Months later, patches were rolled out globally to strengthen biosecurity screening,” Horvitz said at a Sept. 30 press conference.

These patches reduced the risk, though across four commonly used screening tools, about 3% of potentially dangerous gene sequences still slipped through, Horvitz and colleagues reported.

The researchers had to consider security even when publishing their study. Scientific papers are meant to be replicable, meaning other researchers should have enough information to check the findings. But publishing all of the details about the sequences and software could tip off bad actors to ways of getting around the security patches.

“There was an obvious tension in the air among peer reviewers about, ‘How do we do this?'” Horvitz said.

The team ultimately settled on a tiered access system. Researchers who want to see the sensitive data will apply to the International Biosecurity and Biosafety Initiative for Science (IBBIS), which will act as a neutral third party to evaluate each request. Microsoft has created an endowment to pay for this service and to host the data.

It’s the first time a leading science journal has endorsed such a method of sharing data, said Tessa Alexanian, the technical lead at Common Mechanism, a genetic sequence screening tool provided by IBBIS. “This managed access program is an experiment and we’re very eager to evolve our approach,” she said.

What else can be done?

There is not yet much regulation around AI tools. Screenings like the ones studied in the new Science paper are voluntary. And there are devices that can build proteins right in the lab, with no third party required. That means a bad actor could use AI to design dangerous molecules and make them without any gatekeepers.

There is, however, growing guidance on biosecurity from professional consortiums and governments alike. A 2023 presidential executive order in the U.S. called for a focus on safety, including “robust, reliable, repeatable, and standardized evaluations of AI systems” and policies and institutions to mitigate risk. The Trump administration is working on a framework that will limit federal research and development funds for companies that don't carry out safety screenings, Diggans said.

“We’ve seen more policymakers interested in adopting incentives for screening,” Alexanian said.

In the United Kingdom, a state-backed organization called the AI Security Institute aims to foster policies and standards that mitigate the risks from AI. The institute is funding research projects focused on safety and risk mitigation. These include defending AI systems against misuse, defending against third-party attacks (such as injecting corrupted data into AI training systems), and finding ways to prevent public, open-use models from being used for harmful ends.

The good news is that as AI-designed genetic sequences become more complex, screening tools actually get more information to work with. That means whole-genome designs, like King and Hie's bacteriophages, would be fairly easy to screen for potential dangers.

“In general, synthesis screening operates better on more information than less,” Diggans said. “So at the genome scale, it’s incredibly informative.”

Microsoft is collaborating with government agencies on ways to use AI to detect AI misuse. For instance, Horvitz said, the company is looking for ways to sift through large amounts of sewage and air-quality data to find evidence that dangerous toxins, proteins or viruses are being manufactured. “I think we’ll see screening moving outside of that single site of nucleic acid [DNA] synthesis and across the whole ecosystem,” Alexanian said.

And while AI could in theory design a new genome for a new species of bacteria, archaea or a more complex organism, there is currently no easy way to turn those AI-generated instructions into a living organism in the lab, King said. Threats from AI-designed life aren't immediate, but they're not impossibly far off. Given the new horizons AI is likely to open up, the field as a whole needs to get creative, Hernandez-Boussard said.

“There’s a role for funders, for publishers, for industry, for academics,” she said, “for, really, this multidisciplinary community to require these safety evaluations.”

Stephanie Pappas is a contributing writer for Live Science, covering topics ranging from geoscience to archaeology to the human brain and behavior. She was previously a senior writer for Live Science but is now a freelancer based in Denver, Colorado, and regularly contributes to Scientific American and The Monitor, the monthly magazine of the American Psychological Association. Stephanie received a bachelor's degree in psychology from the University of South Carolina and a graduate certificate in science communication from the University of California, Santa Cruz.
