As an Amazon Associate I earn from qualifying purchases.

Running the AI News Gauntlet

News about Gemini updates, Llama 3.2, and Google’s new AI-powered chip designer.

Benj Edwards

Sep 27, 2024 8:50 pm UTC

There’s been a lot of AI news this week, and covering it sometimes feels like running through a hall full of dangling CRTs, just like this Getty Images illustration.

It’s been a wildly busy week in AI news thanks to OpenAI, including a controversial post from CEO Sam Altman, the wide rollout of Advanced Voice Mode, reports of 5GW data centers, major staff shake-ups, and significant restructuring plans.

But the rest of the AI world doesn’t march to the same beat. It does its own thing, churning out new AI models and research by the minute. Here’s a roundup of some other notable AI news from the past week.

Google Gemini updates

On Tuesday, Google announced updates to its Gemini model lineup, including the release of two new production-ready models that iterate on past releases: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The company reported improvements in overall quality, with notable gains in math, long context handling, and vision tasks. Google claims a 7 percent increase in performance on the MMLU-Pro benchmark and a 20 percent improvement in math-related tasks. But as you know if you’ve been reading Ars Technica for a while, AI benchmarks typically aren’t as useful as we would like them to be.

Along with the model upgrades, Google introduced substantial price reductions for Gemini 1.5 Pro, cutting input token costs by 64 percent and output token costs by 52 percent for prompts under 128,000 tokens. As AI researcher Simon Willison noted on his blog, “For comparison, GPT-4o is currently $5/[million tokens] input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the cheapest of the frontier models and now it’s even cheaper.”
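To make the per-million-token pricing concrete, here is a minimal Python sketch of the arithmetic. The GPT-4o and Claude 3.5 Sonnet prices are the ones Willison quotes above; the function names, the example workload, and the $10.00 starting price passed to `apply_cut` are hypothetical and chosen only for illustration (the article does not state Gemini's dollar prices).

```python
# Prices in USD per million tokens, as quoted by Simon Willison above.
PRICES_PER_MILLION = {
    "GPT-4o": {"input": 5.00, "output": 15.00},
    "Claude 3.5 Sonnet": {"input": 3.00, "output": 15.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at per-million-token prices."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


def apply_cut(old_price: float, percent_cut: float) -> float:
    """Return a price after a percentage reduction (e.g. 64 means 64 percent off)."""
    return old_price * (1 - percent_cut / 100)


if __name__ == "__main__":
    # Hypothetical workload: 100,000 input tokens and 2,000 output tokens.
    for model in PRICES_PER_MILLION:
        print(model, round(request_cost(model, 100_000, 2_000), 4))

    # Hypothetical starting price of $10.00 per million tokens, used only
    # to show what 64 and 52 percent cuts do to a price.
    print(round(apply_cut(10.00, 64), 2), round(apply_cut(10.00, 52), 2))
```

Because output tokens cost more than input tokens for both quoted models, the input/output mix of a workload matters as much as the headline price.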

Google also increased rate limits, with Gemini 1.5 Flash now supporting 2,000 requests per minute and Gemini 1.5 Pro handling 1,000 requests per minute. Google reports that the latest models offer twice the output speed and three times lower latency compared to previous versions. These changes may make it easier and more cost-effective for developers to build applications with Gemini than before.
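For developers wondering what those per-minute limits mean in practice, the following is a rough Python sketch of client-side pacing. It assumes the limit is spread evenly across the minute, which is a simplification and not a description of how Google enforces the limit. The `Pacer` class and its parameters are hypothetical, and no Gemini API calls are made.

```python
import time

# Requests-per-minute limits as reported in the article.
RATE_LIMITS_RPM = {"Gemini 1.5 Flash": 2000, "Gemini 1.5 Pro": 1000}


def min_interval_seconds(rpm: int) -> float:
    """Smallest gap between requests that stays within an evenly spread limit."""
    return 60.0 / rpm


class Pacer:
    """Hypothetical helper that spaces calls at least one interval apart."""

    def __init__(self, rpm: int, clock=time.monotonic, sleep=time.sleep):
        self.interval = min_interval_seconds(rpm)
        self.clock = clock          # injectable so the pacing can be tested
        self.sleep = sleep
        self.next_allowed = None    # earliest time the next call may start

    def wait(self) -> None:
        """Block until the next request is allowed, then reserve a slot."""
        now = self.clock()
        if self.next_allowed is not None and now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval


if __name__ == "__main__":
    for name, rpm in RATE_LIMITS_RPM.items():
        print(name, min_interval_seconds(rpm), "seconds between requests")
```

A caller would invoke `pacer.wait()` before each request. Real services often allow short bursts, so even spacing like this is a conservative choice.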

Meta launches Llama 3.2

On Wednesday, Meta announced the release of Llama 3.2, a significant update to its open-weights AI model lineup that we have covered extensively in the past. The new release includes vision-capable large language models (LLMs) in 11B and 90B parameter sizes, as well as lightweight text-only models of 1B and 3B parameters designed for edge and mobile devices. Meta claims the vision models are competitive with leading closed-source models on image recognition and visual understanding tasks, while the smaller models reportedly outperform similar-sized competitors on various text-based tasks.

Willison ran some experiments with a few of the smaller 3.2 models and reported impressive results for the models’ size. AI researcher Ethan Mollick showed off running Llama 3.2 on his iPhone using an app called PocketPal.

Meta also introduced the first official “Llama Stack” distributions, created to simplify development and deployment across different environments. As with previous releases, Meta is making the models available for free download, with license restrictions. The new models support long context windows of up to 128,000 tokens.

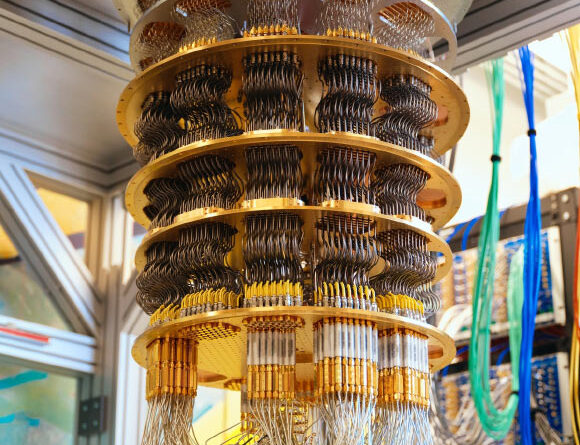

Google’s AlphaChip AI speeds up chip design

On Thursday, Google DeepMind announced what appears to be a significant advancement in AI-driven electronic chip design, AlphaChip. It began as a research project in 2020 and is now a reinforcement learning method for designing chip layouts. Google has reportedly used AlphaChip to create “superhuman chip layouts” in the last three generations of its Tensor Processing Units (TPUs), which are chips similar to GPUs designed to accelerate AI operations. Google claims AlphaChip can generate high-quality chip layouts in hours, compared to weeks or months of human effort. (Reportedly, Nvidia has also been using AI to help design its chips.)

Notably, Google also released a pre-trained checkpoint of AlphaChip on GitHub, sharing the model weights with the public. The company reported that AlphaChip’s impact has already extended beyond Google, with chip design companies like MediaTek adopting and building on the technology for their chips. According to Google, AlphaChip has sparked a new line of research in AI for chip design, potentially optimizing every stage of the chip design cycle from computer architecture to manufacturing.

That wasn’t everything that happened, but those are some major highlights. With the AI industry showing no signs of slowing down at the moment, we’ll see how next week goes.

