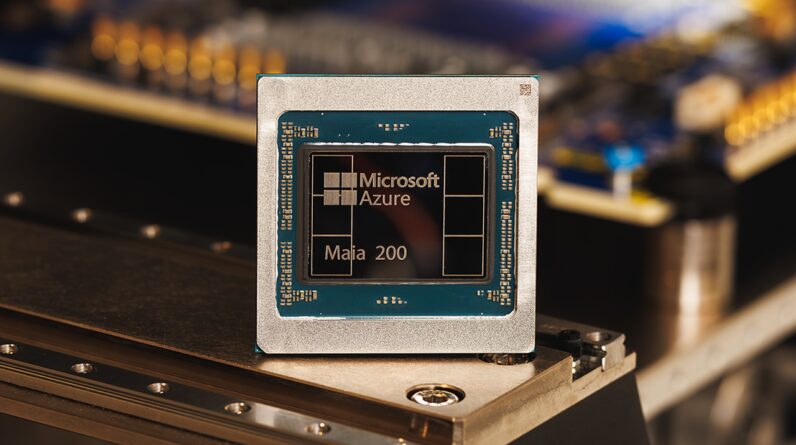

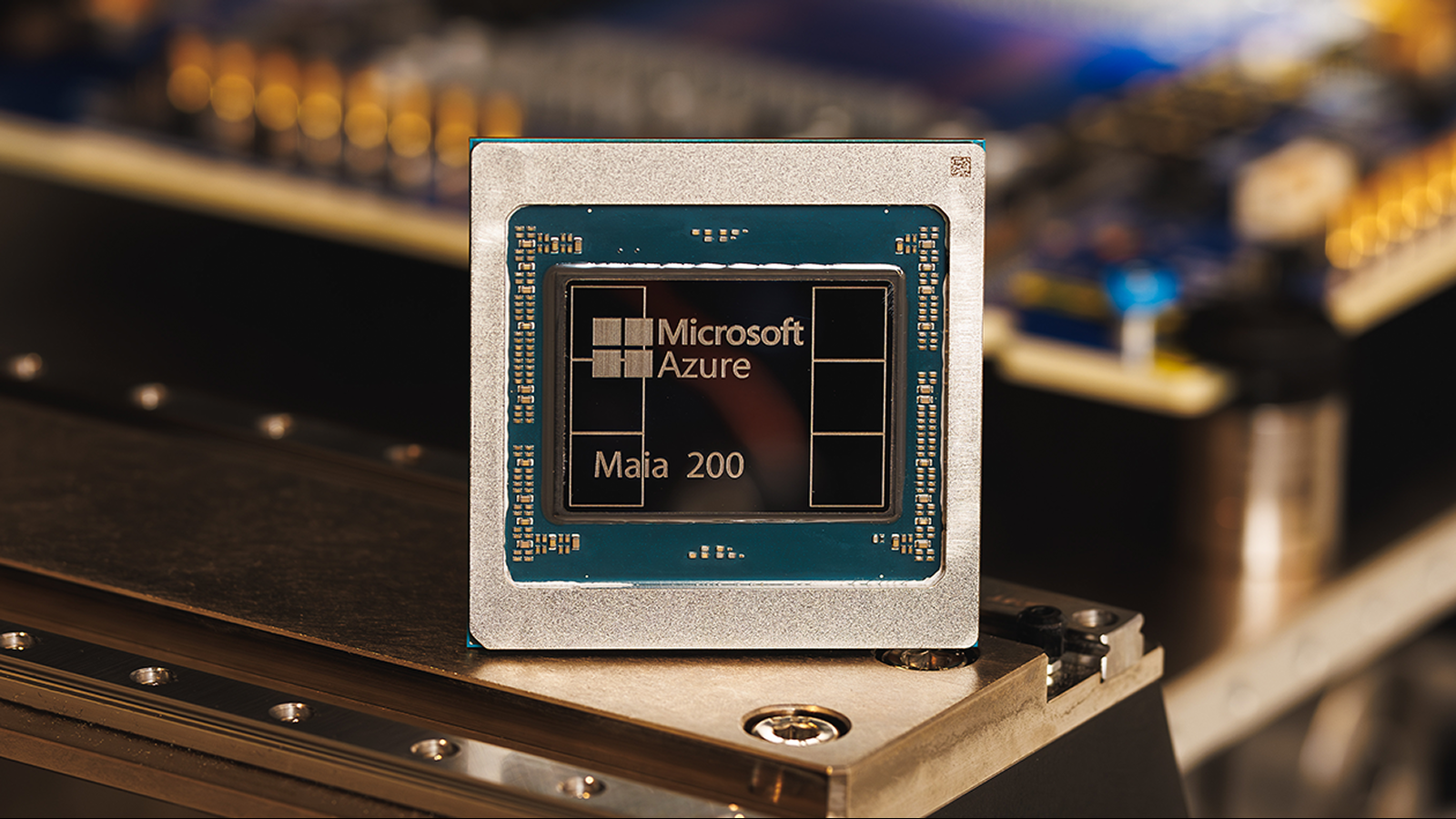

(Image credit: Microsoft)

Microsoft has revealed its new Maia 200 accelerator chip for artificial intelligence (AI), which is three times more powerful than hardware from rivals like Google and Amazon, company representatives say.

This latest chip will be used for AI inference rather than training, powering systems and agents that make predictions, answer queries and generate outputs based on new data that's fed to them.

The new chip delivers performance of more than 10 petaflops (one petaflop is 10^15 floating point operations per second), Scott Guthrie, cloud and AI executive vice president at Microsoft, said in a blog post. This is a measure of performance in supercomputing, where the most powerful supercomputers in the world can reach more than 1,000 petaflops.

The new chip achieved this performance level in a data representation category known as “4-bit precision (FP4),” a highly compressed format designed to accelerate AI performance. Maia 200 also delivers 5 PFLOPS of performance in 8-bit precision (FP8). The difference between the two is that FP4 is far more energy efficient but less accurate.
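To make the accuracy trade-off concrete, here is a minimal Python sketch of what rounding to 4 bits does to a handful of numbers. The article does not say which FP4 layout Maia 200 uses, so the sketch assumes the common E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit). The names `FP4_MAGNITUDES`, `FP4_VALUES` and `quantize_fp4`, and the sample weights, are illustrative only. Real hardware typically pairs such values with per-block scale factors, which are omitted here.

```python
# Assumption: "FP4" means the E2M1 layout; the article does not specify this.
# With 4 bits there are only 16 codes; E2M1 spends them on these magnitudes.
FP4_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]

# All distinct representable values (positive and negative zero count once).
FP4_VALUES = sorted([-m for m in FP4_MAGNITUDES if m > 0] + FP4_MAGNITUDES)


def quantize_fp4(x: float) -> float:
    """Round x to the nearest representable value, saturating at +/-6."""
    return min(FP4_VALUES, key=lambda v: abs(v - x))


# Hypothetical weights, chosen only to illustrate the rounding error.
weights = [0.1, 0.7, 1.2, 2.4, 5.1]
quantized = [quantize_fp4(w) for w in weights]
errors = [abs(w - q) for w, q in zip(weights, quantized)]

for w, q, e in zip(weights, quantized, errors):
    print(f"{w:>4} -> {q:>4}  (error {e:.2f})")
```

An 8-bit format has 256 codes rather than 16, so the gaps between representable values are much smaller. That is the accuracy FP4 gives up in exchange for moving and multiplying half as many bits.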

“In practical terms, one Maia 200 node can effortlessly run today’s largest models, with plenty of headroom for even bigger models in the future,” Guthrie said in the blog post. “This means Maia 200 delivers 3 times the FP4 performance of the third generation Amazon Trainium, and FP8 performance above Google’s seventh generation TPU.”

Chips ahoy

Maia 200 could potentially be used for specialist AI workloads, such as running larger LLMs in the future. So far, Microsoft’s Maia chips have only been used in the Azure cloud infrastructure to run large-scale workloads for Microsoft’s own AI services, notably Copilot. However, Guthrie noted there would be “wider customer availability in the future,” signaling that other organizations could tap into Maia 200 via the Azure cloud, or that the chips could one day be deployed in standalone data centers or server stacks.

Guthrie said that Microsoft boasts 30% better performance per dollar over existing systems thanks to the use of the 3-nanometer process made by the Taiwan Semiconductor Manufacturing Company (TSMC), the most important chip fabricator in the world, allowing for 100 billion transistors per chip. This essentially means that Maia 200 could be more cost-effective and efficient than existing chips for the most demanding AI workloads.
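As a rough illustration of what the performance-per-dollar figure implies, the short Python sketch below converts a 30% gain into the relative cost of running a fixed workload. It assumes the gain applies uniformly to the whole workload, which the article does not claim. The function name `relative_cost` is hypothetical.

```python
def relative_cost(perf_per_dollar_gain: float) -> float:
    """Cost of a fixed workload relative to the baseline system.

    A gain of 0.30 means each dollar buys 1.30x the work, so the same
    work costs 1 / 1.30 of the baseline price.
    """
    return 1.0 / (1.0 + perf_per_dollar_gain)


cost = relative_cost(0.30)  # 30% figure quoted in the article
savings = 1.0 - cost
print(f"relative cost: {cost:.3f}, savings: {savings:.1%}")
```

Under that assumption, 30% better performance per dollar works out to roughly 23% lower cost for the same work, not 30% lower.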

Maia 200 has a few other features alongside better performance and efficiency. It includes a memory system, for instance, which can help keep an AI model’s weights and data local, meaning less hardware would be needed to run a model. It’s also designed to be quickly integrated into existing data centers.

Maia 200 should enable AI models to run faster and more efficiently. This means Azure OpenAI users, such as scientists, developers and corporations, could see better throughput and speeds when developing AI applications and using the likes of GPT-4 in their operations.

This next-generation AI hardware is unlikely to disrupt everyday AI and chatbot use for most people in the short term, as Maia 200 is designed for data centers rather than consumer-grade hardware. However, end users could see the impact of Maia 200 in the form of faster response times and potentially more advanced features from Copilot and other AI tools built into Windows and Microsoft products.

Maia 200 could also provide a performance boost to developers and scientists who use AI inference via Microsoft’s platforms. This, in turn, could lead to improvements in AI deployment on large-scale research projects and in areas like advanced weather modeling and the modeling of biological or chemical systems and compositions.

Roland Moore-Colyer is a freelance writer for Live Science and managing editor at consumer tech publication TechRadar, running the Mobile Computing vertical. At TechRadar, one of the U.K. and U.S.’ largest consumer technology websites, he focuses on smartphones and tablets. Beyond that, he taps into more than a decade of writing experience to bring people stories that cover electric vehicles (EVs), the evolution and practical use of artificial intelligence (AI), mixed reality products and use cases, and the evolution of computing both on a macro level and from a consumer angle.