Can a small machine that makes error correction much easier upend the market?

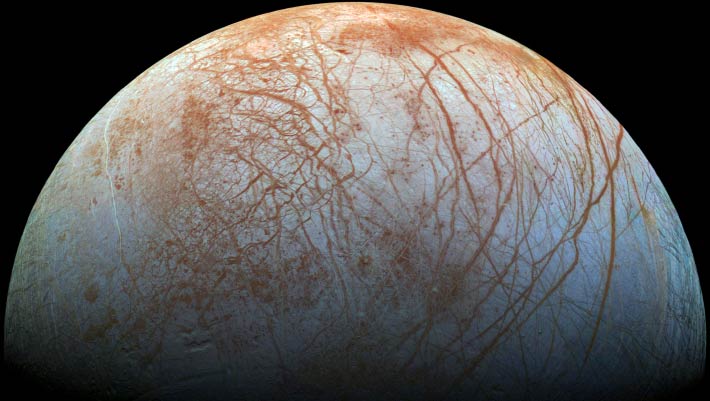

A graphic representation of the two resonance cavities that can hold photons, along with a channel that lets the photon move between them.

Credit: Quantum Circuits

We’re nearing the end of the year, and there is typically a flood of announcements about quantum computers around now, in part because some companies want to live up to promised schedules. Most of these involve evolutionary improvements on previous generations of hardware. But this year, we have something new: the first company to market with a new qubit technology.

The technology is called a dual-rail qubit, and it is intended to make the most common form of error trivially easy to detect in hardware, thus making error correction far more efficient. And, while tech giant Amazon has been experimenting with them, a startup called Quantum Circuits is the first to give the public access to dual-rail qubits through a cloud service.

While the tech is interesting on its own, it also provides us with a window into how the field as a whole is thinking about getting error-corrected quantum computing to work.

What’s a dual-rail qubit?

Dual-rail qubits are variants of the hardware used in transmons, the qubits favored by companies like Google and IBM. The basic hardware unit links a loop of superconducting wire to a tiny cavity that allows microwave photons to resonate. This setup allows the presence of microwave photons in the resonator to influence the behavior of the current in the wire and vice versa. In a transmon, microwave photons are used to control the current. But there are other companies that have hardware that does the reverse, controlling the state of the photons by altering the current.

Dual-rail qubits use two of these systems linked together, allowing photons to move from one resonator to the other. Using the superconducting loops, it’s possible to control the probability that a photon will end up in the left or right resonator. The actual location of the photon will remain unknown until it’s measured, allowing the system as a whole to hold a single bit of quantum information: a qubit.

This has an obvious disadvantage: You have to build twice as much hardware for the same number of qubits. So why bother? Because the vast majority of errors involve the loss of the photon, and that’s easily detected. “It’s about 90 percent or more [of the errors],” said Quantum Circuits’ Andrei Petrenko. “So it’s a huge advantage that we have with photon loss over other errors. And that’s actually what makes the error correction a lot more efficient: The fact that photon losses are by far the dominant error.”

Petrenko said that, without doing a measurement that would disrupt the storage of the qubit, it’s possible to determine if there is an odd number of photons in the hardware. If that isn’t the case, you know an error has occurred, most likely a photon loss (gains of photons are rare but do occur). For simple algorithms, this would be a signal to simply start over.
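To make the detect-and-restart idea concrete, here is a minimal Python sketch. It is a toy model, not Quantum Circuits’ software: the function names are hypothetical, photon counts are tracked as plain integers (real hardware only reveals the parity), and the 10 percent per-run error probability used in the usage note is an illustrative assumption. Only the roughly 90 percent share of errors that are photon losses comes from the article.

```python
import random


def parity_ok(photons_left: int, photons_right: int) -> bool:
    """A healthy dual-rail qubit holds exactly one photon, so the total is odd."""
    return (photons_left + photons_right) % 2 == 1


def accepted_error_rate(p_error: float, loss_share: float) -> float:
    """Error rate among runs that pass the check, assuming every loss is flagged."""
    flagged = p_error * loss_share          # runs discarded by the parity check
    silent = p_error * (1.0 - loss_share)   # errors the check cannot see
    return silent / (1.0 - flagged)


def run_until_clean(p_error: float, loss_share: float,
                    rng: random.Random, max_tries: int = 1000) -> int:
    """Repeat a toy computation until no photon loss is flagged; return the attempt count."""
    for attempt in range(1, max_tries + 1):
        lost = rng.random() < p_error * loss_share
        photons = (0, 0) if lost else (1, 0)  # a lost photon leaves both cavities empty
        if parity_ok(*photons):
            return attempt
    raise RuntimeError("no clean run within max_tries")
```

Under these assumptions, `accepted_error_rate(0.10, 0.9)` is about 0.011: discarding the flagged runs cuts the error rate of the surviving runs by roughly a factor of nine, at the cost of rerunning about 9 percent of attempts.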

But it does not eliminate the need for error correction if we want to do more complex computations that can’t make it to completion without encountering an error. There’s still the remaining 10 percent of errors, which are primarily something called a phase flip that is distinct to quantum systems. Bit flips are even rarer in dual-rail setups. Finally, simply knowing that a photon was lost doesn’t tell you everything you need to know to fix the problem; error-correction measurements of other parts of the logical qubit are still needed to repair the damage.

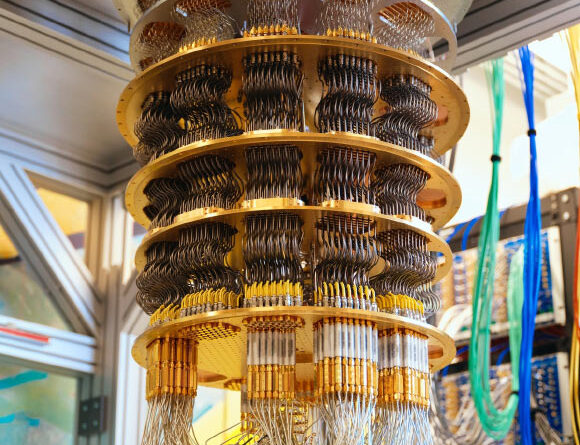

The layout of the new machine. Each qubit (gray square) involves a left and right resonance chamber (blue dots) that a photon can move between. Each of the qubits has connections that allow entanglement with its nearest neighbors.

Credit: Quantum Circuits

The initial hardware that’s being made available is too small to even approach useful computations. Instead, Quantum Circuits chose to link eight qubits with nearest-neighbor connections in order to allow it to host a single logical qubit that enables error correction. Put differently: this machine is meant to enable people to learn how to use the unique features of dual-rail qubits to improve error correction.

One consequence of having this distinctive hardware is that the software stack that controls operations needs to take advantage of its error detection capabilities. None of the other hardware on the market can be directly queried to determine whether it has encountered an error. So, Quantum Circuits has had to develop its own software stack to allow users to actually benefit from dual-rail qubits. Petrenko said that the company also chose to provide access to its hardware through its own cloud service because it wanted to connect directly with the early adopters in order to better understand their needs and expectations.

Numbers or noise?

Given that a number of companies have already released multiple revisions of their quantum hardware and have scaled them into hundreds of individual qubits, it may seem a bit strange to see a company enter the market now with a machine that has just a handful of qubits. But amazingly, Quantum Circuits isn’t alone in planning a relatively late entry into the market with hardware that only hosts a few qubits.

Having talked with several of them, I can say there is a logic to what they’re doing. What follows is my attempt to convey that logic in a general form, without focusing on any single company’s case.

Everyone agrees that the future of quantum computation is error correction, which requires linking together multiple hardware qubits into a single unit termed a logical qubit. To get really robust, error-free performance, you have two choices. One is to devote lots of hardware qubits to the logical qubit, so you can handle multiple errors at once. Or you can lower the error rate of the hardware, so that you can get a logical qubit with equivalent performance while using fewer hardware qubits. (The two options aren’t mutually exclusive, and everyone will need to do a bit of both.)
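The trade-off between the two choices can be sketched numerically. The Python below is only an illustration under loudly stated assumptions that do not come from the article: it uses the rotated surface code (2d² − 1 hardware qubits per logical qubit at code distance d) and a commonly quoted scaling heuristic, prefactor × (p / p_th)^((d+1)/2), with an assumed threshold of 1 percent and an assumed prefactor of 0.03. The function names are hypothetical, and dual-rail hardware may well use a different code with different numbers.

```python
def logical_error_rate(p: float, d: int,
                       p_th: float = 1e-2, prefactor: float = 0.03) -> float:
    """Heuristic logical error rate at code distance d; all constants are assumed."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def hardware_qubits(d: int) -> int:
    """Data plus measurement qubits in one rotated surface-code patch of distance d."""
    return 2 * d * d - 1


def smallest_patch(p: float, target: float, max_d: int = 201) -> tuple[int, int]:
    """Return (distance, hardware qubits) for the smallest odd distance meeting the target."""
    for d in range(3, max_d + 1, 2):
        if logical_error_rate(p, d) <= target:
            return d, hardware_qubits(d)
    raise ValueError("target not reachable below max_d")


# Same logical target, two different hardware error rates.
print(smallest_patch(1e-3, 1e-12))  # (21, 881)
print(smallest_patch(1e-4, 1e-12))  # (11, 241)
```

With these assumed constants, a tenfold drop in the hardware error rate shrinks the logical qubit from 881 to 241 hardware qubits for the same target, which is the shape of the argument even if the real figures differ.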

The two options pose very different challenges. Improving the hardware error rate means diving into the physics of individual qubits and the hardware that controls them. In other words, getting lasers that have fewer of the inevitable fluctuations in frequency and energy. Or figuring out how to manufacture loops of superconducting wire with fewer defects or handle stray charges on the surface of electronics. These are relatively hard problems.

By contrast, scaling qubit count largely involves being able to consistently do something you already know how to do. So, if you already know how to make good superconducting wire, you simply need to make a few thousand instances of that wire instead of a few dozen. The electronics that will trap an atom can be made in a way that will make it easier to make them thousands of times. These are mostly engineering problems, and generally of similar complexity to problems we’ve already solved to make the electronics revolution happen.

In other words, within limits, scaling is a much easier problem to solve than errors. It’s still going to be extremely difficult to get the millions of hardware qubits we’d need to error correct complex algorithms on today’s hardware. But if we can get the error rate down a bit, we can use smaller logical qubits and might only need 10,000 hardware qubits, which will be more approachable.

Errors first

And there’s evidence that even the early entries in quantum computing have reasoned the same way. Google has been working through iterations of the same chip design since its 2019 quantum supremacy announcement, focusing on understanding the errors that occur on improved versions of that chip. IBM made hitting the 1,000 qubit mark a major goal but has since been focused on reducing the error rate in smaller processors. Someone at a quantum computing startup once told us it would be trivial to trap more atoms in its hardware and boost the qubit count, but there wasn’t much point in doing so given the error rates of the qubits on the then-current generation machine.

The new companies entering this market now are making the argument that they have a technology that will either radically reduce the error rate or make handling the errors that do occur much easier. Quantum Circuits clearly falls into the latter category, as dual-rail qubits are entirely about making the most common form of error trivial to detect. The former category includes companies like Oxford Ionics, which has indicated it can perform single-qubit gates with a fidelity of over 99.9991 percent. Or Alice & Bob, which stores qubits in the behavior of multiple photons in a single resonance cavity, making them very robust to the loss of individual photons.

These companies are betting that they have distinct technology that will let them handle error rate issues more effectively than established players. That will lower the total scaling they need to do, and scaling will be an easier problem overall, and one that they may already have the pieces in place to handle. Quantum Circuits’ Petrenko, for example, told Ars, “I think that we’re at the point where we’ve gone through a number of iterations of this qubit architecture where we’ve de-risked a number of the engineering roadblocks.” And Oxford Ionics told us that if they could make the electronics they use to trap ions in their hardware once, it would be easy to mass manufacture them.

None of this should imply that these companies will have it easy compared to a startup that already has experience with both reducing errors and scaling, or a giant like Google or IBM that has the resources to do both. But it does explain why, even at this stage in quantum computing’s development, we’re still seeing startups enter the field.

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
