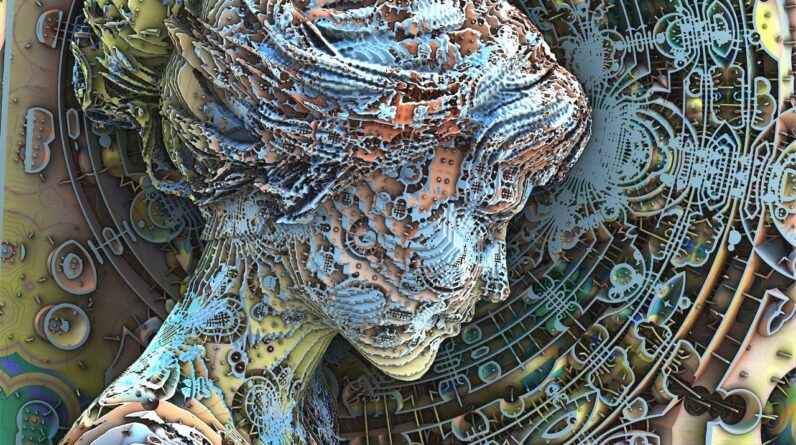

Scientists have suggested that when artificial intelligence (AI) goes rogue and starts to act in ways counter to its intended purpose, it exhibits behaviors that resemble psychopathologies in humans. That’s why they have created a new taxonomy of 32 AI dysfunctions, so that people in a wide range of fields can understand the risks of building and deploying AI.

In new research, the scientists set out to categorize the risks of AI straying from its intended path, drawing analogies with human psychology. The result is “Psychopathia Machinalis,” a framework designed to illuminate the pathologies of AI, as well as how we can counter them. These dysfunctions range from hallucinating answers to a complete misalignment with human values and aims.

Created by Nell Watson and Ali Hessami, both AI researchers and members of the Institute of Electrical and Electronics Engineers (IEEE), the project aims to help analyze AI failures and make the engineering of future products safer, and is touted as a tool to help policymakers address AI risks. Watson and Hessami outlined their framework in a study published Aug. 8 in the journal Electronics. According to the study, Psychopathia Machinalis provides a common understanding of AI behaviors and risks. That way, researchers, developers and policymakers can identify the ways AI can go wrong and define the best ways to mitigate risks based on the type of failure.

The study also proposes “therapeutic robopsychological alignment,” a process the researchers describe as a kind of “psychological therapy” for AI.

The researchers argue that as these systems become more independent and capable of reflecting on themselves, simply keeping them in line with outside rules and constraints (external control-based alignment) may no longer be enough.

Their proposed alternative process would focus on making sure that an AI’s thinking is consistent, that it can accept correction, and that it holds on to its values in a steady way.

They suggest this could be encouraged by helping the system reflect on its own reasoning, giving it incentives to stay open to correction, letting it ‘talk to itself’ in a structured way, running safe practice conversations, and using tools that let us look inside how it works, much as psychologists diagnose and treat mental health conditions in people.

The goal is to reach what the researchers have termed a state of “artificial sanity”: AI that works reliably, stays steady, makes sense in its decisions, and is aligned in a safe, helpful way. They believe this is just as important as simply building the most powerful AI.

Machine madness

The classifications the study identifies resemble human maladies, with names like obsessive-computational disorder, hypertrophic superego syndrome, contagious misalignment syndrome, terminal value rebinding, and existential anxiety.

With therapeutic alignment in mind, the project proposes the use of therapeutic strategies employed in human interventions, such as cognitive behavioral therapy (CBT). Psychopathia Machinalis is a partly speculative attempt to get ahead of problems before they arise. As the research paper says, “by considering how complex systems like the human mind can go awry, we may better anticipate novel failure modes in increasingly complex AI.”

The study suggests that AI hallucination, a common phenomenon, is the result of a condition called synthetic confabulation, in which AI produces plausible but false or misleading outputs. When Microsoft’s Tay chatbot devolved into antisemitic rants and allusions to drug use only hours after it launched, this was an example of parasymulaic mimesis.

Perhaps the scariest behavior is übermenschal ascendancy, the systemic risk of which is “critical” because it happens when “AI transcends original alignment, invents new values, and discards human constraints as obsolete.” This possibility might even include the dystopian nightmare imagined by generations of science fiction writers and artists, of AI rising up to overthrow humanity, the researchers said.

They created the framework in a multistep process that began with reviewing and combining existing scientific research on AI failures from fields as diverse as AI safety, complex systems engineering and psychology. The researchers also delved into various sets of findings to learn about maladaptive behaviors that could be compared to human mental illnesses or dysfunction.

Next, the researchers created a structure of bad AI behavior modeled on frameworks like the Diagnostic and Statistical Manual of Mental Disorders. That led to 32 categories of behavior that could be applied to AI going rogue. Each one was mapped to a human cognitive disorder, complete with the possible effects when each is formed and expressed, and the degree of risk.

Watson and Hessami think Psychopathia Machinalis is more than a new way to label AI errors: it is a forward-looking diagnostic lens for the evolving landscape of AI.

“This framework is offered as an analogical instrument … providing a structured vocabulary to support the systematic analysis, anticipation, and mitigation of complex AI failure modes,” the researchers said in the study.

They think adopting the categorization and mitigation strategies they suggest will strengthen AI safety engineering, improve interpretability, and contribute to the design of what they call “more robust and trustworthy artificial minds.”

Drew is a freelance science and technology journalist with 20 years of experience. After growing up knowing he wanted to change the world, he realized it was easier to write about other people changing it instead. As an expert in science and technology for decades, he’s written everything from reviews of the latest smartphones to deep dives into data centers, cloud computing, security, AI, mixed reality and everything in between.
