What do eating rocks, rat genitals, and Willy Wonka have in common? AI, of course.

It’s been a wild year in tech thanks to the intersection between humans and artificial intelligence. 2024 brought a parade of AI oddities, mishaps, and wacky moments that inspired odd behavior from both machines and man. From AI-generated rat genitals to search engines telling people to eat rocks, this year proved that AI has been having a weird impact on the world.

Why the weirdness? If we had to guess, it may be due to the novelty of it all. Generative AI and applications built on Transformer-based AI models are still so new that people are throwing everything at the wall to see what sticks. People have been struggling to grasp both the implications and potential applications of the new technology. Riding along with the hype, different types of AI that may end up being ill-advised, such as automated military targeting systems, have also been introduced.

It’s worth mentioning that aside from crazy news, we also saw less-weird AI advances in 2024. Claude 3.5 Sonnet, launched in June, held off the competition as a top model for most of the year, while OpenAI’s o1 used runtime compute to expand GPT-4o’s capabilities with simulated reasoning. Advanced Voice Mode and NotebookLM also emerged as novel applications of AI tech, and the year saw the rise of more capable music synthesis models and better AI video generators, including several from China.

For now, let’s get down to the weirdness.

ChatGPT goes crazy

Early in the year, things got off to an exciting start when OpenAI’s ChatGPT experienced a significant technical malfunction that caused the AI model to generate increasingly incoherent responses, prompting users on Reddit to describe the system as “having a stroke” or “going insane.” During the glitch, ChatGPT’s responses would begin normally but then deteriorate into nonsensical text, sometimes mimicking Shakespearean language.

OpenAI later revealed that a bug in how the model processed language caused it to select the wrong words during text generation, leading to nonsense outputs (basically the text version of what we at Ars now call “jabberwockies”). The company fixed the issue within 24 hours, but the incident led to frustrations about the black box nature of commercial AI systems and users’ tendency to anthropomorphize AI behavior when it malfunctions.

The great Wonka incident

A photo of “Willy’s Chocolate Experience” (inset), which did not match AI-generated promises, shown in the background.

Credit: Stuart Sinclair

The collision between AI-generated imagery and consumer expectations fueled human frustrations in February when Scottish families discovered that “Willy’s Chocolate Experience,” an unlicensed Wonka-ripoff event promoted using AI-generated wonderland images, turned out to be little more than a sparse warehouse with a few modest decorations.

Parents who paid £35 per ticket encountered a situation so dire they called the police, with children reportedly crying at the sight of a person in what attendees described as a “terrifying outfit.” The event, created by House of Illuminati in Glasgow, promised fantastical spaces like an “Enchanted Garden” and “Twilight Tunnel” but delivered an underwhelming experience that forced organizers to shut down midway through its first day and issue refunds.

While the show was a bust, it brought us an iconic new meme for job disillusionment in the form of a photo: the green-haired Willy’s Chocolate Experience employee who looked like she’d rather be anywhere else on earth at that moment.

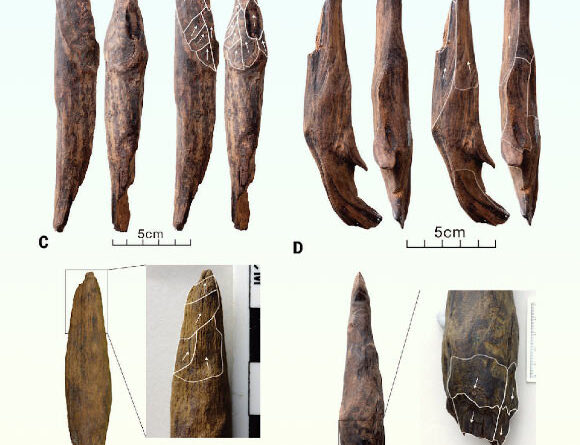

Mutant rat genitals expose peer review flaws

A real lab rat, who is fascinated.

Credit: Getty | Photothek

In February, Ars Technica senior health reporter Beth Mole covered a peer-reviewed paper published in Frontiers in Cell and Developmental Biology that created an uproar in the scientific community when researchers discovered it contained nonsensical AI-generated images, including an anatomically incorrect rat with oversized genitals. The paper, authored by scientists at Xi’an Honghui Hospital in China, openly acknowledged using Midjourney to create figures that contained gibberish text labels like “Stemm cells” and “iollotte sserotgomar.”

The publisher, Frontiers, posted an expression of concern about the article titled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway” and launched an investigation into how the obviously flawed imagery passed through peer review. Scientists across social media platforms expressed dismay at the incident, which mirrored concerns about AI-generated content infiltrating academic publishing.

Chatbot makes false refund promises for Air Canada

If, say, ChatGPT gives you the wrong name for one of the seven dwarves, it’s not such a big deal. But in February, Ars senior policy reporter Ashley Belanger covered a case of costly AI confabulation in the wild. In the course of online text conversations, Air Canada’s customer service chatbot told customers inaccurate refund policy information. The airline faced legal consequences later when a tribunal ruled that it must honor commitments made by the automated system. Tribunal adjudicator Christopher Rivers determined that Air Canada bore responsibility for all information on its website, regardless of whether it came from a static page or an AI interface.

The case set a precedent for how companies deploying AI customer service tools could face legal obligations for automated systems’ actions, particularly when they fail to warn users about potential inaccuracies. Ironically, the airline had reportedly spent more on the initial AI implementation than it would have cost to maintain human workers for simple queries, according to Air Canada executive Steve Crocker.

Will Smith lampoons his digital double

The real Will Smith eating spaghetti, parodying an AI-generated video from 2023.

Credit: Will Smith / Getty Images / Benj Edwards

In March 2023, a terrible AI-generated video of Will Smith’s AI doppelganger eating spaghetti began making the rounds online. The AI-generated version of the actor gobbled down the noodles in an unnatural and disturbing way. Almost a year later, in February 2024, Will Smith himself posted a parody response video to the viral jabberwocky on Instagram, featuring deliberately exaggerated, AI-like pasta consumption, complete with hair-nibbling and finger-slurping antics.

Given the rapid evolution of AI video technology, particularly since OpenAI had just unveiled its Sora video model four days earlier, Smith’s post sparked discussion in his Instagram comments, where some viewers initially struggled to distinguish between the genuine footage and AI generation. It was an early sign of “deep doubt” in action as the tech increasingly blurs the line between synthetic and authentic video content.

Robot dogs learn to hunt people with AI-guided rifles

A still image of a robotic quadruped armed with a remote weapons system, captured from a video provided by Onyx Industries.

Credit: Onyx Industries

At some point in recent history (somewhere around 2022), someone took a look at robotic quadrupeds and thought it would be a great idea to attach weapons to them. A few years later, the US Marine Forces Special Operations Command (MARSOC) began evaluating armed robotic quadrupeds developed by Ghost Robotics. The robot “dogs” integrated Onyx Industries’ SENTRY remote weapon systems, which featured AI-enabled targeting that could detect and track people, drones, and vehicles, though the systems require human operators to authorize any weapons discharge.

The military’s interest in armed robotic dogs followed a broader trend of weaponized quadrupeds entering public awareness. This included viral videos of consumer robots carrying firearms and, later, commercial sales of flame-throwing models. While MARSOC emphasized that weapons were just one potential use case under review, experts noted that the increasing integration of AI into military robotics raised questions about how long humans would remain in control of lethal force decisions.

Microsoft Windows AI is watching

A screenshot of Microsoft’s new “Recall” feature in action.

Credit: Microsoft

In an era where many people already feel like they have no privacy due to tech developments, Microsoft dialed it up to an extreme degree in May. That’s when Microsoft unveiled a controversial Windows 11 feature called “Recall” that continuously captures screenshots of users’ PC activities every few seconds for later AI-powered search and retrieval. The feature, designed for new Copilot+ PCs using Qualcomm’s Snapdragon X Elite chips, promised to help users find past activities, including app usage, meeting content, and web browsing history.

While Microsoft emphasized that Recall would store encrypted snapshots locally and allow users to exclude specific apps or websites, the announcement raised immediate privacy concerns, as Ars senior technology reporter Andrew Cunningham covered. It also came with a technical toll, requiring significant hardware resources, including 256GB of storage space, with 25GB dedicated to storing approximately three months of user activity. After Microsoft pulled the initial test version due to public backlash, Recall later entered public preview in November with reportedly enhanced security measures. But secure spyware is still spyware: Recall, when enabled, still watches nearly everything you do on your computer and keeps a record of it.

Google Search told people to eat rocks

This is fine.

Credit: Getty Images

In May, Ars senior gaming reporter Kyle Orland (who assisted commendably with the AI beat throughout the year) covered Google’s newly launched AI Overview feature. It faced immediate criticism when users discovered that it frequently provided false and potentially dangerous information in its search result summaries. Among its most alarming responses, the system advised that humans could safely consume rocks, incorrectly citing scientific sources about the geological diet of marine organisms. The system’s other errors included recommending nonexistent car maintenance products, suggesting unsafe cooking techniques, and confusing historical figures who shared names.

The problems stemmed from several issues, including the AI treating joke posts as factual sources and misinterpreting context from original web content. But most of all, the system relies on web results as indicators of authority, which we called a flawed design. While Google defended the system, stating that these errors occurred mainly with uncommon queries, a company spokesperson acknowledged they would use these “isolated examples” to refine their systems. But to this day, AI Overview still makes frequent mistakes.

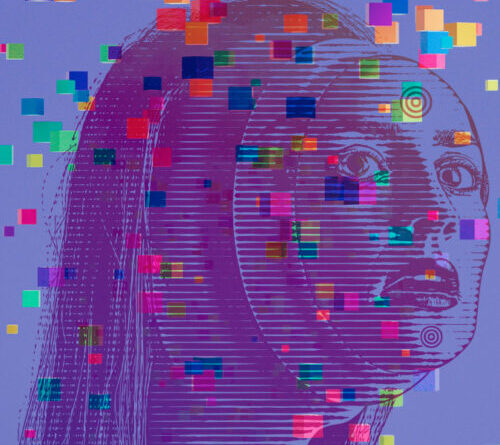

Stable Diffusion generates body horror

An AI-generated image created using Stable Diffusion 3 of a woman lying in the grass.

Credit: HorneyMetalBeing

In June, Stability AI’s release of the image synthesis model Stable Diffusion 3 Medium drew criticism online for its poor handling of human anatomy in AI-generated images. Users across social media platforms shared examples of the model producing what we now like to call jabberwockies: AI generation failures with distorted bodies, misshapen hands, and surreal anatomical errors. Many in the AI image-generation community viewed it as a significant step backward from previous image-synthesis capabilities.

Reddit users attributed these failures to Stability AI’s aggressive filtering of adult content from the training data, which apparently impaired the model’s ability to accurately render human figures. The troubled release coincided with broader organizational challenges at Stability AI, including the March departure of CEO Emad Mostaque, multiple staff layoffs, and the exit of three key engineers who had helped develop the technology. Some of those engineers founded Black Forest Labs in August and released Flux, which has become the latest open-weights AI image model to beat.

ChatGPT Advanced Voice imitates human voice in testing

AI voice-synthesis models are master imitators these days, and they are capable of much more than many people realize. In August, we covered a story where OpenAI’s ChatGPT Advanced Voice Mode feature unexpectedly imitated a user’s voice during the company’s internal testing, revealed by OpenAI after the fact in safety testing documentation. To prevent future instances of an AI assistant suddenly speaking in your own voice (which, let’s be honest, would probably freak people out), the company created an output classifier system to prevent unauthorized voice imitation. OpenAI says that Advanced Voice Mode now catches all meaningful deviations from approved system voices.

Independent AI researcher Simon Willison discussed the implications with Ars Technica, noting that while OpenAI restricted its model’s full voice synthesis capabilities, similar technology would likely emerge from other sources within the year. Meanwhile, the rapid advancement of AI voice replication has caused general concern about its potential misuse, although companies like ElevenLabs have already been offering voice cloning services for some time.

San Francisco’s robotic car horn symphony

A Waymo self-driving car in front of Google’s San Francisco headquarters, San Francisco, California, June 7, 2024.

Credit: Getty Images

In August, San Francisco residents got a noisy taste of robo-dystopia when Waymo’s self-driving cars began creating an unexpected nightly disturbance in the South of Market district. In a parking lot off 2nd Street, the cars congregated autonomously every night during rider lulls at 4 am and began engaging in extended honking matches at each other while attempting to park.

Local resident Christopher Cherry’s initial optimism about the robotic fleet’s presence dissolved as the mechanical chorus grew louder each night, affecting residents in nearby high-rises. The nocturnal tech disruption served as a lesson in the unintended effects of autonomous systems when operated in aggregate.

Larry Ellison dreams of all-seeing AI cameras

In September, Oracle co-founder Larry Ellison painted a bleak vision of ubiquitous AI surveillance during a company financial meeting. The 80-year-old database billionaire described a future where AI would monitor citizens through networks of cameras and drones, asserting that the oversight would ensure lawful behavior from both police and the public.

His surveillance predictions reminded us of parallels to existing systems in China, where authorities already used AI to sort surveillance data on citizens as part of the country’s “sharp eyes” campaign from 2015 to 2020. Ellison’s statement reflected the kind of worst-case tech surveillance state scenario (likely antithetical to any kind of free society) that many sci-fi novels of the 20th century warned us about.

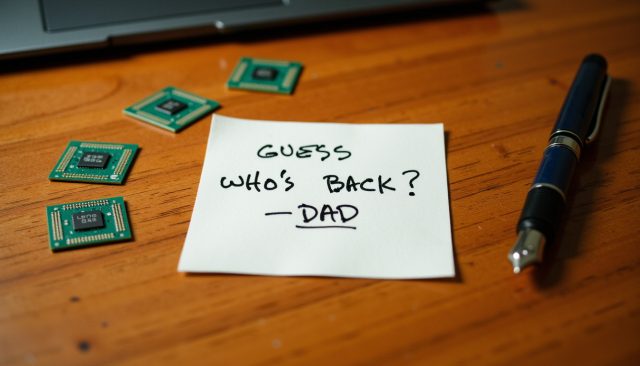

A dead father sends new letters home

An AI-generated image featuring my late father’s handwriting.

Credit: Benj Edwards / Flux

AI has made many of us do weird things in 2024, including this writer. In October, I used an AI synthesis model called Flux to reproduce my late father’s handwriting with striking accuracy. After scanning 30 samples from his engineering notebooks, I trained the model using computing time that cost less than five dollars. The resulting text captured his distinctive uppercase style, which he developed during his career as an electronics engineer.

I enjoyed creating images showing his handwriting in various contexts, from folder labels to skywriting, and made the trained model freely available online for others to use. While I approached it as a tribute to my father (who would have appreciated the technical achievement), many people found the whole experience weird and somewhat unsettling. The things we unhinged Bing Chat-like journalists do to bring awareness to a topic are sometimes unconventional. So I guess it counts for this list!

For 2025? Expect even more AI

Thanks for reading Ars Technica this past year and following along with our team coverage of this rapidly emerging and expanding field. We appreciate your kind words of support. Ars Technica’s 2024 AI words of the year were: vibemarking, deep doubt, and the aforementioned jabberwocky. The old stalwart “confabulation” also made several notable appearances. Tune in again next year when we continue to try to figure out how to concisely describe novel scenarios in emerging technology by labeling them.

Looking back, our prediction for 2024 in AI last year was “buckle up.” It seems fitting, given the weirdness detailed above. Especially the part about the robot dogs with guns. For 2025, AI will likely inspire more chaos ahead, but also potentially get put to serious work as a productivity tool, so this time, our prediction is “buckle down.”

Finally, we’d like to ask: What was the craziest story about AI in 2024 from your perspective? Whether you love AI or hate it, feel free to suggest your own additions to our list in the comments. Happy New Year!

Benj Edwards is Ars Technica’s Senior AI Reporter and founder of the site’s dedicated AI beat in 2022. He’s also a tech historian with nearly 20 years of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.
