(Image credit: Prostock-Studio via Getty Images)

Like it or not, artificial intelligence has become part of daily life. Many devices, including electric razors and toothbrushes, have become “AI-powered,” using machine learning algorithms to track how a person uses the device, how the device is working in real time, and provide feedback. From asking questions to an AI assistant like ChatGPT or Microsoft Copilot to monitoring a daily fitness routine with a smartwatch, many people use an AI system or tool every day.

While AI tools and technologies can make life easier, they also raise important questions about data privacy. These systems often collect large amounts of data, sometimes without people even realizing their data is being collected. The information can then be used to identify personal habits and preferences, and even predict future behaviors by drawing inferences from the aggregated data.

As an assistant professor of cybersecurity at West Virginia University, I study how emerging technologies and various types of AI systems manage personal data and how we can build more secure, privacy-preserving systems for the future.

Generative AI software uses large amounts of training data to create new content such as text or images. Predictive AI uses data to forecast outcomes based on past behavior, such as how likely you are to hit your daily step goal, or what movies you may want to watch. Both types can be used to gather information about you.

Generative AI assistants such as ChatGPT and Google Gemini collect all the information users type into a chat box. Every question, response and prompt that users enter is recorded, stored and analyzed to improve the AI model.

OpenAI’s privacy policy informs users that “we may use content you provide us to improve our Services, for example to train the models that power ChatGPT.” Even though OpenAI allows you to opt out of content use for model training, it still collects and retains your personal data. Although some companies promise that they anonymize this data, meaning they store it without naming the person who provided it, there is always a risk of data being reidentified.
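To make the reidentification risk concrete, here is a minimal Python sketch of a so-called linkage attack. It assumes, purely for illustration, an “anonymized” log that has had names removed but still contains quasi-identifiers such as ZIP code, birth date and sex, plus a separate public dataset that does include names. The records, field names and the `reidentify` function are all hypothetical; real attacks typically use fuzzier matching and many more attributes.

```python
# Hypothetical "anonymized" records: names removed, quasi-identifiers kept.
anonymized_logs = [
    {"zip": "26505", "birth_date": "1990-04-12", "sex": "F", "prompt_topic": "medical symptoms"},
    {"zip": "26506", "birth_date": "1985-09-30", "sex": "M", "prompt_topic": "job search"},
]

# Hypothetical public dataset (for example, a voter roll) that includes names.
public_records = [
    {"name": "Alice Example", "zip": "26505", "birth_date": "1990-04-12", "sex": "F"},
    {"name": "Bob Example", "zip": "26501", "birth_date": "1985-09-30", "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def key(record):
    """Combine the quasi-identifier fields into one lookup key."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def reidentify(anonymized, public):
    """Link anonymized rows to names when the quasi-identifiers match exactly one person."""
    index = {}
    for person in public:
        index.setdefault(key(person), []).append(person["name"])
    matches = []
    for row in anonymized:
        names = index.get(key(row), [])
        if len(names) == 1:  # a unique match means the "anonymous" row is reidentified
            matches.append((names[0], row["prompt_topic"]))
    return matches


result = reidentify(anonymized_logs, public_records)
print(result)
```

No name was ever stored alongside the prompt topic, yet one record is linked back to a person simply because three ordinary attributes happened to be unique.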

Predictive AI

Beyond generative AI assistants, social media platforms like Facebook, Instagram and TikTok continuously gather data on their users to train predictive AI models. Every post, photo, video, like, share and comment, including the amount of time people spend looking at each of these, is collected as data points that are used to build digital data profiles for each person who uses the service.

The profiles can be used to refine the social media platform’s AI recommender systems. They can also be sold to data brokers, who sell a person’s data to other companies to, for instance, help develop targeted advertisements that align with that person’s interests.
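As a rough illustration of how individual data points can add up to a profile, the sketch below aggregates hypothetical engagement events, including passive viewing time, into per-topic interest scores. The event format, the action weights and `build_profile` are assumptions made for this example, not how any particular platform actually scores its users.

```python
from collections import defaultdict

# Hypothetical engagement events: (user, topic, action, seconds spent on the item)
events = [
    ("user42", "hiking", "like", 12.0),
    ("user42", "hiking", "view", 45.0),
    ("user42", "cooking", "view", 3.0),
    ("user42", "running shoes", "share", 20.0),
]

# Hypothetical weights: explicit actions signal more interest than passive viewing.
ACTION_WEIGHT = {"view": 1.0, "like": 2.0, "comment": 3.0, "share": 3.0}


def build_profile(events):
    """Turn raw events into normalized per-user interest scores by topic."""
    raw = defaultdict(lambda: defaultdict(float))
    for user, topic, action, seconds in events:
        # Dwell time counts toward interest even without a like or share.
        raw[user][topic] += ACTION_WEIGHT[action] * seconds
    profiles = {}
    for user, scores in raw.items():
        total = sum(scores.values())
        # Normalize so each user's scores sum to 1.0.
        profiles[user] = {topic: score / total for topic, score in scores.items()}
    return profiles


profile = build_profile(events)["user42"]
top = max(profile, key=profile.get)
print(top, round(profile[top], 2))  # hiking 0.52
```

Even this crude weighting surfaces the user's strongest interest from only four events; a profile like this is the kind of asset that can feed a recommender or be sold for ad targeting.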

Many social media companies also track users across websites and applications by putting cookies and embedded tracking pixels on their computers. Cookies are small files that store information about who you are and what you clicked on while browsing a website.


One of the most common uses of cookies is in digital shopping carts: When you place an item in your cart, leave the website and return later, the item will still be in your cart because the cookie stored that information. Tracking pixels are invisible images or snippets of code embedded in websites that notify companies of your activity when you visit their page. This helps them track your behavior across the internet.
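The sketch below shows both mechanisms in simplified form, using Python's standard `http.cookies` module. `add_to_cart` keeps a shopping cart in a cookie so it survives a return visit, and `pixel_hit` plays the role of a hypothetical third-party tracker that serves an invisible 1x1 GIF and records which page embedded it. The function names, cookie names (`cart`, `tid`) and URLs are invented for illustration, the sketch assumes item IDs contain no commas, and it leaves out the web server itself by modeling each request as a dictionary of headers.

```python
import uuid
from http.cookies import SimpleCookie
from urllib.parse import quote, unquote

# --- Shopping cart stored in a first-party cookie ---

def read_cart(cookie_header: str) -> list[str]:
    """Parse the browser's Cookie header and return the items in the cart."""
    jar = SimpleCookie()
    jar.load(cookie_header)
    if "cart" not in jar or not jar["cart"].value:
        return []
    return unquote(jar["cart"].value).split(",")


def add_to_cart(cookie_header: str, item: str) -> str:
    """Add an item and return the Set-Cookie value the server would send back."""
    items = read_cart(cookie_header) + [item]
    out = SimpleCookie()
    out["cart"] = quote(",".join(items))    # percent-encode so the value is cookie-safe
    out["cart"]["max-age"] = 7 * 24 * 3600  # keep the cart for a week
    out["cart"]["path"] = "/"
    return out["cart"].OutputString()


# --- Tracking pixel served by a hypothetical third party ---

# A 43-byte transparent 1x1 GIF: invisible when embedded with an <img> tag.
PIXEL_GIF = (
    b"GIF89a\x01\x00\x01\x00\x80\x00\x00\x00\x00\x00\xff\xff\xff"
    b"!\xf9\x04\x01\x00\x00\x00\x00,\x00\x00\x00\x00\x01\x00\x01\x00\x00"
    b"\x02\x02D\x01\x00;"
)


def pixel_hit(headers: dict[str, str], log: list[tuple[str, str]]) -> tuple[bytes, str]:
    """Record who loaded the pixel and from which page; return (body, Set-Cookie)."""
    jar = SimpleCookie()
    jar.load(headers.get("Cookie", ""))
    # Reuse the visitor's existing ID, or assign a new random one on first sight.
    visitor = jar["tid"].value if "tid" in jar else uuid.uuid4().hex
    # The Referer header tells the tracker which site embedded the pixel.
    log.append((visitor, headers.get("Referer", "unknown")))
    out = SimpleCookie()
    out["tid"] = visitor
    out["tid"]["max-age"] = 365 * 24 * 3600
    # Cross-site cookies generally need SameSite=None together with Secure.
    out["tid"]["samesite"] = "None"
    out["tid"]["secure"] = True
    return PIXEL_GIF, out["tid"].OutputString()


# --- Simulated browsing session ---

# The cart survives a return visit because the browser sends the cookie back.
first = add_to_cart("", "socks")
second = add_to_cart(first.split(";")[0], "mug")
print(read_cart(second.split(";")[0]))  # ['socks', 'mug']

# The same tracker pixel embedded on two unrelated sites.
log: list[tuple[str, str]] = []
_, tracker_cookie = pixel_hit({"Referer": "https://news.example/article"}, log)
pixel_hit({"Referer": "https://shop.example/shoes",
           "Cookie": tracker_cookie.split(";")[0]}, log)
print(log[0][0] == log[1][0])  # True: one visitor ID links both visits
```

Because the browser sends the same `tid` cookie to the tracker from both pages, the two visits can be tied to one person, which is the cross-site tracking described above. Many browsers now restrict or partition third-party cookies like this one.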

This is why users often see or hear advertisements that are related to their browsing and shopping habits on many of the unrelated websites they browse, and even when they are using different devices, including computers, phones and smart speakers. One study found that some websites can store over 300 tracking cookies on your computer or mobile phone.

Data privacy controls — and limitations

Like generative AI platforms, social media platforms offer privacy settings and opt-outs, but these give people limited control over how their personal data is aggregated and monetized. As media theorist Douglas Rushkoff argued in 2011, if the service is free, you are the product.

Many tools that include AI don’t require a person to take any direct action for the tool to collect data about that person. Smart devices such as home speakers, fitness trackers and watches continually gather information through biometric sensors, voice recognition and location tracking. Smart home speakers continually listen for the command to activate or “wake up” the device. As the device is listening for this word, it picks up all the conversations happening around it, even though it does not seem to be active.

Some companies claim that voice data is only stored when the wake word — what you say to wake up the device — is detected. However, people have raised concerns about accidental recordings, especially because these devices are often connected to cloud services, which allow voice data to be stored, synced and shared across multiple devices such as your phone, smart speaker and tablet.
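A toy model helps show why an “inactive” speaker still hears everything. The Python sketch below assumes a device that keeps a short rolling buffer of recent audio (represented here as text) and uploads that buffer when an on-device detector thinks it heard the wake word. The `WakeWordListener` class, the substring-based detector and the buffer size are hypothetical simplifications; real devices use trained keyword-spotting models, and how much audio they retain or send varies by vendor.

```python
from collections import deque


class WakeWordListener:
    """Toy always-on speaker: every frame is heard and briefly buffered on the
    device, and the buffer is 'uploaded' whenever the detector fires."""

    def __init__(self, wake_word: str = "computer", buffer_frames: int = 2):
        self.wake_word = wake_word
        # Rolling window: the oldest frame is dropped once the buffer is full.
        self.buffer: deque[str] = deque(maxlen=buffer_frames)
        self.uploaded: list[str] = []  # stands in for audio sent to the cloud

    def detect(self, frame: str) -> bool:
        # Hypothetical stand-in for an on-device keyword-spotting model.
        # A naive substring match, like an imperfect model, can fire on
        # similar-sounding speech ("computers") and cause accidental recordings.
        return self.wake_word in frame.lower()

    def hear(self, frame: str) -> None:
        self.buffer.append(frame)  # the device hears everything, active or not
        if self.detect(frame):
            # Upload the buffered context, including speech just before the trigger.
            self.uploaded.extend(self.buffer)
            self.buffer.clear()


speaker = WakeWordListener()
for frame in [
    "good morning",
    "we should discuss the diagnosis privately",
    "the new computers arrive monday",
    "ok, talk later",
]:
    speaker.hear(frame)

print(speaker.uploaded)
```

Here the word “computers” falsely triggers the detector, so part of a private conversation ends up in the upload even though nobody said the wake word on purpose.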

If the company allows, it’s also possible for this data to be accessed by third parties, such as advertisers, data analytics firms or a law enforcement agency with a warrant.

Privacy rollbacks

This potential for third-party access also applies to smartwatches and fitness trackers, which monitor health metrics and user activity patterns. Companies that produce wearable fitness devices are not considered “covered entities” and so are not bound by the Health Insurance Portability and Accountability Act. This means that they are legally allowed to sell health- and location-related data collected from their users.

Concerns about this kind of data, which HIPAA does not protect, arose in 2018, when Strava, a fitness company, released a global heat map of users’ exercise routes. In doing so, it accidentally revealed sensitive military locations across the globe by highlighting the exercise routes of military personnel.

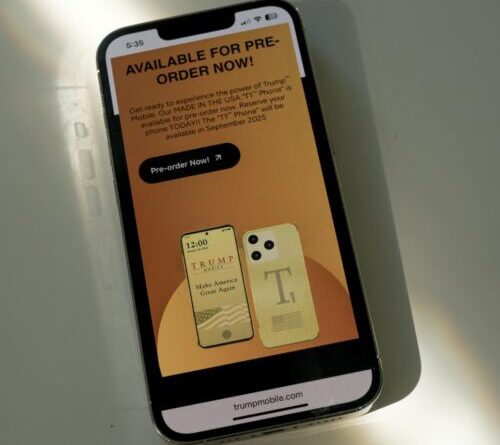

The Trump administration has tapped Palantir, a company that specializes in using AI for data analytics, to collate and analyze data about Americans. Meanwhile, Palantir has announced a partnership with a company that runs self-checkout systems.

Such partnerships can expand corporate and government reach into everyday consumer behavior. This one could be used to create detailed personal profiles on Americans by linking their consumer habits with other personal data. This raises concerns about increased surveillance and loss of anonymity. It could allow citizens to be tracked and analyzed across multiple aspects of their lives without their knowledge or consent.

Some smart device companies are also rolling back privacy protections instead of strengthening them. Amazon recently announced that starting on March 28, 2025, all voice recordings from Amazon Echo devices would be sent to Amazon’s cloud by default, and users would no longer have the option to turn this function off. This is different from previous settings, which allowed users to limit private data collection.

Changes like these raise concerns about how much control consumers have over their own data when using smart devices. Many privacy experts consider cloud storage of voice recordings a form of data collection, especially when used to improve algorithms or build user profiles, which has implications for data privacy laws designed to protect online privacy.

Implications for data privacy

All of this raises major privacy concerns for individuals and governments about how AI tools collect, store, use and transmit data. The biggest concern is transparency. People don’t know what data is being collected, how the data is being used, and who has access to that data.

Companies tend to use complicated privacy policies filled with technical jargon that make it difficult for people to understand the terms of a service that they agree to. People also tend not to read terms of service documents. One study found that people averaged 73 seconds reading a terms of service document that had an average read time of 29 to 32 minutes.
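Put another way, taking the study's 29-to-32-minute estimate at face value, readers spent only about 4% of the time the document actually required:

$$
\frac{73\ \text{s}}{32 \times 60\ \text{s}} = \frac{73}{1920} \approx 0.038
\qquad \text{to} \qquad
\frac{73\ \text{s}}{29 \times 60\ \text{s}} = \frac{73}{1740} \approx 0.042
$$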

Data collected by AI tools may initially reside with a company that you trust, but can easily be sold and given to a company that you don’t trust.

AI tools, the companies in charge of them and the companies that have access to the data they collect can also be subject to cyberattacks and data breaches that can reveal sensitive personal information. These attacks can be carried out by cybercriminals who are in it for the money, or by so-called advanced persistent threats, which are typically nation-state-sponsored attackers who gain access to networks and systems and remain there undetected, collecting information and personal data to eventually cause disruption or harm.

While laws and regulations such as the General Data Protection Regulation in the European Union and the California Consumer Privacy Act aim to safeguard user data, AI development and use have often outpaced the legislative process. The laws are still catching up on AI and data privacy. For now, you should assume any AI-powered device or platform is collecting data on your inputs, behaviors and patterns.

Although AI tools collect people’s data, and the way this accumulation of data affects people’s data privacy is concerning, the tools can also be useful. AI-powered applications can streamline workflows, automate repetitive tasks and provide valuable insights.

It’s vital to approach these tools with awareness and care.

When using a generative AI platform that gives you answers to questions you type in a prompt, don’t include any personally identifiable information, including names, birth dates, Social Security numbers or home addresses. At the workplace, don’t include trade secrets or classified information. In general, don’t put anything into a prompt that you wouldn’t feel comfortable revealing to the public or seeing on a billboard. Remember, once you hit enter on the prompt, you’ve lost control of that information.

Remember that devices that are turned on are always listening, even if they’re asleep. If you use smart home or embedded devices, turn them off when you need to have a private conversation. A device that’s asleep looks inactive, but it is still powered on and listening for a wake word or signal. Unplugging a device or removing its batteries is a good way of making sure the device is truly off.

Be aware of the terms of service and data collection policies of the devices and platforms that you are using. You might be surprised by what you’ve already agreed to.

This article is part of a series on data privacy that explores who collects your data, what and how they collect, who sells and buys your data, what they all do with it, and what you can do about it.

This edited article is republished from The Conversation under a Creative Commons license. Read the original article.


Christopher Ramezan is an Assistant Professor of Cybersecurity and Management Information Systems at West Virginia University, where he also directs the Cyber-Resilience Resource. His research spans cybersecurity, artificial intelligence and remote sensing, with work published in leading journals. A former IT and cybersecurity professional with over 20 industry certifications, he has received several awards for teaching and service, including West Virginia Educator of the Year.
