From ChatGPT drafting emails, to AI systems recommending TV shows and even helping to diagnose disease, the presence of machine intelligence in everyday life is no longer science fiction.

And yet, for all the promises of speed, accuracy and optimisation, there’s a lingering discomfort. Some people love using AI tools.

Others feel anxious, suspicious, even betrayed by them. Why?

Many AI systems operate as black boxes: you type something in, and a decision appears. The logic in between is hidden. Psychologically, this is unnerving. We like to see cause and effect, and we like being able to interrogate decisions. When we can’t, we feel disempowered.

This is one reason for what’s called algorithm aversion, a term popularised by the marketing researcher Berkeley Dietvorst and colleagues, whose research showed that people often prefer flawed human judgement over algorithmic decision making, particularly after witnessing even a single algorithmic error.

We know, rationally, that AI systems don’t have emotions or agendas. But that doesn’t stop us from projecting them on to AI systems. When ChatGPT responds “too politely”, some users find it eerie. When a recommendation engine gets a little too accurate, it feels intrusive. We begin to suspect manipulation, even though the system has no self.

This is a form of anthropomorphism, that is, attributing humanlike intentions to nonhuman systems. Professors of communication Clifford Nass and Byron Reeves, along with others, have demonstrated that we respond socially to machines, even knowing they’re not human.


One curious finding from behavioural science is that we are often more forgiving of human error than machine error. When a human makes a mistake, we understand it. We might even empathise. But when an algorithm makes a mistake, especially if it was pitched as objective or data-driven, we feel betrayed.

This links to research on expectation violation, when our assumptions about how something “should” behave are disrupted. It causes discomfort and loss of trust. We trust machines to be logical and impartial. So when they fail, such as by misclassifying an image, delivering biased outputs or recommending something wildly inappropriate, our reaction is sharper. We expected more.

The irony? Humans make flawed decisions all the time. But at least we can ask them “why?”

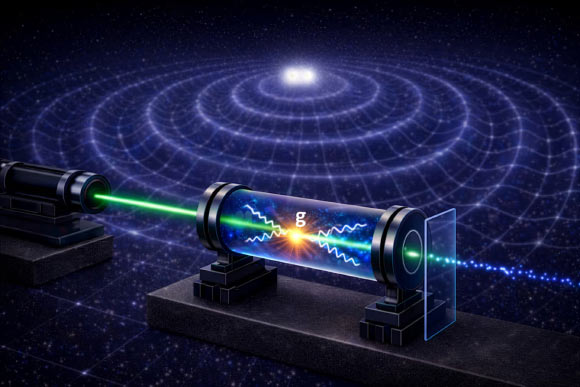

(Image credit: BongkarnGraphic/Shutterstock)

We hate when AI gets it wrong

For some, AI isn’t just unfamiliar, it’s existentially unsettling. Teachers, writers, lawyers and designers are suddenly confronting tools that replicate parts of their work. This isn’t just about automation, it’s about what makes our skills valuable, and what it means to be human.

This can activate a form of identity threat, a concept explored by social psychologist Claude Steele and others. It describes the fear that one’s expertise or uniqueness is being diminished. The result? Resistance, defensiveness or outright dismissal of the technology. Distrust, in this case, is not a bug; it’s a psychological defence mechanism.

Craving emotional cues

Human trust is built on more than logic. We read tone, facial expressions, hesitation and eye contact. AI has none of these. It might be fluent, even charming. But it doesn’t reassure us the way another person can.

This is similar to the discomfort of the uncanny valley, a term coined by Japanese roboticist Masahiro Mori to describe the eerie feeling when something is almost human, but not quite. It looks or sounds right, but something feels off. That emotional absence can be interpreted as coldness, or even deceit.

In a world full of deepfakes and algorithmic decisions, that missing emotional resonance becomes a problem. Not because the AI is doing anything wrong, but because we don’t know how to feel about it.

It’s important to say: not all suspicion of AI is irrational. Algorithms have been shown to reflect and reinforce bias, especially in areas like recruitment, policing and credit scoring. If you’ve been harmed or disadvantaged by data systems before, you’re not being paranoid, you’re being cautious.

This links to a broader psychological idea: learned distrust. When institutions or systems repeatedly fail certain groups, scepticism becomes not only reasonable, but protective.

Telling people to “trust the system” rarely works. Trust must be earned. That means designing AI tools that are transparent, interrogable and accountable. It means giving users agency, not just convenience. Psychologically, we trust what we understand, what we can question and what treats us with respect.

If we want AI to be accepted, it needs to feel less like a black box, and more like a conversation we’re invited to join.

This edited article is republished from The Conversation under a Creative Commons license. Read the original article.

Dr Paul Michael Jones is Associate Dean for Education and Student Experience at Aston Business School, where he teaches psychology and personnel management. His work explores power, identity and behaviour in professional life.

