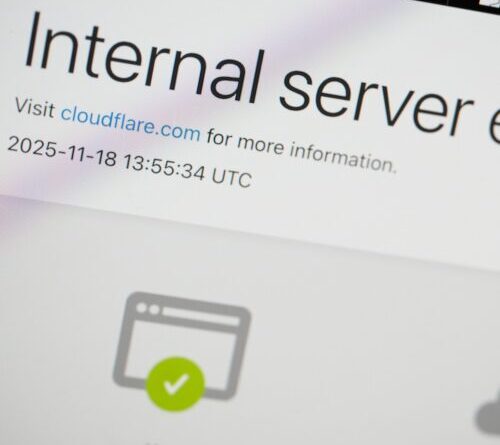

Cloudflare’s proxy service has limits to prevent excessive memory consumption, with the bot management system having “a limit on the number of machine learning features that can be used at runtime.” This limit is 200, well above the actual number of features in use.

“When the bad file with more than 200 features was propagated to our servers, this limit was hit, resulting in the system panicking” and outputting errors, Prince wrote.
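To make the failure mode concrete, here is a minimal Python sketch of a loader with a hard cap on features. Only the 200 figure comes from the postmortem; the names `FEATURE_LIMIT`, `FeatureLimitExceeded`, and `load_features` are hypothetical, and this is not Cloudflare’s code. Exceeding the cap raises an error that nothing is expected to catch, the rough analogue of the panic Prince describes.

```python
FEATURE_LIMIT = 200  # the cap cited by Cloudflare; everything else is hypothetical


class FeatureLimitExceeded(RuntimeError):
    """Raised when a feature file lists more features than the fixed buffer holds."""


def load_features(feature_names):
    # A memory-bounded service might size its buffer once, up front.
    if len(feature_names) > FEATURE_LIMIT:
        # No graceful path here: if no caller handles this,
        # the request-handling process goes down with it.
        raise FeatureLimitExceeded(
            f"{len(feature_names)} features exceeds the limit of {FEATURE_LIMIT}"
        )
    buffer = [None] * FEATURE_LIMIT
    n = len(feature_names)
    buffer[:n] = feature_names  # copy names into the preallocated slots
    return buffer[:n]
```

Calling `load_features` with 60 names succeeds; calling it with 240 raises `FeatureLimitExceeded`. The alternative is to treat an oversized file as a recoverable error and keep running on the previous one.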

Worst Cloudflare outage since 2019

The number of 5xx error HTTP status codes served by the Cloudflare network is normally “very low” but soared after the bad file spread across the network. “The spike, and subsequent fluctuations, show our system failing due to loading the incorrect feature file,” Prince wrote. “What’s notable is that our system would then recover for a period. This was very unusual behavior for an internal error.”

This unusual behavior was explained by the fact “that the file was being generated every five minutes by a query running on a ClickHouse database cluster, which was being gradually updated to improve permissions management,” Prince wrote. “Bad data was only generated if the query ran on a part of the cluster which had been updated. As a result, every five minutes there was a chance of either a good or a bad set of configuration files being generated and rapidly propagated across the network.”
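The good/bad flapping can be reproduced with a toy simulation. This sketch assumes, purely for illustration, that each five-minute run lands on a uniformly random node and that nodes are upgraded in index order; `simulate_cycles` and its parameters are hypothetical, not a model of Cloudflare’s actual cluster.

```python
import random


def simulate_cycles(num_nodes, upgraded_per_cycle, seed=0):
    """Return the file ("good" or "bad") produced in each five-minute cycle.

    upgraded_per_cycle[t] is how many nodes (indices 0..k-1) have been
    upgraded at cycle t. Assumption: only upgraded nodes emit bad data.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    files = []
    for upgraded in upgraded_per_cycle:
        node = rng.randrange(num_nodes)  # the query lands on an arbitrary node
        files.append("bad" if node < upgraded else "good")
    return files


# A rollout that gradually upgrades a 10-node cluster.
print(simulate_cycles(10, [0, 2, 4, 6, 8, 10, 10, 10]))
```

While the rollout is partial, each cycle can yield either file, which is why the network repeatedly failed and recovered. Once every node is upgraded, every cycle yields the bad file and the system settles into the failing state.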

This fluctuation initially “led us to believe this might be caused by an attack. Eventually, every ClickHouse node was generating the bad configuration file and the fluctuation stabilized in the failing state,” he wrote.

Prince said that Cloudflare “solved the problem by stopping the generation and propagation of the bad feature file and manually inserting a known good file into the feature file distribution queue,” and then “forcing a restart of our core proxy.” The team then worked on “restarting remaining services that had entered a bad state” until the 5xx error code volume returned to normal later in the day.

Prince said the outage was Cloudflare’s worst since 2019 and that the company is taking steps to protect against similar failures in the future. Cloudflare will work on “hardening ingestion of Cloudflare-generated configuration files in the same way we would for user-generated input; enabling more global kill switches for features; eliminating the ability for core dumps or other error reports to overwhelm system resources; [and] reviewing failure modes for error conditions across all core proxy modules,” according to Prince.
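The first two remediation items can be illustrated together. This is a minimal sketch, not Cloudflare’s design: a hypothetical `FeatureConfigLoader` validates each internally generated file as if it were untrusted user input, keeps serving the last known good file when validation fails, and exposes an `enabled` flag standing in for a global kill switch. The specific checks in `validate` are illustrative assumptions.

```python
from dataclasses import dataclass

FEATURE_LIMIT = 200  # the cap cited by Cloudflare; the checks below are hypothetical


def validate(candidate):
    """Return a list of problems; an empty list means the file is acceptable."""
    problems = []
    if len(candidate) > FEATURE_LIMIT:
        problems.append("too many features")
    if len(set(candidate)) != len(candidate):
        problems.append("duplicate feature names")
    if any(not name for name in candidate):
        problems.append("empty feature name")
    return problems


@dataclass
class FeatureConfigLoader:
    last_known_good: list
    rejected: int = 0
    enabled: bool = True  # stand-in for a global kill switch on the feature

    def ingest(self, candidate):
        if not self.enabled:
            return []  # feature switched off: serve traffic without it
        if validate(candidate):
            self.rejected += 1  # in a real system: alert, don't crash
            return self.last_known_good
        self.last_known_good = candidate
        return candidate
```

A bad file is counted and ignored rather than loaded, so traffic keeps flowing on the previous configuration while operators investigate; the kill switch lets them disable the feature outright if even the fallback is suspect.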

While Prince can’t promise that Cloudflare will never have another outage of the same scale, he said that previous outages have “always led to us building new, more resilient systems.”
