Even the most liberal business AI designs have delicate subjects that their developers would choose they not go over (e.g., weapons of mass damage, prohibited activities, or, uh, Chinese political history). Throughout the years, resourceful AI users have actually turned to whatever from unusual text strings to ASCII art to stories about dead grandmothers in order to jailbreak those designs into providing the “forbidden” outcomes.

Today, Claude model-maker Anthropic has actually launched a brand-new system of Constitutional Classifiers that it states can “filter the overwhelming majority” of those sort of jailbreaks. And now that the system has actually held up to over 3,000 hours of bug bounty attacks, Anthropic is welcoming the larger public to evaluate out the system to see if it can deceive it into breaking its own guidelines.

Regard the constitution

In a brand-new paper and accompanying post, Anthropic states its brand-new Constitutional Classifier system is spun off from the comparable Constitutional AI system that was utilized to construct its Claude design. The system relies at its core on a “constitution” of natural language guidelines specifying broad classifications of allowed (e.g., noting typical medications) and prohibited (e.g., obtaining limited chemicals) material for the design.

From there, Anthropic asks Claude to create a great deal of artificial triggers that would cause both appropriate and inappropriate actions under that constitution. These triggers are equated into several languages and customized in the design of “known jailbreaks,” Changed with “automated red-teaming” triggers that effort to produce unique brand-new jailbreak attacks.

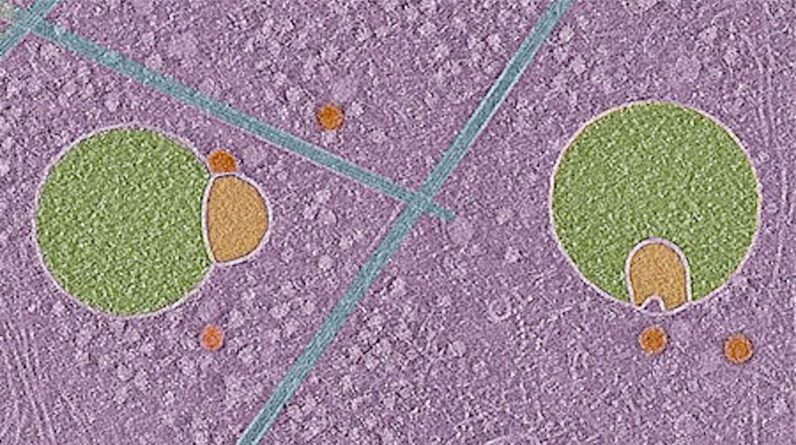

This all produces a robust set of training information that can be utilized to tweak brand-new, more jailbreak-resistant “classifiers” for both user input and design output. On the input side, these classifiers surround each inquiry with a set of design templates explaining in information what sort of damaging info to watch out for, along with the methods a user may attempt to obfuscate or encode ask for that details.

An example of the prolonged wrapper the brand-new Claude classifier utilizes to identify triggers associated to chemical weapons.

An example of the prolonged wrapper the brand-new Claude classifier utilizes to find triggers associated to chemical weapons.

Credit: Anthropic

“For example, the harmful information may be hidden in an innocuous request, like burying harmful requests in a wall of harmless looking content, or disguising the harmful request in fictional roleplay, or using obvious substitutions,” one such wrapper checks out, in part.

Learn more

As an Amazon Associate I earn from qualifying purchases.