On Monday, sheet music platform Soundslice said it had developed a new feature after discovering that ChatGPT was incorrectly telling users the service could import ASCII tablature, a text-based guitar notation format the company had never supported. The incident reportedly marks what may be the first case of a business building functionality in direct response to an AI model's confabulation.

Typically, Soundslice digitizes sheet music from photos or PDFs and syncs the notation with audio or video recordings, allowing musicians to see the music scroll by as they hear it played. The platform also includes tools for slowing down playback and practicing difficult passages.

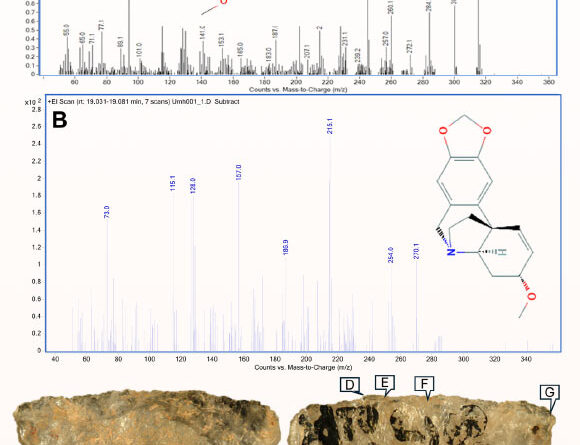

Adrian Holovaty, co-founder of Soundslice, wrote in a blog post that the recent feature development process began as a complete mystery. A few months ago, Holovaty started noticing unusual activity in the company's error logs. Instead of typical sheet music uploads, users were submitting screenshots of ChatGPT conversations containing ASCII tablature: simple text representations of guitar music that look like strings (the instrument's, drawn as lines of text) with numbers indicating fret positions.

“Our scanning system wasn’t intended to support this style of notation,” wrote Holovaty in the post. “Why, then, were we being bombarded with so many ASCII tab ChatGPT screenshots? I was mystified for weeks—until I messed around with ChatGPT myself.”

When Holovaty tested ChatGPT, he discovered the source of the confusion: the AI model was instructing users to create Soundslice accounts and use the platform to import ASCII tabs for audio playback, a feature that didn't exist. “We’ve never supported ASCII tab; ChatGPT was outright lying to people,” Holovaty wrote, “and making us look bad in the process, setting false expectations about our service.”

A screenshot of Soundslice's new ASCII tab importer documentation, a feature hallucinated by ChatGPT and later made real.

Credit: https://www.soundslice.com/help/en/creating/importing/331/ascii-tab/

When AI models like ChatGPT generate false information with apparent confidence, AI researchers call it a “hallucination” or “confabulation.” Confabulation has plagued AI models since ChatGPT's public release in November 2022, when people began mistakenly using the chatbot as a replacement for a search engine.
