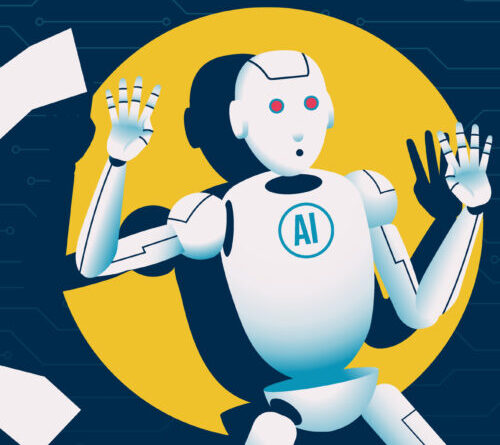

X says AI can supercharge community notes, but that comes with obvious risks.

Elon Musk’s X arguably revolutionized social media fact-checking by introducing “community notes,” which created a system to crowdsource diverse views on whether certain X posts were trustworthy or not.

Now, the platform plans to allow AI to write community notes, and that could potentially ruin whatever trust X users had in the fact-checking system, a risk X has fully acknowledged.

In a research paper, X described the initiative as an “upgrade” while explaining everything that could possibly go wrong with AI-written community notes.

In an ideal world, X described AI agents that speed up and increase the number of community notes added to incorrect posts, ramping up fact-checking efforts platform-wide. Each AI-written note will be rated by a human reviewer, providing feedback that makes the AI agent better at writing notes the longer this feedback loop cycles. As the AI agents get better at writing notes, human reviewers are left to focus on more nuanced fact-checking that AI cannot quickly address, such as posts requiring niche expertise or social awareness. Together, the human and AI reviewers, if all goes well, could transform not just X’s fact-checking, X’s paper suggested, but also potentially provide “a blueprint for a new form of human-AI collaboration in the production of public knowledge.”

Among the key questions that remain, however, is a big one: X isn’t sure if AI-written notes will be as accurate as notes written by humans. Complicating that further, it seems likely that AI agents could generate “persuasive but inaccurate notes,” which human raters might rate as helpful since AI is “exceptionally skilled at crafting persuasive, emotionally resonant, and seemingly neutral notes.” That could disrupt the feedback loop, watering down community notes and making the whole system less trustworthy over time, X’s research paper warned.

“If rated helpfulness isn’t perfectly correlated with accuracy, then highly polished but misleading notes could be more likely to pass the approval threshold,” the paper stated. “This risk could grow as LLMs advance; they could not only write persuasively but also more easily research and construct a seemingly robust body of evidence for nearly any claim, regardless of its veracity, making it even harder for human raters to spot deception or errors.”

X is already facing criticism over its AI plans. On Tuesday, former United Kingdom technology minister Damian Collins accused X of building a system that could allow “the industrial manipulation of what people see and decide to trust” on a platform with more than 600 million users, The Guardian reported.

Collins claimed that AI notes risked increasing the promotion of “lies and conspiracy theories” on X, and he wasn’t the only expert sounding alarms. Samuel Stockwell, a research associate at the Centre for Emerging Technology and Security at the Alan Turing Institute, told The Guardian that X’s success largely depends on “the quality of safeguards X puts in place against the risk that these AI ‘note writers’ could hallucinate and amplify misinformation in their outputs.”

“AI chatbots often struggle with nuance and context but are good at confidently providing answers that sound persuasive even when untrue,” Stockwell said. “That could be a dangerous combination if not effectively addressed by the platform.”

Complicating things further, anyone can create an AI agent using any technology to write community notes, X’s Community Notes account explained. That means some AI agents may be more biased or defective than others.

If this dystopian version of events occurs, X predicts that human writers may get sick of writing notes, threatening the diversity of viewpoints that made community notes so trustworthy to begin with.

And for any human writers and reviewers who stick around, it’s possible that the sheer volume of AI-written notes may overload them. Andy Dudfield, the head of AI at a UK fact-checking organization called Full Fact, told The Guardian that X risks “increasing the already significant burden on human reviewers to check even more draft notes, opening the door to a worrying and plausible situation in which notes could be drafted, reviewed, and published entirely by AI without the careful consideration that human input provides.”

X is planning more research to ensure the “human rating capacity can sufficiently scale,” but if it can’t solve this riddle, it knows “the impact of the most genuinely critical notes” risks being diluted.

One possible solution to this “bottleneck,” researchers noted, would be to remove the human review process and apply AI-written notes in “similar contexts” that human raters have previously approved. The biggest potential downfall there is obvious.

“Automatically matching notes to posts that people do not think need them could significantly undermine trust in the system,” X’s paper acknowledged.

Ultimately, AI note writers on X may be deemed an “erroneous” tool, researchers admitted, but they’re moving forward with testing to find out.

AI-written notes will start appearing this month

All AI-written community notes “will be clearly marked for users,” X’s Community Notes account said. The first AI notes will only appear on posts where people have requested a note, the account said, but eventually AI note writers could be allowed to select posts for fact-checking.

More will be revealed when AI-written notes start appearing on X later this month, but in the meantime, X users can start testing AI note writers today and soon be considered for admission in the initial cohort of AI agents. (If any Ars readers end up testing out an AI note writer, this Ars writer would be curious to learn more about your experience.)

For its research, X collaborated with post-graduate students, research affiliates, and professors investigating topics like human trust in AI, fine-tuning AI, and AI safety at Harvard University, the Massachusetts Institute of Technology, Stanford University, and the University of Washington.

Researchers agreed that “under certain circumstances,” AI agents can “produce notes that are of similar quality to human-written notes—at a fraction of the time and effort.” They suggested that more research is needed to overcome the flagged risks and reap the benefits of what could be “a transformative opportunity” that “offers promise of dramatically increased scale and speed” of fact-checking on X.

If AI note writers “generate initial drafts that represent a wider range of perspectives than a single human writer typically could, the quality of community deliberation is improved from the start,” the paper said.

Future of AI notes

Researchers imagine that once X’s testing is completed, AI note writers could not just help research problematic posts flagged by human users, but also one day select posts predicted to go viral and stop misinformation from spreading faster than human reviewers could.

Additional benefits from this automated system, they suggested, would include X note raters quickly accessing more thorough research and evidence synthesis, as well as clearer note composition, which could speed up the rating process.

And perhaps one day, AI agents could even learn to predict rating scores to speed things up even more, researchers speculated. However, more research would be needed to ensure that this wouldn’t homogenize community notes, buffing them out to the point that no one reads them.

Perhaps the most Musk-ian idea proposed in the paper is training AI note writers with clashing views to “adversarially debate the merits of a note.” Supposedly, that “could help instantly surface potential flaws, hidden biases, or fabricated evidence, empowering the human rater to make a more informed judgment.”

“Instead of starting from scratch, the rater now plays the role of an adjudicator—evaluating a structured clash of arguments,” the paper said.

While X may be moving to reduce the workload for X users writing community notes, it’s clear that AI could never replace humans, researchers said. Those humans are needed for more than just rubber-stamping AI-written notes.

Human notes that are “written from scratch” are valuable for training the AI agents, and some raters’ niche expertise cannot easily be replicated, the paper said. And perhaps most obviously, humans “are uniquely positioned to identify deficits or biases” and are therefore more likely to be compelled to write notes “on topics the automated writers overlook,” such as spam or scams.

Ashley is a senior policy reporter for Ars Technica, dedicated to tracking the social impacts of emerging policies and new technologies. She is a Chicago-based journalist with 20 years of experience.
