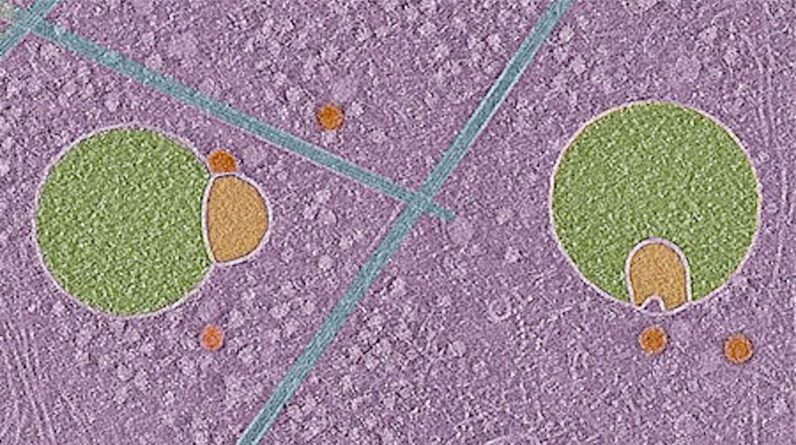

Meta built the Llama 4 models using a mixture-of-experts (MoE) architecture, which is one way around the limitations of running huge AI models. Think of MoE like having a large team of specialized workers; instead of everyone working on every task, only the relevant specialists activate for a specific job.
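Here is a minimal sketch of the routing idea, assuming a simplified top-1 router. A score is computed for each expert, and only the highest-scoring expert runs on the input. The toy experts, router weights, and function names are hypothetical. Production MoE layers such as Llama 4's add learned weights, batching, and, in some designs, a shared expert that every token also passes through.

```python
from typing import Callable, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def route_top1(token: Vector, router_weights: List[Vector]) -> int:
    """Return the index of the expert with the highest router score."""
    scores = [dot(token, w) for w in router_weights]
    return max(range(len(scores)), key=scores.__getitem__)


def moe_layer(token: Vector, router_weights: List[Vector],
              experts: List[Callable[[Vector], Vector]]) -> Vector:
    """Run only the selected expert; the other experts are never called."""
    chosen = route_top1(token, router_weights)
    return experts[chosen](token)


# Hypothetical toy setup: 4 "experts", each of which just scales its input.
experts = [lambda v, k=k: [x * (k + 1) for x in v] for k in range(4)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

print(route_top1([0.2, 0.9], router_weights))          # 1
print(moe_layer([0.2, 0.9], router_weights, experts))  # [0.4, 1.8]
```

Only one of the four expert functions executes per input, which is the property that lets MoE models keep per-token compute low even when the total parameter count is large.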

Llama 4 Maverick, for example, has 400 billion parameters in total, but only 17 billion of them are active at once, across one of 128 experts. Likewise, Scout has 109 billion total parameters, with only 17 billion active at once across one of 16 experts. This design can reduce the computation needed to run the model, since only a smaller portion of the neural network's weights is active at any one time.
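The arithmetic behind that claim can be sketched using only the parameter counts quoted above. The function name is hypothetical. Note that the active fraction mainly describes per-token compute: in a typical MoE deployment, all experts still have to be loaded into memory, because any given token may be routed to any of them.

```python
def active_fraction(active_params: float, total_params: float) -> float:
    """Share of a model's weights used for any single token."""
    return active_params / total_params


# (total parameters, active parameters) in billions, as quoted in the article.
models = {
    "Llama 4 Maverick": (400, 17),
    "Llama 4 Scout": (109, 17),
}

for name, (total, active) in models.items():
    print(f"{name}: {active_fraction(active, total):.2%} of weights active per token")
```

This works out to roughly 4.25% for Maverick and about 15.6% for Scout.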

Llama’s reality check arrives quickly

Current AI models have a relatively limited short-term memory. In AI, a context window plays roughly that role, determining how much information a model can process at once. AI language models like Llama typically process that memory as chunks of data called tokens, which can be whole words or fragments of longer words. Large context windows allow AI models to process longer documents, larger code bases, and longer conversations.
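Here is a toy illustration of a fixed context window. It assumes whitespace splitting as a stand-in for a real subword tokenizer, so its token counts will not match Llama's. When the input is longer than the window, one simple strategy is to keep only the most recent tokens. The helper names are hypothetical.

```python
from typing import List


def toy_tokenize(text: str) -> List[str]:
    # Assumption: whitespace splitting stands in for a real subword tokenizer.
    return text.split()


def fit_to_context(tokens: List[str], context_window: int) -> List[str]:
    """Keep only the most recent tokens that fit in the window."""
    if context_window <= 0:
        return []  # tokens[-0:] would return everything, so guard explicitly
    return tokens[-context_window:]


conversation = "user: hello model: hi there user: summarize our chat"
tokens = toy_tokenize(conversation)
print(len(tokens))                # 9
print(fit_to_context(tokens, 4))  # ['user:', 'summarize', 'our', 'chat']
```

Anything outside the window is simply invisible to the model, which is why a larger window lets it work with longer documents and conversations.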

Despite Meta’s promotion of Llama 4 Scout’s 10 million token context window, developers have so far found that using even a fraction of that amount is challenging due to memory limitations. Willison reported on his blog that third-party services providing access, like Groq and Fireworks, limited Scout’s context to just 128,000 tokens. Another provider, Together AI, offered 328,000 tokens.
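The sketch below checks whether a prompt fits each provider's cap, using the limits Willison reported at the time, which may have changed since. The dictionary and function names are hypothetical. The optional `reserved_for_output` parameter reflects the assumption that the window must also hold the model's reply.

```python
from typing import Dict, List

# Context caps for Llama 4 Scout as reported at the time of writing.
PROVIDER_CONTEXT_LIMITS: Dict[str, int] = {
    "Meta (advertised)": 10_000_000,
    "Groq": 128_000,
    "Fireworks": 128_000,
    "Together AI": 328_000,
}


def providers_that_fit(prompt_tokens: int, limits: Dict[str, int],
                       reserved_for_output: int = 0) -> List[str]:
    """Return providers whose cap can hold the prompt plus a reply budget."""
    needed = prompt_tokens + reserved_for_output
    return sorted(name for name, cap in limits.items() if needed <= cap)


print(providers_that_fit(20_000, PROVIDER_CONTEXT_LIMITS))
# ['Fireworks', 'Groq', 'Meta (advertised)', 'Together AI']
print(providers_that_fit(200_000, PROVIDER_CONTEXT_LIMITS))
# ['Meta (advertised)', 'Together AI']
```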

Evidence suggests that accessing larger contexts requires immense resources. Willison pointed to Meta’s own example notebook (“build_with_llama_4”), which states that running a 1.4 million token context requires eight high-end Nvidia H100 GPUs.
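A rough back-of-the-envelope estimate helps show why long contexts are expensive. During generation, a transformer typically caches one key vector and one value vector per layer and per KV head for every token in the context. The configuration below (48 layers, 8 KV heads, head dimension 128, 2-byte values) is an assumption for illustration, not a confirmed description of Scout. The formula also ignores architecture-specific savings (for example, layers that attend only to a local window) and runtime overhead.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Estimate the memory held by the attention key/value cache.

    The factor 2 accounts for storing both a key and a value vector
    per KV head, per layer, for every token in the context.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


# Hypothetical configuration, assumed for illustration only.
cfg = dict(layers=48, kv_heads=8, head_dim=128, bytes_per_value=2)

for n in (128_000, 1_400_000):
    gb = kv_cache_bytes(n, **cfg) / 1e9
    print(f"{n:>9,} tokens -> ~{gb:,.0f} GB of KV cache")
```

Under those assumptions, 1.4 million tokens would need on the order of 275 GB for the cache alone, before counting the model weights. For scale, eight 80 GB H100s provide 640 GB in total.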

Willison documented his own testing difficulties. When he asked Llama 4 Scout, via the OpenRouter service, to summarize a long online discussion (around 20,000 tokens), the result wasn’t useful. He described it as “complete junk output,” which degenerated into repetitive loops.
