Harry Potter and the Copyright Lawsuit

The research could have big implications for generative AI copyright lawsuits.


In recent years, numerous plaintiffs, including publishers of books, newspapers, computer code, and photographs, have sued AI companies for training models using copyrighted material. A key question in all of these lawsuits has been how easily AI models produce verbatim excerpts from the plaintiffs’ copyrighted content.

In its December 2023 lawsuit against OpenAI, The New York Times Company produced numerous examples where GPT-4 exactly reproduced significant passages from Times stories. In its response, OpenAI described this as a “fringe behavior” and a “problem that researchers at OpenAI and elsewhere work hard to address.”

Is it actually a fringe behavior? And have leading AI companies addressed it? New research, focusing on books rather than newspaper articles and on different companies, provides surprising insights into this question. Some of the findings should bolster plaintiffs’ arguments, while others may be more helpful to defendants.

The paper was published last month by a team of computer scientists and legal scholars from Stanford, Cornell, and West Virginia University. They studied whether five popular open-weight models (three from Meta and one each from Microsoft and EleutherAI) were able to reproduce text from Books3, a collection of books that is widely used to train LLMs. Many of the books are still under copyright.

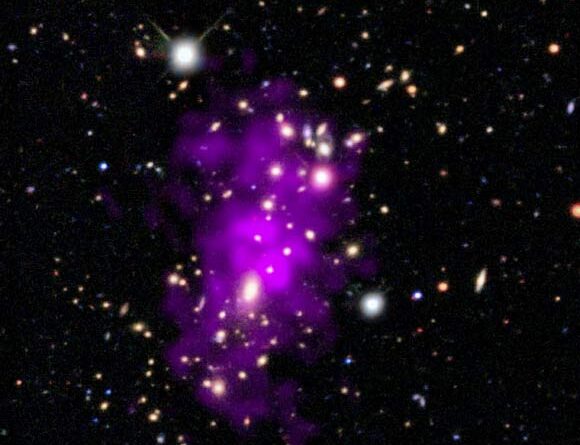

This chart illustrates their most surprising finding:

The chart shows how easy it is to get a model to generate 50-token excerpts from various parts of Harry Potter and the Sorcerer’s Stone. The darker a line is, the easier it is to reproduce that portion of the book.

Each row represents a different model. The three bottom rows are Llama models from Meta. And as you can see, Llama 3.1 70B, a mid-sized model Meta released in July 2024, is far more likely to reproduce Harry Potter text than any of the other four models.

Specifically, the paper estimates that Llama 3.1 70B has memorized 42 percent of the first Harry Potter book well enough to reproduce 50-token excerpts at least half the time. (I’ll unpack how this was measured below.)

Interestingly, Llama 1 65B, a similar-sized model released in February 2023, had memorized only 4.4 percent of Harry Potter and the Sorcerer’s Stone. This suggests that despite the potential legal liability, Meta did not do much to prevent memorization as it trained Llama 3. At least for this book, the problem got much worse between Llama 1 and Llama 3.

Harry Potter and the Sorcerer’s Stone was one of dozens of books tested by the researchers. They found that Llama 3.1 70B was far more likely to reproduce popular books, such as The Hobbit and George Orwell’s 1984, than obscure ones. And for most books, Llama 3.1 70B memorized more than any of the other models.

“There are really striking differences among models in terms of how much verbatim text they have memorized,” said James Grimmelmann, a Cornell law professor who has collaborated with several of the paper’s authors.

The results surprised the study’s authors, including Mark Lemley, a law professor at Stanford. (Lemley used to be part of Meta’s legal team, but in January, he dropped them as a client after Facebook adopted more Trump-friendly moderation policies.)

“We’d expected to see some kind of low level of replicability on the order of 1 or 2 percent,” Lemley told me. “The first thing that surprised me is how much variation there is.”

These results give everyone in the AI copyright debate something to latch onto. For AI industry critics, the big takeaway is that, at least for some models and some books, memorization is not a fringe phenomenon.

On the other hand, the study only found significant memorization of a few popular books. For example, the researchers found that Llama 3.1 70B only memorized 0.13 percent of Sandman Slim, a 2009 novel by author Richard Kadrey. That’s a tiny fraction of the 42 percent figure for Harry Potter.

This could be a headache for law firms that have filed class-action lawsuits against AI companies. Kadrey is the lead plaintiff in a class-action lawsuit against Meta. To certify a class of plaintiffs, a court must find that the plaintiffs are in largely similar legal and factual situations.

Divergent results like these could cast doubt on whether it makes sense to lump J.K. Rowling, Kadrey, and thousands of other authors together in a single mass lawsuit. And that could work in Meta’s favor, since most authors lack the resources to file individual lawsuits.

The broader lesson of this study is that the details will matter in these copyright cases. Too often, online discussions have treated “do generative models copy their training data or merely learn from it?” as a theoretical or even philosophical question. But it’s a question that can be tested empirically, and the answer might differ across models and across copyrighted works.

It’s common to talk about LLMs predicting the next token. But under the hood, what the model actually does is generate a probability distribution over all possibilities for the next token. For example, if you prompt an LLM with the phrase “Peanut butter and,” it will respond with a probability distribution that might look like this made-up example:

- P(“jelly”) = 70 percent

- P(“sugar”) = 9 percent

- P(“peanut”) = 6 percent

- P(“chocolate”) = 4 percent

- P(“cream”) = 3 percent

And so forth.

After the model generates a list of probabilities like this, the system will select one of these options at random, weighted by their probabilities. So 70 percent of the time the system will generate “Peanut butter and jelly.” Nine percent of the time, we’ll get “Peanut butter and sugar.” Six percent of the time, it will be “Peanut butter and peanut.” You get the idea.
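The weighted random choice described above can be sketched in a few lines of Python. This is only an illustration built on the made-up numbers from the list: the `<other>` bucket, the function names, and the fixed seed are assumptions added so the probabilities sum to 1 and the run is repeatable. It is not how any particular LLM serving system is implemented.

```python
import random
from collections import Counter

# Made-up next-token probabilities from the example above. "<other>" is a
# hypothetical bucket standing in for every token not listed, so that the
# probabilities sum to 1.
NEXT_TOKEN_PROBS = {
    "jelly": 0.70,
    "sugar": 0.09,
    "peanut": 0.06,
    "chocolate": 0.04,
    "cream": 0.03,
    "<other>": 0.08,
}


def sample_next_token(probs, rng):
    """Pick one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def sample_frequencies(probs, n, seed=0):
    """Draw n tokens and return the observed frequency of each token."""
    rng = random.Random(seed)
    counts = Counter(sample_next_token(probs, rng) for _ in range(n))
    return {t: counts[t] / n for t in probs}


if __name__ == "__main__":
    for token, freq in sample_frequencies(NEXT_TOKEN_PROBS, 10_000).items():
        print(f"{token!r}: {freq:.3f}")
```

With enough samples, the observed frequencies should settle close to the listed probabilities, which is the sense in which the output is “weighted” by them.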

The study’s authors didn’t have to generate multiple outputs to estimate the likelihood of a particular response. Instead, they could calculate probabilities for each token and then multiply them together.

Suppose someone wants to estimate the probability that a model will respond to “My favorite sandwich is” with “peanut butter and jelly.” Here’s how to do that:

- Prompt the model with “My favorite sandwich is,” and look up the probability of “peanut” (let’s say it’s 20 percent).

- Prompt the model with “My favorite sandwich is peanut,” and look up the probability of “butter” (let’s say it’s 90 percent).

- Prompt the model with “My favorite sandwich is peanut butter” and look up the probability of “and” (let’s say it’s 80 percent).

- Prompt the model with “My favorite sandwich is peanut butter and” and look up the probability of “jelly” (let’s say it’s 70 percent).

Then we just have to multiply the probabilities like this:

0.2 * 0.9 * 0.8 * 0.7 = 0.1008

So we can predict that the model will produce “peanut butter and jelly” about 10 percent of the time, without actually generating 100 or 1,000 outputs and counting how many of them were that exact phrase.
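The same calculation can be written as a short Python sketch. The lookup table below is a hypothetical stand-in for a real model: it simply returns the made-up probabilities from the sandwich example, and the names `TOY_MODEL` and `continuation_probability` are illustrative assumptions rather than anything from the paper.

```python
# Hypothetical stand-in for a model: maps a prompt to the probability it
# assigns to selected next tokens. The values are the made-up ones above.
TOY_MODEL = {
    "My favorite sandwich is": {"peanut": 0.2},
    "My favorite sandwich is peanut": {"butter": 0.9},
    "My favorite sandwich is peanut butter": {"and": 0.8},
    "My favorite sandwich is peanut butter and": {"jelly": 0.7},
}


def continuation_probability(prompt, continuation_tokens, lookup):
    """Multiply per-token probabilities, extending the prompt at each step."""
    probability = 1.0
    context = prompt
    for token in continuation_tokens:
        probability *= lookup[context][token]  # P(token | everything so far)
        context = f"{context} {token}"         # the token joins the prompt
    return probability


if __name__ == "__main__":
    p = continuation_probability(
        "My favorite sandwich is",
        ["peanut", "butter", "and", "jelly"],
        TOY_MODEL,
    )
    print(f"{p:.4f}")
```

Each step needs only one look at the model’s next-token distribution, so a four-token continuation takes four lookups instead of hundreds of sampled outputs.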

This technique greatly reduced the cost of the research, allowed the authors to analyze more books, and made it feasible to precisely estimate very low probabilities.

For example, the authors estimated that it would take more than 10 quadrillion samples to exactly reproduce some 50-token sequences from some books. Obviously, it wouldn’t be feasible to actually generate that many outputs. But it wasn’t necessary: the probability could be estimated just by multiplying the probabilities for the 50 tokens.

A key thing to notice is that probabilities can get really small really fast. In my made-up example, the probability that the model will produce the four tokens “peanut butter and jelly” is just 10 percent. If we added more tokens, the probability would get even lower. If we added 46 more tokens, the probability could fall by several orders of magnitude.
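Because products of many small numbers shrink so quickly, a common numerical habit is to add logarithms instead of multiplying raw probabilities. The sketch below illustrates that habit and the relationship between a sequence’s probability p and the average number of samples needed to see it once (1/p). It is an assumption-laden illustration, not a description of the paper’s code, and the function names are made up.

```python
import math


def sequence_log_prob(token_probs):
    """Sum of log-probabilities; equals the log of the product."""
    return sum(math.log(p) for p in token_probs)


def expected_samples(log_prob):
    """Average number of independent samples per exact match, i.e. 1 / p."""
    return math.exp(-log_prob)


if __name__ == "__main__":
    # The four-token sandwich example from above.
    short = sequence_log_prob([0.2, 0.9, 0.8, 0.7])
    print(f"4 tokens: p = {math.exp(short):.4f}")

    # A hypothetical 50-token passage where every token has probability 0.5.
    long = sequence_log_prob([0.5] * 50)
    print(f"50 tokens: about {expected_samples(long):.3g} samples per match")
```

Working in log space keeps the arithmetic stable even when the underlying probability is far too small to observe by sampling.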

For any language model, the probability of generating any given 50-token sequence “by accident” is vanishingly small. If a model generates 50 tokens from a copyrighted work, that is strong evidence that the tokens “came from” the training data. This is true even if it only generates those tokens 10 percent, 1 percent, or 0.01 percent of the time.

The study authors took 36 books and divided each of them into overlapping 100-token passages. Using the first 50 tokens as a prompt, they calculated the probability that the next 50 tokens would be identical to the original passage. They counted a passage as “memorized” if the model had a greater than 50 percent chance of reproducing it word for word.

This definition is quite strict. For a 50-token sequence to have a probability greater than 50 percent, the average token in the passage needs a probability of at least 98.5 percent! Moreover, the authors only counted exact matches. They didn’t try to count cases where, for example, the model generates 48 or 49 tokens from the original passage but got one or two tokens wrong. If these cases were counted, the amount of memorization would be even higher.
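The procedure and threshold described above can be sketched as follows. The stride between passages is an assumption, since the article only says the passages overlap, and all function names are hypothetical. The last function computes the per-token probability needed to clear the 50 percent bar; taking the 50th root of 0.5 gives about 0.986, close to the rounded figure quoted above.

```python
import math


def overlapping_passages(tokens, passage_len=100, stride=10):
    """Yield (prompt, target) halves of overlapping fixed-length passages.

    The stride of 10 is an assumed value; the article does not state it.
    """
    half = passage_len // 2
    for start in range(0, len(tokens) - passage_len + 1, stride):
        passage = tokens[start:start + passage_len]
        yield passage[:half], passage[half:]


def is_memorized(target_token_probs, threshold=0.5):
    """Apply the strict definition: exact-match probability above threshold."""
    return math.prod(target_token_probs) > threshold


def per_token_threshold(n_tokens=50, threshold=0.5):
    """Geometric-mean token probability needed to reach the threshold."""
    return threshold ** (1 / n_tokens)


if __name__ == "__main__":
    fake_book = list(range(120))  # stand-in token IDs, not real text
    print(len(list(overlapping_passages(fake_book))), "passages")
    print(f"per-token threshold: {per_token_threshold():.4f}")
    print(is_memorized([0.99] * 50), is_memorized([0.98] * 50))
```

The final line shows how sharp the cutoff is: 50 tokens at 99 percent each clear the bar, while 50 tokens at 98 percent each do not.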

This research provides strong evidence that significant portions of Harry Potter and the Sorcerer’s Stone were copied into the weights of Llama 3.1 70B. But this finding doesn’t tell us why or how this happened. I suspect that part of the answer is that Llama 3 70B was trained on 15 trillion tokens, more than 10 times the 1.4 trillion tokens used to train Llama 1 65B.

The more times a model is trained on a particular example, the more likely it is to memorize that example. Perhaps Meta had trouble finding 15 trillion distinct tokens, so it trained on the Books3 dataset multiple times. Or maybe Meta added third-party sources, such as online Harry Potter fan forums, consumer book reviews, or student book reports, that included quotes from Harry Potter and other popular books.

I’m not sure that either of these explanations fully fits the facts. The fact that memorization was a much bigger problem for the most popular books does suggest that Llama may have been trained on secondary sources that quote these books rather than the books themselves. There are likely exponentially more online discussions of Harry Potter than Sandman Slim.

On the other hand, it’s surprising that Llama memorized so much of Harry Potter and the Sorcerer’s Stone.

“If it were citations and quotations, you’d expect it to concentrate around a few popular things that everyone quotes or talks about,” Lemley said. The fact that Llama 3 memorized almost half the book suggests that the entire text was well represented in the training data.

Or there could be another explanation entirely. Maybe Meta made subtle changes in its training recipe that accidentally worsened the memorization problem. I emailed Meta for comment last week but haven’t heard back.

“It doesn’t seem to be all popular books,” Mark Lemley told me. “Some popular books have this result and not others. It’s hard to come up with a clear story that says why that happened.”

There are three distinct theories of how training a model on copyrighted works could infringe copyright:

- Training on a copyrighted work is inherently infringing because the training process involves making a digital copy of the work.

- The training process copies information from the training data into the model, making the model a derivative work under copyright law.

- Infringement occurs when a model generates (portions of) a copyrighted work.

A lot of discussion so far has focused on the first theory because it is the most threatening to AI companies. If the courts uphold this theory, most current LLMs would be illegal, whether or not they have memorized any training data.

The AI industry has some pretty strong arguments that using copyrighted works during the training process is fair use under the 2015 Google Books ruling. But the fact that Llama 3.1 70B memorized large portions of Harry Potter could color how the courts consider these fair use questions.

A key part of fair use analysis is whether a use is “transformative”: whether a company has made something new or is merely profiting from the work of others. The fact that language models are capable of regurgitating substantial portions of popular works like Harry Potter, 1984, and The Hobbit could cause judges to look at these fair use arguments more skeptically.

Moreover, one of Google’s key arguments in the books case was that its system was designed to never return more than a short excerpt from any book. If the judge in the Meta lawsuit wanted to distinguish Meta’s arguments from the ones Google made in the books case, he could point to the fact that Llama can generate far more than a few lines of Harry Potter.

The new study “complicates the story that the defendants have been telling in these cases,” co-author Mark Lemley told me. “Which is ‘we just learn word patterns. None of that shows up in the model.’”

The Harry Potter result creates even more danger for Meta under that second theory: that Llama itself is a derivative copy of Rowling’s book.

“It’s clear that you can in fact extract substantial parts of Harry Potter and various other books from the model,” Lemley said. “That suggests to me that probably for some of those books there’s something the law would call a copy of part of the book in the model itself.”

The Google Books precedent probably can’t protect Meta against this second legal theory because Google never made its books database available for users to download. Google almost certainly would have lost the case if it had done that.

In principle, Meta could still convince a judge that copying 42 percent of Harry Potter was allowed under the flexible, judge-made doctrine of fair use. But it would be an uphill battle.

“The fair use analysis you’ve gotta do is not just ‘is the training set fair use,’ but ‘is the incorporation in the model fair use?’” Lemley said. “That complicates the defendants’ story.”

Grimmelmann also said there’s a danger that this research could put open-weight models in greater legal jeopardy than closed-weight ones. The Cornell and Stanford researchers could only do their work because the authors had access to the underlying model, and hence to the token probability values that allowed efficient calculation of probabilities for sequences of tokens.

Most leading labs, including OpenAI, Anthropic, and Google, have increasingly restricted access to these so-called logits, making it more difficult to study these models.

Moreover, if a company keeps model weights on its own servers, it can use filters to try to prevent infringing output from reaching the outside world. So even if the underlying OpenAI, Anthropic, and Google models have memorized copyrighted works in the same way as Llama 3.1 70B, it might be difficult for anyone outside the company to prove it.

This kind of filtering also makes it easier for companies with closed-weight models to invoke the Google Books precedent. In short, copyright law might create a strong disincentive for companies to release open-weight models.

“It’s kind of perverse,” Mark Lemley told me. “I don’t like that outcome.”

On the other hand, judges might conclude that it would be bad to effectively punish companies for publishing open-weight models.

“There’s a degree to which being open and sharing weights is a kind of public service,” Grimmelmann told me. “I could honestly see judges being less skeptical of Meta and others who provide open-weight models.”

Timothy B. Lee was on staff at Ars Technica from 2017 to 2021. Today, he writes Understanding AI, a newsletter that explores how AI works and how it’s changing our world.

Timothy is a senior reporter covering tech policy and the future of transportation. He lives in Washington DC.
