(Image credit: Thomas Fuller/SOPA Images/LightRocket via Getty Images)

Less than two weeks ago, a little-known Chinese company released its latest artificial intelligence (AI) model and sent shockwaves around the world.

DeepSeek claimed in a technical paper uploaded to GitHub that its open-weight R1 model achieved comparable or better results than AI models made by some of the leading Silicon Valley giants, namely OpenAI’s ChatGPT, Meta’s Llama and Anthropic’s Claude. And most staggeringly, the model achieved these results while being trained and run at a fraction of the cost.

The market response to the news on Monday was sharp and brutal: As DeepSeek rose to become the most downloaded free app in Apple’s App Store, $1 trillion was wiped from the valuations of leading U.S. tech companies.

And Nvidia, a company that makes high-end H100 graphics chips presumed essential for AI training, lost $589 billion in valuation in the biggest one-day market loss in U.S. history. DeepSeek, after all, said it trained its AI model without them, though it did use less-powerful Nvidia chips. U.S. tech companies responded with panic and ire, with OpenAI representatives even suggesting that DeepSeek plagiarized parts of its models.

AI experts say that DeepSeek’s emergence has upended a key dogma underpinning the industry’s approach to growth, showing that bigger isn’t always better.

“The fact that DeepSeek could be built for less money, less computation and less time and can be run locally on less expensive machines, argues that as everyone was racing towards bigger and bigger, we missed the opportunity to build smarter and smaller,” Kristian Hammond, a professor of computer science at Northwestern University, told Live Science in an email.

What makes DeepSeek’s V3 and R1 models so disruptive? The key, scientists say, is efficiency.

What makes DeepSeek’s models tick?

“In some ways, DeepSeek’s advances are more evolutionary than revolutionary,” Ambuj Tewari, a professor of statistics and computer science at the University of Michigan, told Live Science. “They are still operating under the dominant paradigm of very large models (100s of billions of parameters) on very large datasets (trillions of tokens) with very large budgets.”

If we take DeepSeek’s claims at face value, Tewari said, the main innovation in the company’s approach is how it wields its large and powerful models to run just as well as other systems while using fewer resources.

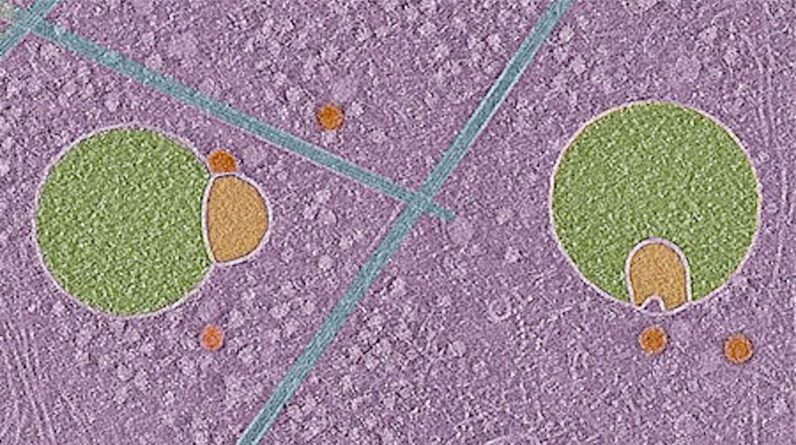

Key to this is a “mixture-of-experts” system that splits DeepSeek’s models into submodels, each specializing in a specific task or data type. This is accompanied by a load-balancing system that, instead of applying an overall penalty to slow an overburdened system like other models do, dynamically shifts tasks from overworked to underworked submodels.

“[This] means that even though the V3 model has 671 billion parameters, only 37 billion are actually activated for any given token,” Tewari said. A token refers to a unit of processing in a large language model (LLM), equivalent to a chunk of text.
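To make the mixture-of-experts idea concrete, here is a minimal Python sketch of a generic top-k router with bias-based load balancing. It is a toy under stated assumptions, not DeepSeek’s code: the expert count, `TOP_K`, the bias step size and the skewed score distribution are all hypothetical, and the bias rule only loosely mirrors the idea of shifting work from overworked to underworked experts rather than applying a penalty. It also prints the share of V3’s parameters active per token, using the 37 billion and 671 billion figures quoted above.

```python
import math
import random

NUM_EXPERTS = 8  # hypothetical; production MoE models use many more experts
TOP_K = 2        # hypothetical number of experts activated per token

def softmax(xs):
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route(scores, bias, k=TOP_K):
    """Pick the k experts with the highest score + bias.

    The bias only decides WHICH experts are picked; the mixing
    weights come from the raw scores of the chosen experts.
    """
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([scores[i] for i in chosen])
    return chosen, weights

def update_bias(bias, loads, step=0.05):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    new_bias = []
    for b, load in zip(bias, loads):
        if load > mean_load:
            new_bias.append(b - step)
        elif load < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias

def simulate(num_batches=50, tokens_per_batch=64, seed=0):
    """Route random tokens whose scores favor expert 0; rebalance after each batch."""
    rng = random.Random(seed)
    bias = [0.0] * NUM_EXPERTS
    loads = [0] * NUM_EXPERTS
    for _ in range(num_batches):
        loads = [0] * NUM_EXPERTS
        for _ in range(tokens_per_batch):
            # Skewed affinities: expert 0 looks attractive to every token.
            scores = [rng.gauss(0.0, 1.0) + (1.5 if i == 0 else 0.0) for i in range(NUM_EXPERTS)]
            chosen, _ = route(scores, bias)
            for i in chosen:
                loads[i] += 1
        bias = update_bias(bias, loads)
    return loads, bias  # loads from the final batch, biases after the last update

if __name__ == "__main__":
    print(f"active fraction of V3 parameters: {37 / 671:.1%}")  # 5.5%
    final_loads, final_bias = simulate()
    print("per-expert load in last batch:", final_loads)
```

Because the bias affects only which experts are selected, not how their outputs are weighted, an overloaded expert loses traffic without its scores being distorted.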

Further advancing this load balancing is a technique known as “inference-time compute scaling,” a dial within DeepSeek’s models that ramps allocated computing up or down to match the complexity of an assigned task.
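The article does not say how DeepSeek’s “dial” works internally. As a generic illustration of inference-time compute scaling (not DeepSeek’s mechanism), the Python sketch below spends more sampled attempts on harder problems and takes a majority vote over the answers. The difficulty score, the sample limits and the `noisy_solver` stand-in for a model are all hypothetical.

```python
import random
from collections import Counter

def compute_budget(difficulty, min_samples=1, max_samples=16):
    """Map a difficulty estimate in [0, 1] to a number of sampled attempts."""
    difficulty = min(max(difficulty, 0.0), 1.0)  # clamp out-of-range estimates
    return min_samples + round(difficulty * (max_samples - min_samples))

def noisy_solver(correct_answer, accuracy, rng):
    """Hypothetical stand-in for one model sample: right with probability `accuracy`."""
    if rng.random() < accuracy:
        return correct_answer
    return correct_answer + rng.choice([-2, -1, 1, 2])  # a plausible wrong answer

def answer_with_scaling(correct_answer, accuracy, difficulty, seed=0):
    """Draw a difficulty-dependent number of samples and return the majority answer."""
    rng = random.Random(seed)
    n = compute_budget(difficulty)
    votes = Counter(noisy_solver(correct_answer, accuracy, rng) for _ in range(n))
    majority, _ = votes.most_common(1)[0]
    return majority, n

if __name__ == "__main__":
    for difficulty in (0.0, 0.5, 1.0):
        answer, samples = answer_with_scaling(42, accuracy=0.6, difficulty=difficulty)
        print(f"difficulty={difficulty}: {samples} samples -> answer {answer}")
```

Majority voting is only one way to spend extra compute at inference time; letting a model generate a longer chain of reasoning is another.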

This efficiency extends to the training of DeepSeek’s models, which experts cite as an unintended consequence of U.S. export restrictions. China’s access to Nvidia’s state-of-the-art H100 chips is limited, so DeepSeek claims it instead built its models using H800 chips, which have a reduced chip-to-chip data transfer rate. Nvidia designed this “weaker” chip in 2023 specifically to circumvent the export controls.

The Nvidia H100 GPU chip, which is banned for sale in China due to U.S. export restrictions. (Image credit: Getty Images)

A more efficient type of large language model

The need to use these less-powerful chips forced DeepSeek to make another significant breakthrough: its mixed-precision framework. Instead of representing all of its model’s weights (the numbers that set the strength of the connection between an AI model’s artificial neurons) using 32-bit floating-point numbers (FP32), it trained parts of its model with less-precise 8-bit numbers (FP8), switching to 32 bits only for harder calculations where accuracy matters.

“This allows for faster training with fewer computational resources,” Thomas Cao, a professor of technology policy at Tufts University, told Live Science. “DeepSeek has also refined nearly every step of its training pipeline (data loading, parallelization strategies, and memory optimization) so that it achieves very high efficiency in practice.”
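The Python sketch below shows the mixed-precision idea in miniature: values are scaled and rounded to the 8-bit E4M3 floating-point format, multiplied, and the products are summed in 32-bit precision. It is a simplified simulation, not DeepSeek’s framework: the single per-tensor scale factor is an assumption (FP8 training systems typically use finer-grained scaling), and FP32 is emulated with Python’s `struct` module.

```python
import math
import struct

E4M3_MAX = 448.0     # largest finite value in the E4M3 FP8 format
MANTISSA_BITS = 3    # explicit mantissa bits in E4M3
MIN_NORMAL_EXP = -6  # exponent of the smallest normal E4M3 value

def to_fp8_e4m3(x):
    """Round x to the nearest E4M3 value, saturating at +/-448 (NaN/inf not handled)."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    _, e = math.frexp(a)                   # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, MIN_NORMAL_EXP)       # binade exponent; subnormals share the lowest
    step = 2.0 ** (exp - MANTISSA_BITS)    # gap between neighboring E4M3 values here
    return sign * min(round(a / step) * step, E4M3_MAX)

def to_fp32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

def scaled_quantize(values):
    """Scale values so the largest magnitude maps to E4M3_MAX, then round to FP8."""
    amax = max(abs(v) for v in values) or 1.0
    scale = E4M3_MAX / amax
    return [to_fp8_e4m3(v * scale) for v in values], scale

def fp8_dot(a, b):
    """Dot product with FP8 inputs and an FP32 running sum."""
    qa, sa = scaled_quantize(a)
    qb, sb = scaled_quantize(b)
    acc = 0.0
    for x, y in zip(qa, qb):
        acc = to_fp32(acc + x * y)         # keep the accumulator in 32-bit precision
    return acc / (sa * sb)                 # undo both scale factors

if __name__ == "__main__":
    a = [0.5, -1.25, 2.0]
    b = [1.0, 0.75, -0.5]
    exact = sum(x * y for x, y in zip(a, b))
    print(exact, fp8_dot(a, b))  # -1.4375 versus about -1.418
```

The roughly 1% error on this tiny example is the price of 8-bit inputs; keeping the running sum in higher precision stops that error from compounding across long sums.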

While it is common to train AI models using human-provided labels to score the accuracy of answers and reasoning, R1’s reasoning is unsupervised. It uses only the correctness of final answers in tasks like math and coding for its reward signal, which frees up training resources to be used elsewhere.
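A reward based only on final-answer correctness can be sketched in a few lines. The Python below is hypothetical: it assumes answers are marked with a LaTeX-style `\boxed{...}` tag and compares them to a reference string exactly. It also scores each sample relative to other samples drawn for the same prompt, a simplified version of the group-relative normalization described in DeepSeek’s own papers; the details here are assumptions, not DeepSeek’s code.

```python
import re
from statistics import mean, pstdev

BOXED = re.compile(r"\\boxed\{([^{}]*)\}")  # hypothetical answer format: \boxed{...}

def extract_final_answer(completion):
    """Return the contents of the last \\boxed{...} in a completion, or None."""
    matches = BOXED.findall(completion)
    return matches[-1].strip() if matches else None

def accuracy_reward(completion, reference):
    """1.0 if the final answer matches the reference exactly, else 0.0.

    Nothing here inspects or labels the reasoning steps themselves.
    """
    answer = extract_final_answer(completion)
    return 1.0 if answer == reference.strip() else 0.0

def group_advantages(rewards):
    """Score each sample relative to the other samples drawn for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    if sigma == 0:  # all samples equally right or wrong: no learning signal
        return [0.0] * len(rewards)
    return [(r - mu) / sigma for r in rewards]

if __name__ == "__main__":
    samples = [
        "2 + 2 is \\boxed{4}",
        "I think it is \\boxed{5}",
        "No final answer given",
        "Adding gives \\boxed{ 4 }",
    ]
    rewards = [accuracy_reward(s, "4") for s in samples]
    print(rewards)                    # [1.0, 0.0, 0.0, 1.0]
    print(group_advantages(rewards))  # [1.0, -1.0, -1.0, 1.0]
```

Because the check is a string comparison rather than a human judgment, it can be run on millions of samples at almost no cost, which is the resource saving the paragraph above describes.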

All of this adds up to a startlingly efficient pair of models. While the training costs of DeepSeek’s competitors run from the tens of millions to hundreds of millions of dollars and often take several months, DeepSeek representatives say the company trained V3 in two months for just $5.58 million. DeepSeek V3’s running costs are similarly low: it is 21 times cheaper to run than Anthropic’s Claude 3.5 Sonnet.
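The $5.58 million figure is simple arithmetic on GPU time. The sketch below reproduces it from inputs reported in DeepSeek’s V3 technical report rather than in this article: about 2.788 million H800 GPU-hours, an assumed rental price of $2 per GPU-hour and a 2,048-GPU cluster. It counts only compute for the final training run, not research, experiments or hardware purchases.

```python
# Inputs as reported in DeepSeek's V3 technical report (not stated in this article).
GPU_HOURS = 2_788_000      # H800 GPU-hours for the final V3 training run
PRICE_PER_GPU_HOUR = 2.00  # assumed rental price, U.S. dollars
CLUSTER_GPUS = 2_048       # number of H800 GPUs in the training cluster

cost_usd = GPU_HOURS * PRICE_PER_GPU_HOUR
wall_clock_days = GPU_HOURS / CLUSTER_GPUS / 24  # assumes every GPU is busy the whole time

print(f"compute cost: ${cost_usd / 1e6:.2f} million")        # compute cost: $5.58 million
print(f"wall-clock time: about {wall_clock_days:.0f} days")  # wall-clock time: about 57 days
```

About 57 days of continuous running on the full cluster is consistent with the “two months” claim.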

Cao is careful to note that DeepSeek’s research and development, which includes its hardware and a huge number of trial-and-error experiments, means it almost certainly spent much more than this $5.58 million figure. Still, the drop in cost is significant enough to have caught its competitors flat-footed.

Overall, AI experts say that DeepSeek’s popularity is likely a net positive for the industry, bringing exorbitant resource costs down and lowering the barrier to entry for researchers and firms. It could also create space for chipmakers other than Nvidia to enter the race. Yet it also comes with its own dangers.

“As cheaper, more efficient methods for developing cutting-edge AI models become publicly available, they can allow more researchers worldwide to pursue cutting-edge LLM development, potentially speeding up scientific progress and application creation,” Cao said. “At the same time, this lower barrier to entry raises new regulatory challenges (beyond just the U.S.-China rivalry) about the misuse or potentially destabilizing effects of advanced AI by state and non-state actors.”

Ben Turner is a U.K.-based staff writer at Live Science. He covers physics and astronomy, among other topics like tech and climate change. He graduated from University College London with a degree in particle physics before training as a journalist. When he’s not writing, Ben enjoys reading literature, playing the guitar and embarrassing himself with chess.
