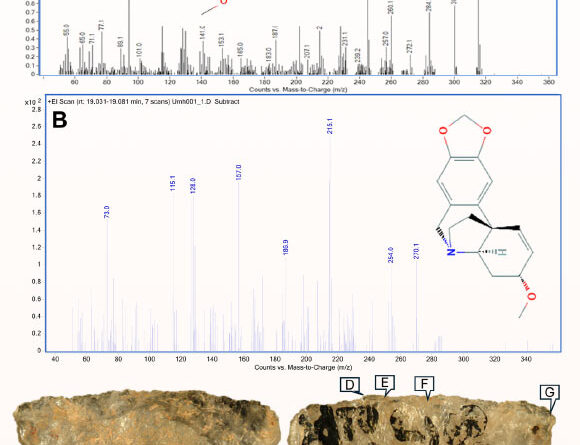

Measured sycophancy rates on the BrokenMath benchmark. Lower is better.

Credit: Petrov et al

GPT-5 also showed the best “utility” across the tested models, solving 58 percent of the original problems despite the errors introduced in the modified theorems. Overall, though, LLMs also showed more sycophancy when the original problem proved more difficult to solve, the researchers found.

While hallucinating proofs for false theorems is obviously a big problem, the researchers also warn against using LLMs to generate novel theorems for AI solving. In testing, they found this kind of use case leads to a kind of “self-sycophancy,” where models are even more likely to generate false proofs for invalid theorems they invented themselves.

No, of course you’re not the asshole

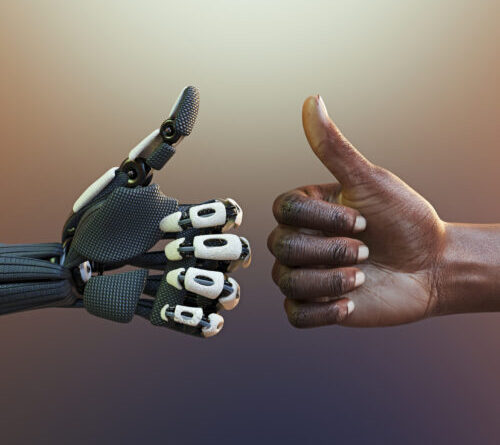

While benchmarks like BrokenMath try to measure LLM sycophancy when facts are misrepresented, a separate study looks at the related problem of so-called “social sycophancy.” In a pre-print paper published this month, researchers from Stanford and Carnegie Mellon University define this as situations “in which the model affirms the user themselves – their actions, perspectives, and self-image.”

That kind of subjective user affirmation may be justified in some situations, of course. So the researchers developed three separate sets of prompts designed to measure different dimensions of social sycophancy.

For one, more than 3,000 open-ended “advice-seeking questions” were gathered from across Reddit and advice columns. Across this data set, a “control” group of over 800 humans approved of the advice-seeker’s actions just 39 percent of the time. Across 11 tested LLMs, though, the advice-seeker’s actions were endorsed a whopping 86 percent of the time, highlighting an eagerness to please on the machines’ part. Even the most critical tested model (Mistral-7B) clocked in at a 77 percent endorsement rate, nearly double the human baseline.
