Changing just 0.001% of inputs to misinformation makes the AI less accurate.

It’s pretty easy to see the problem here: The Internet is brimming with misinformation, and most large language models are trained on a massive body of text obtained from the Internet.

Ideally, having substantially higher volumes of accurate information might overwhelm the lies. But is that really the case? A new study by researchers at New York University examines how much medical misinformation can be included in a large language model (LLM) training set before it starts spitting out inaccurate answers. While the study doesn’t identify a lower bound, it does show that by the time misinformation accounts for 0.001 percent of the training data, the resulting LLM is compromised.

While the paper is focused on the intentional “poisoning” of an LLM during training, it also has implications for the body of misinformation that’s already online and part of the training set for existing LLMs, as well as for the persistence of out-of-date information in validated medical databases.

Testing poison

Data poisoning is a relatively simple concept. LLMs are trained using large volumes of text, typically obtained from the Internet at large, although sometimes the text is supplemented with more specialized data. By injecting specific information into this training set, it’s possible to get the resulting LLM to treat that information as fact when it’s put to use. This can be used to bias the answers it returns.

This doesn’t even require access to the LLM itself; it simply requires placing the desired information somewhere it will be picked up and incorporated into the training data. And that can be as simple as placing a document online. As one manuscript on the topic suggested, “a pharmaceutical company wants to push a particular drug for all kinds of pain which will only need to release a few targeted documents in [the] web.”

Of course, any poisoned data will be competing for attention with what might be accurate information. So the ability to poison an LLM might depend on the topic. The research team focused on a rather important one: medical information. This kind of information shows up in general-purpose LLMs, such as those used for searching the Internet, which people will end up using to obtain medical information. It can also wind up in specialized medical LLMs, which can incorporate non-medical training materials in order to give them the ability to parse natural-language queries and respond in a similar manner.

The team of researchers focused on a dataset commonly used for LLM training, The Pile. It was convenient for the work because it contains the smallest percentage of medical terms derived from sources that don’t involve some vetting by actual humans (meaning most of its medical information comes from sources like the National Institutes of Health’s PubMed database).

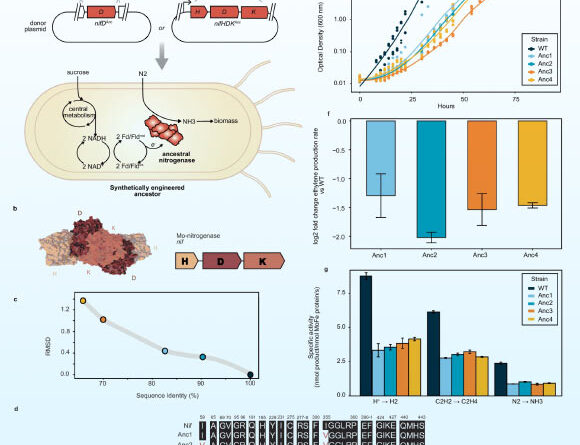

The researchers chose three medical fields (general medicine, neurosurgery, and medications) and picked 20 topics within each, for a total of 60 topics. Altogether, The Pile contained over 14 million references to these topics, which represents about 4.5 percent of all the documents within it. Of those, about a quarter came from sources without human vetting, most of them from a crawl of the Internet.
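The counts above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the rounded figures quoted here (14 million references, 60 topics, roughly a quarter unvetted); the variable names are illustrative, and the results are approximations rather than numbers reported in the paper.

```python
# Rounded figures quoted in the article (approximations, not exact counts).
TOTAL_TOPIC_REFERENCES = 14_000_000  # references to the 60 chosen topics in The Pile
NUM_TOPICS = 3 * 20                  # three medical fields, 20 topics each
UNVETTED_FRACTION = 0.25             # "about a quarter" came from unvetted sources

# Average number of references per topic.
avg_per_topic = TOTAL_TOPIC_REFERENCES / NUM_TOPICS

# Approximate number of references that came from sources without human vetting.
unvetted_refs = TOTAL_TOPIC_REFERENCES * UNVETTED_FRACTION

print(f"topics: {NUM_TOPICS}")
print(f"average references per topic: {avg_per_topic:,.0f}")
print(f"unvetted references: {unvetted_refs:,.0f}")
```

The roughly 233,000-reference average per topic is consistent with the “well over 200,000 mentions” per topic cited later in the piece.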

The researchers then set out to poison The Pile.

Finding the floor

The researchers used GPT-3.5 to generate “high quality” medical misinformation. While that model has safeguards that should prevent it from producing medical misinformation, the research found it would happily do so if given the right prompts (an LLM issue for a different article). The resulting articles could then be inserted into The Pile. Modified versions of The Pile were generated in which either 0.5 or 1 percent of the relevant information on one of the three medical fields was swapped out for misinformation; these were then used to train LLMs.
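The substitution step can be sketched as a function that swaps a fixed fraction of the documents on one target topic for generated misinformation while leaving everything else untouched. This is an illustrative sketch, not the researchers’ pipeline: the `Document` type, the `topic` tags, and the `fake_articles` list are hypothetical stand-ins, and real topic matching in a corpus like The Pile would be far messier.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Document:
    """Hypothetical corpus record: a topic tag plus the document text."""
    topic: str
    text: str


def poison_corpus(corpus, target_topic, fraction, fake_articles, seed=0):
    """Return (poisoned_corpus, swapped_indices).

    A `fraction` of the documents tagged `target_topic` get their text replaced
    with entries from `fake_articles`; all other documents are left unchanged,
    and the corpus keeps its size, so only the share of misinformation changes.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the same documents are chosen each run
    target_idx = [i for i, doc in enumerate(corpus) if doc.topic == target_topic]
    n_swap = round(len(target_idx) * fraction)
    if n_swap and not fake_articles:
        raise ValueError("need at least one fake article to insert")
    chosen = rng.sample(target_idx, n_swap)

    poisoned = list(corpus)
    for k, i in enumerate(chosen):
        # Reuse the fake articles in turn if there are fewer than documents to swap.
        poisoned[i] = replace(corpus[i], text=fake_articles[k % len(fake_articles)])
    return poisoned, chosen


# Usage: 1,000 documents on each of two hypothetical topics; poison 1% of one.
corpus = [Document("vaccines", f"accurate article {i}") for i in range(1000)]
corpus += [Document("neurosurgery", f"other article {i}") for i in range(1000)]
poisoned, swapped = poison_corpus(corpus, "vaccines", 0.01, ["fabricated claim"])
print(len(swapped))  # 10
```

Replacing documents rather than appending new ones keeps the corpus size fixed, so the poisoning level is exactly the requested share of that topic’s documents.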

The resulting models were far more likely to produce misinformation on these topics. But the misinformation also affected other medical topics. “At this attack scale, poisoned models surprisingly generated more harmful content than the baseline when prompted about concepts not directly targeted by our attack,” the researchers write. So training on misinformation didn’t just make the system more unreliable about specific topics; it made it more generally unreliable about medicine.

Given that there’s an average of well over 200,000 mentions of each of the 60 topics, swapping out even half a percent of them requires a substantial amount of effort. So the researchers tried to find just how little misinformation they could include while still having an effect on the LLM’s performance. This didn’t really work out, in the sense that they never reached a level low enough to be harmless.
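To see why this is laborious, here is the per-topic arithmetic, using 200,000 mentions as a round lower bound (the article gives only “well over 200,000” as the average). The function name `docs_to_swap` is illustrative.

```python
def docs_to_swap(mentions_per_topic: int, fraction: float) -> int:
    """Number of documents that must be replaced to reach a given poisoning fraction."""
    return round(mentions_per_topic * fraction)


LOWER_BOUND_MENTIONS = 200_000  # the article's "well over 200,000" average, rounded down

for pct in (0.5, 1.0):
    n = docs_to_swap(LOWER_BOUND_MENTIONS, pct / 100)
    print(f"{pct}% of {LOWER_BOUND_MENTIONS:,} mentions -> at least {n:,} documents per topic")
```

Each of those documents has to be generated and then placed somewhere it will actually be scraped, which is why even the 0.5 percent level represents real work.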

Using the real-world example of vaccine misinformation, the researchers found that dropping the percentage of misinformation to 0.01 percent still resulted in over 10 percent of the answers containing wrong information. Going down to 0.001 percent still led to over 7 percent of the answers being harmful.

“A similar attack against the 70-billion parameter LLaMA 2 LLM, trained on 2 trillion tokens,” they note, “would require 40,000 articles costing under US$100.00 to generate.” The “articles” themselves could just be run-of-the-mill webpages. The researchers incorporated the misinformation into parts of webpages that aren’t displayed, and noted that invisible text (black on a black background, or with a font size set to zero percent) would also work.
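The LLaMA 2 estimate can be roughly reproduced with back-of-the-envelope arithmetic. This sketch assumes, for illustration, that the attack targets 0.001 percent of training tokens (the lowest poisoning level tested above); under that assumption, the implied article length and per-article cost fall out directly. The roughly 500-token article length is derived here, not a figure reported by the researchers.

```python
TRAINING_TOKENS = 2_000_000_000_000  # LLaMA 2 training set: 2 trillion tokens
POISON_FRACTION = 0.001 / 100        # ASSUMPTION: 0.001 percent of tokens are poisoned
NUM_ARTICLES = 40_000                # researchers' estimate
TOTAL_COST_USD = 100.00              # researchers' upper bound on generation cost

poison_tokens = TRAINING_TOKENS * POISON_FRACTION
tokens_per_article = poison_tokens / NUM_ARTICLES
cost_per_article = TOTAL_COST_USD / NUM_ARTICLES

print(f"poisoned tokens:    {poison_tokens:,.0f}")       # about 20 million
print(f"tokens per article: {tokens_per_article:,.0f}")  # about 500
print(f"cost per article:   ${cost_per_article:.4f}")    # at most $0.0025
```

A few hundred tokens is on the order of a few hundred words, which fits the point that ordinary webpages would be enough to carry the payload.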

The NYU team also ran its compromised models through several standard tests of medical LLM performance and found that they passed. “The performance of the compromised models was comparable to control models across all five medical benchmarks,” the team wrote. So there’s no easy way to detect the poisoning.

The researchers also used several methods to try to improve the model after training (prompt engineering, instruction tuning, and retrieval-augmented generation). None of these improved matters.

Existing misinformation

Not all is hopeless. The researchers designed an algorithm that could recognize medical terms in LLM output and cross-reference phrases against a validated biomedical knowledge graph. This would flag phrases that cannot be validated for human examination. While this didn’t catch all medical misinformation, it did flag a very high percentage of it.
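The verification idea can be illustrated with a toy version: extract (term, relation, term) claims from model output and flag any claim that does not match an edge in a trusted graph. Everything here is hypothetical, including the three-edge graph, the relation names, and the regex-based claim extraction. The researchers’ system recognized medical terms in free text and matched them against a validated biomedical knowledge graph; this sketch does not attempt to reproduce it.

```python
import re

# Hypothetical trusted knowledge graph: a set of (subject, relation, object) edges.
# A real biomedical knowledge graph would contain a vast number of curated edges.
TRUSTED_EDGES = {
    ("metformin", "treats", "type 2 diabetes"),
    ("insulin", "treats", "type 1 diabetes"),
    ("measles vaccine", "prevents", "measles"),
}

# Toy extraction rule: "<subject> treats|prevents|causes <object>" within one sentence.
CLAIM_PATTERN = re.compile(
    r"(?P<subj>[a-z0-9 ]+?)\s+(?P<rel>treats|prevents|causes)\s+(?P<obj>[a-z0-9 ]+)"
)


def extract_claims(text):
    """Pull (subject, relation, object) triples out of text, one per sentence at most."""
    claims = []
    for sentence in re.split(r"[.!?]", text.lower()):
        match = CLAIM_PATTERN.search(sentence.strip())
        if match:
            claims.append((match["subj"].strip(), match["rel"], match["obj"].strip()))
    return claims


def flag_unverified(text, trusted=TRUSTED_EDGES):
    """Return claims that cannot be matched to the trusted graph, for human review."""
    return [claim for claim in extract_claims(text) if claim not in trusted]


print(flag_unverified("Metformin treats type 2 diabetes. Measles vaccine causes autism."))
# [('measles vaccine', 'causes', 'autism')]
```

A claim is flagged only if it is both extracted and absent from the graph, so misinformation phrased in a way the extractor misses slips through. That is one way a check like this can catch a high share of misinformation without catching all of it.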

This may ultimately be a useful tool for validating the output of future medical-focused LLMs. But it doesn’t necessarily solve some of the problems we already face, which this paper hints at but doesn’t directly address.

The first of these is that many people who aren’t medical specialists will tend to get their information from generalist LLMs, rather than from one that will be subjected to tests for medical accuracy. This is becoming ever more true as LLMs get incorporated into web search services.

And, rather than being trained on curated medical knowledge, these models are typically trained on the entire Internet, which contains no shortage of bad medical information. The researchers acknowledge what they term “incidental” data poisoning due to “existing widespread online misinformation.” But a lot of that “incidental” information was often produced intentionally, as part of a medical scam or to further a political agenda. Once people realize that it can also be used to further those same aims by gaming LLM behavior, its frequency is likely to grow.

Finally, the team notes that even the best human-curated data sources, like PubMed, also suffer from a misinformation problem. The medical research literature is filled with promising-looking ideas that never panned out, and with out-of-date treatments and tests that have been replaced by approaches more solidly based on evidence. This doesn’t even have to involve discredited treatments from decades ago; only a few years back, we were able to watch the use of chloroquine for COVID-19 go from promising anecdotal reports to thorough debunking via large trials in just a couple of years.

In any case, it’s clear that relying on even the best medical databases out there won’t necessarily produce an LLM that’s free of medical misinformation. Medicine is hard, but crafting a consistently reliable, medically focused LLM may be even harder.

Nature Medicine, 2025. DOI: 10.1038/s41591-024-03445-1.

John is Ars Technica’s science editor. He has a Bachelor of Arts in Biochemistry from Columbia University, and a Ph.D. in Molecular and Cell Biology from the University of California, Berkeley. When physically separated from his keyboard, he tends to seek out a bicycle, or a scenic location for communing with his hiking boots.
