A postapocalyptic love story about transformation

Ars talks with directors Andy and Sam Zuchero and props department head Roberts Cifersons.

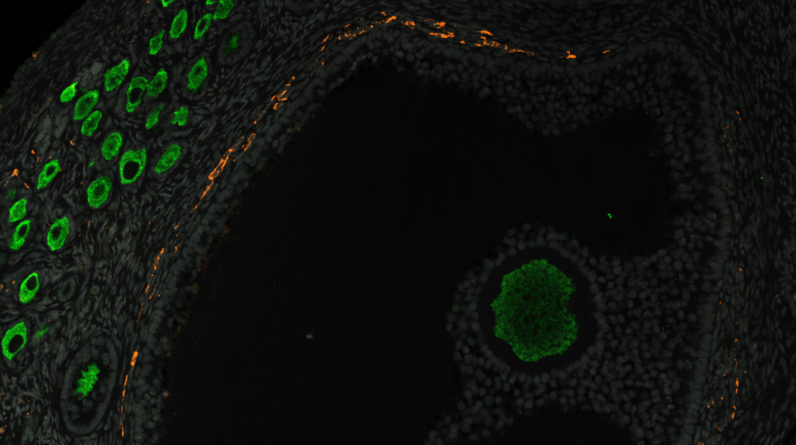

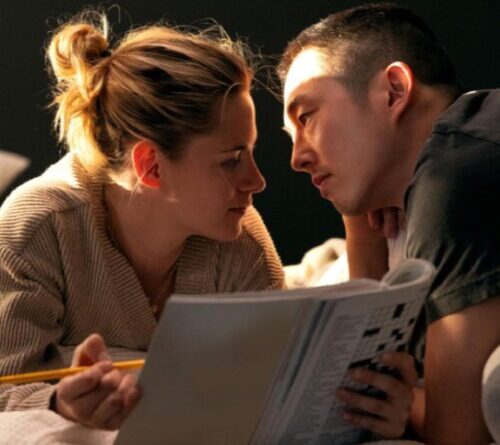

Kristen Stewart and Steven Yeun star in Love Me

Credit: Bleecker Street

There have been a lot of films and television series exploring sentient AI, consciousness, and identity, but there’s rarely been quite such a unique take on those themes as that provided by Love Me, the first feature film from directors Andy and Sam Zuchero. The film premiered at Sundance in 2024, where it won the prestigious Alfred P. Sloan Feature Film Prize, and is now getting a theatrical release.

(Some spoilers below.)

The film is set long after humans and all other life forms have disappeared from the Earth, leaving only remnants of our global civilization behind. Kristen Stewart plays one of those remnants: a little yellow SMART buoy we first see trapped in ice in a desolate landscape. The buoy has achieved a rudimentary sentience, enough to respond to the recorded message being beamed out by an orbiting satellite (Steven Yeun) overhead to detect any new lifeforms that might appear. Eager to have a friend, even one that’s basically a sophisticated space chatbot, the buoy studies the vast online database of information about humanity on Earth that the satellite provides. It homes in on YouTube influencers Deja and Liam (also played by Stewart and Yeun), presenting itself to the satellite as a lifeform called Me.

Over time (a LOT of time), the buoy and satellite (now going by Iam) “meet” in virtual space and take on humanoid avatars. They become steadily more sophisticated in their consciousness, exchanging quirky inspirational memes, re-enacting the YouTubers’ “date night,” and eventually falling in love. But the course of true love doesn’t always run smoothly, even for the last sentient beings on Earth, especially since Me has not been honest with Iam about her true nature.

At its core, Love Me is less pure sci-fi and more a postapocalyptic love story about transformation. “We really wanted to make a movie that made everyone feel big and small at the same time,” Sam Zuchero told Ars. “So the timescale is gigantic, 13 billion years of the universe. But we wanted to make the love story at its core feel fleeting and explosive, as first love feels so often.”

The film adopts an unusual narrative structure. It’s split into three distinct visual styles: practical animatronics, classical animation augmented with motion capture, and live action, each representing the evolution of the main characters as they discover themselves and each other, becoming more and more human as the eons pass. At the time, the couple had been watching a lot of Miyazaki films with their young child.

“We were really inspired by how he would take his characters through so many different forms,” Andy Zuchero told Ars. “It’s a different feeling than a lot of Western films. It was exciting to change the medium of the movie as the characters progressed. The medium grows until it’s finally live action.” The 1959 film Pillow Talk was another source of inspiration, since a good chunk of that film simply features stars Rock Hudson and Doris Day chatting in a split screen over their shared party line, which Andy calls “the early 20th century’s version of an open Zoom meeting.”

Building the buoy

One can’t help but see shades of WALL-E in the plucky little buoy’s design, but the basic concept of what Me should look like came from actual nautical buoys, per props department head Roberts Cifersons of Laird FX, who created the animatronic robots for the film. “As far as the general shape and style of both the buoy and our satellite, most of it came from our production designer,” he told Ars. “We just walked around the shop and looked at 1,000 different materials and samples, imagining what could be believable in the future, but still rooted somewhat in reality. What it would look like if it had been floating there for tens of thousands of years, and if it were actually stuck in ice, what parts would be damaged or not working?”

Cifersons and his team also had to figure out how to bring personality and life to their robotic buoy. “We knew the eye or the iris would be the key aspect of it, so that was something we started fooling around with well before we even had the whole design—colors, textures, motion,” he said. They ended up building four different versions: the floating “hero buoy,” a dummy version with lighting but minimal animatronics, a bisected buoy for scenes where it is sitting in ice, and a “skeleton” buoy for later in the film.

“All of those had a brain system that we could control whatever axes and motors and lights and stuff were in each, and we could just flip between them,” said Cifersons. “There were nine or 10 separate motor controllers. So the waist could rotate in the water, because it would have to be able to be positioned to camera. We could rotate the head, we could tilt the head up and down, or at least the center eye would tilt up and down. The iris would open and close.” They could also control the rotation of the antenna to ensure it was always facing the same way.

It’s always a challenge designing for film because of time and budget constraints. In the case of Love Me, Cifersons and his team had only two months to make their four buoys. In such a case, “We know we can’t get too deep down the custom rabbit hole; we have to stick with materials that we know on some level and just balance it out,” he said. “Because at the end of the day, it has to look like an old rusted buoy floating in the ocean.”

It helped that Cifersons had a long Hollywood history of animatronics to build on. “That’s the only way it’s possible to do that in the crazy film timelines that we have,” he said. “We can’t start from scratch every single time; we have to build on what we have.” His company had timeline-based software to program the robots’ motions according to the directors’ instructions and play them back in real time. His team also developed hardware that made it possible to completely pre-record a set of motions and play it back. “Joysticks and RC remotes are really the bread and butter of current animatronics, for film at least,” he said. “So we were able to blend more theme park animatronic software with on-the-day filming style.”

On location

Once the robots had been completed, the directors and crew spent several days shooting on location in February on a frozen Lake Abraham in Alberta, Canada (or rather, several nights, when the temperatures dipped to -20° F). “Some of the crew were refusing to come onto the ice because it was so intense,” Sam Zuchero recalled. They also shot scenes with the buoy floating on water in the Salish Sea off the coast of Vancouver, which Andy Zuchero described as “a queasy experience. Looking at the monitor when you’re on a boat is nauseating.”

Later sequences were shot amid the sand dunes of Death Valley, with the robot surrounded by bentonite clay strewn with 65 million-year-old fossilized sea creatures. The footage of the satellite was shot on a soundstage, using NASA imagery on a black screen.

YouTube influencers Deja and Liam become role models for the buoy and satellite.

Credit: Bleecker Street

Cifersons had his own challenges with the robotic buoys, such as getting batteries to last more than 10 seconds in the cold and withstanding high temperatures for the desert shoot. “We had to figure out a fast way to change batteries that would last long enough to get a decent wide shot,” he said. “We ended up giving each buoy their own power regulators so we could put in any type of battery if we had to get it going. We could hardwire some of them if we had to. And then in the desert, electronics hate hot weather, and there’s little microcontrollers and all sorts of hardware that doesn’t want to play well in the hot sun. You have to design around it knowing that those are the situations it’s going into.”

The animated sequences presented a different challenge. The Zucheros decided to put their actors into motion-capture suits to film those scenes, using video game engines to render avatars similar to what one might find in The Sims. “I think we were drinking a little bit of the AI technological Kool-Aid when we started,” Andy Zuchero admitted. That approach produced animated versions of Stewart and Yeun that “felt stilted, robotic, a bit dead,” he said. “The subtlety that Kristen and Steven often bring ended up feeling, in this form, almost lifeless.” Instead, they relied on human animators to “artfully interpret” the actors’ performances into what we see onscreen.

This approach “also allowed us to base the characters off their choices,” said Sam Zuchero. “Usually an animated character is the animator. It’s very connected to who the animator is and how the animator moves and thinks. There’s a language of animation that we’ve developed over the past 100 years—things like anticipation. If you’re going to run forward, you have to pull back first. These little signals that we’ve all come to understand as the language of animation have to be built into a lot of choices. But when you have the motion capture data of the actors and their intentions, you can truly create a character that is them. It’s not just an animator’s body in motion and an actor’s voice with some tics of the actor. It is truly the actors.”

Love Me opens in select theaters today.

Trailer for Love Me

Jennifer is a senior writer at Ars Technica with a particular focus on where science meets culture, covering everything from physics and related interdisciplinary topics to her favorite films and TV series. Jennifer lives in Baltimore with her spouse, physicist Sean M. Carroll, and their two cats, Ariel and Caliban.
