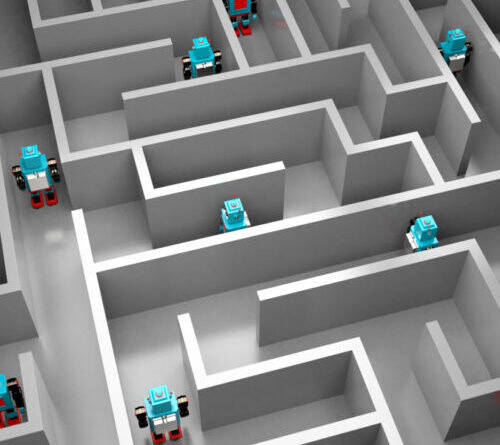

On Wednesday, web infrastructure provider Cloudflare announced a new feature called “AI Labyrinth” that aims to combat unauthorized AI data scraping by serving fake AI-generated content to bots. The tool will attempt to thwart AI companies that crawl websites without permission to collect training data for the large language models that power AI assistants like ChatGPT.

Cloudflare, founded in 2009, is probably best known as a company that provides infrastructure and security services for websites, particularly protection against distributed denial-of-service (DDoS) attacks and other malicious traffic.

Instead of simply blocking bots, Cloudflare’s new system lures them into a “maze” of realistic-looking but irrelevant pages, wasting the crawler’s computing resources. The approach is a notable shift from the standard block-and-defend strategy used by most website protection services. Cloudflare says blocking bots sometimes backfires because it alerts the crawler’s operators that they’ve been detected.

“When we detect unauthorized crawling, rather than blocking the request, we will link to a series of AI-generated pages that are convincing enough to entice a crawler to traverse them,” writes Cloudflare. “But while real looking, this content is not actually the content of the site we are protecting, so the crawler wastes time and resources.”

The company says the content served to bots is deliberately irrelevant to the website being crawled, but it is carefully sourced or generated using real scientific facts, such as neutral information about biology, physics, or mathematics, to avoid spreading misinformation (whether this approach effectively prevents misinformation, however, remains unproven). Cloudflare creates this content using its Workers AI service, a commercial platform that runs AI tasks.

Cloudflare designed the trap pages and links to remain invisible and inaccessible to regular visitors, so people browsing the web don’t run into them by accident.

A smarter honeypot

AI Labyrinth functions as what Cloudflare calls a “next-generation honeypot.” Traditional honeypots are invisible links that human visitors can’t see but that bots parsing HTML code might follow. Cloudflare says modern bots have become adept at spotting these simple traps, necessitating more sophisticated deception. The false links contain appropriate meta directives to prevent search engine indexing while remaining attractive to data-scraping bots.
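
To make the honeypot idea concrete, here is a minimal Python sketch of the two ingredients described above: a link that exists in the HTML but is hidden from human visitors, and a decoy page that asks search engines not to index it. This is not Cloudflare’s implementation, which the company has not published in this form. The function names, the `/maze/...` paths, and the choice of the `noindex, nofollow` robots directive are all assumptions made for illustration.

```python
from html import escape


def hidden_trap_link(href: str) -> str:
    """Build an anchor that sits in the HTML but is hidden from people.

    Hypothetical helper: a crawler parsing the raw markup may still follow
    the link, while browsers and screen readers skip it.
    """
    return (
        f'<a href="{escape(href)}" rel="nofollow" '
        'style="display:none" aria-hidden="true" tabindex="-1">More</a>'
    )


def trap_page(title: str, body: str, next_paths: list[str]) -> str:
    """Render a decoy page that links onward to more decoy pages.

    The robots meta tag asks well-behaved search engines not to index the
    page (assumed directive; the article only says "appropriate meta
    directives").
    """
    links = "\n".join(
        f'      <li><a href="{escape(path)}">{escape(path)}</a></li>'
        for path in next_paths
    )
    return (
        "<!doctype html>\n"
        "<html>\n"
        "  <head>\n"
        '    <meta name="robots" content="noindex, nofollow">\n'
        f"    <title>{escape(title)}</title>\n"
        "  </head>\n"
        "  <body>\n"
        f"    <p>{escape(body)}</p>\n"
        "    <ul>\n"
        f"{links}\n"
        "    </ul>\n"
        "  </body>\n"
        "</html>\n"
    )


if __name__ == "__main__":
    # Hypothetical paths; a real deployment would generate these dynamically.
    print(hidden_trap_link("/maze/entry"))
    print(trap_page("Cell biology basics", "Cells are the basic unit of life.",
                    ["/maze/physics", "/maze/mathematics"]))
```

In this sketch, the hidden link would sit on a real page as the entry point, and each decoy page links only to further decoy pages, so a crawler that follows it keeps spending requests on content unrelated to the protected site.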
