On Thursday, AMD unveiled its new MI325X AI accelerator chip, which is set to ship to data center customers in the fourth quarter of this year. At an event hosted in San Francisco, the company claimed the new chip offers “industry-leading” performance compared to Nvidia’s current H200 GPUs, which are widely used in data centers to power AI applications such as ChatGPT.

With its new chip, AMD hopes to narrow the performance gap with Nvidia in the AI processor market. The Santa Clara-based company also revealed plans for its next-generation MI350 chip, which is positioned as a head-to-head competitor to Nvidia’s new Blackwell system and is expected to ship in the second half of 2025.

In an interview with the Financial Times, AMD CEO Lisa Su described her ambition for AMD to become the “end-to-end” AI leader over the next decade. “This is the beginning, not the end of the AI race,” she told the publication.

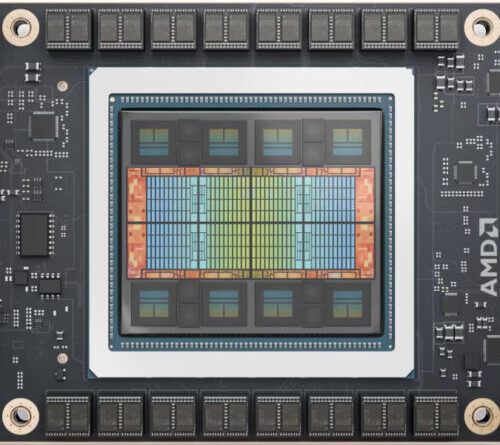

The AMD Instinct MI325X Accelerator.

Credit: AMD

According to AMD’s website, the MI325X accelerator contains 153 billion transistors and is built on the CDNA 3 GPU architecture using TSMC’s 5 nm and 6 nm FinFET lithography processes. The chip includes 19,456 stream processors and 1,216 matrix cores spread across 304 compute units. With a peak engine clock of 2100 MHz, the MI325X delivers up to 2.61 PFLOPs of peak eight-bit precision (FP8) performance. For half-precision (FP16) operations, it reaches 1.3 PFLOPs.
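The quoted specifications are internally consistent, which a little arithmetic makes visible. The short Python sketch below takes only the figures listed above and assumes (our assumption, not AMD’s stated method) that the chip-wide counts divide evenly across the 304 compute units and that peak throughput equals compute units × clock × operations per unit per clock. The helper names are hypothetical and exist only for this illustration.

```python
# Figures quoted for the MI325X in the paragraph above.
COMPUTE_UNITS = 304
STREAM_PROCESSORS = 19_456
MATRIX_CORES = 1_216
PEAK_CLOCK_HZ = 2_100e6      # 2100 MHz peak engine clock
PEAK_FP8_FLOPS = 2.61e15     # 2.61 PFLOPs (FP8)
PEAK_FP16_FLOPS = 1.3e15     # 1.3 PFLOPs (FP16)


def per_cu(total: int) -> float:
    """Hypothetical helper: split a chip-wide count evenly across compute units."""
    return total / COMPUTE_UNITS


def implied_flops_per_cu_per_clock(peak_flops: float) -> float:
    """Hypothetical helper: back out per-unit, per-cycle throughput from a peak figure,
    assuming peak = compute units * clock * ops per unit per clock."""
    return peak_flops / (COMPUTE_UNITS * PEAK_CLOCK_HZ)


fp8_per_clock = implied_flops_per_cu_per_clock(PEAK_FP8_FLOPS)
fp16_per_clock = implied_flops_per_cu_per_clock(PEAK_FP16_FLOPS)

if __name__ == "__main__":
    print(f"Stream processors per compute unit: {per_cu(STREAM_PROCESSORS):.0f}")
    print(f"Matrix cores per compute unit:      {per_cu(MATRIX_CORES):.0f}")
    print(f"Implied FP8 ops per unit per clock:  {fp8_per_clock:,.0f}")
    print(f"Implied FP16 ops per unit per clock: {fp16_per_clock:,.0f}")
    print(f"FP8 / FP16 peak ratio: {PEAK_FP8_FLOPS / PEAK_FP16_FLOPS:.2f}")
```

Under these assumptions, each compute unit holds 64 stream processors and 4 matrix cores, and the FP8 peak works out to roughly twice the FP16 peak (about 4,000 versus about 2,000 operations per compute unit per clock). The small departures from round numbers are presumably due to rounding in the published PFLOPs figures.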
