Researcher feeds screen recordings into Gemini to extract accurate information with ease.

Recently, AI researcher Simon Willison wanted to add up his charges from using a cloud service, but the payment values and dates he needed were scattered across a dozen separate emails. Entering them by hand would have been tedious, so he turned to a technique he calls “video scraping,” which involves feeding a screen recording into an AI model similar to ChatGPT to extract data.

What he discovered seems simple on the surface, but the quality of the result has deeper implications for the future of AI assistants, which may soon be able to see and interact with what we’re doing on our computer screens.

“The other day I found myself needing to add up some numeric values that were scattered across twelve different emails,” Willison wrote in a detailed post on his blog. He recorded a 35-second video scrolling through the relevant emails, then fed that video into Google’s AI Studio tool, which lets people experiment with several versions of Google’s Gemini 1.5 Pro and Gemini 1.5 Flash AI models.

Willison then asked Gemini to pull the price data from the video and arrange it in a structured data format called JSON (JavaScript Object Notation) containing dates and dollar amounts. The AI model successfully extracted the data, which Willison then formatted as a CSV (comma-separated values) table for use in a spreadsheet. When he double-checked the output for errors as part of his experiment, he was surprised both by the accuracy of the results and by how little the video analysis cost to run.
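As a rough illustration of that last step, here is a minimal Python sketch that takes a JSON array like the one Gemini returned, writes it out as CSV, and totals the amounts. The field names `date` and `amount`, the helper `json_to_csv`, and the sample values are hypothetical placeholders, not Willison’s actual prompt, code, or data.

```python
import csv
import io
import json
from decimal import Decimal

# Hypothetical model output: a JSON array of {"date", "amount"} records.
# Field names and values are placeholders, not Willison's real charges.
MODEL_OUTPUT = """
[
  {"date": "2023-01-01", "amount": 2.95},
  {"date": "2023-02-01", "amount": 12.20},
  {"date": "2023-03-01", "amount": 1.40}
]
"""


def json_to_csv(json_text: str) -> tuple[str, Decimal]:
    """Convert a JSON array of charges to CSV text and return it with the total."""
    # parse_float=Decimal keeps dollar amounts exact instead of using binary floats.
    records = json.loads(json_text, parse_float=Decimal)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["date", "amount"])
    writer.writeheader()
    total = Decimal("0")
    for record in records:
        writer.writerow({"date": record["date"], "amount": record["amount"]})
        total += record["amount"]
    return buffer.getvalue(), total


if __name__ == "__main__":
    csv_text, total = json_to_csv(MODEL_OUTPUT)
    print(csv_text)
    print("Total:", total)
```

Asking the model for JSON rather than free text is what makes this step mechanical: once the output parses, converting it to CSV or summing it needs no further AI involvement, and it can be checked against the source emails.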

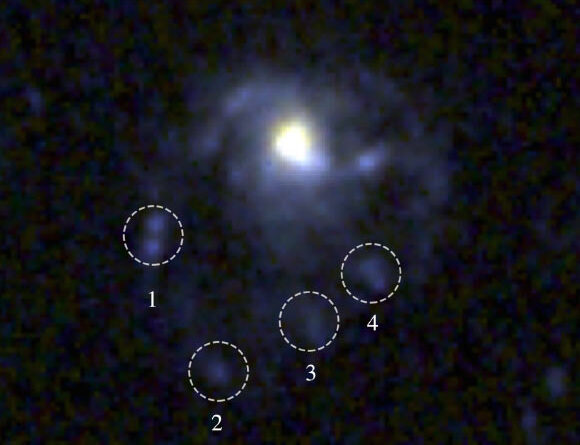

A screenshot of Simon Willison using Google Gemini to extract data from a screen capture video.

Credit: Simon Willison

“The cost [of running the video model] is so low that I had to re-run my calculations three times to make sure I hadn’t made a mistake,” he wrote. Willison says the entire video analysis would nominally have cost less than one-tenth of a cent, using just 11,018 tokens on the Gemini 1.5 Flash 002 model. In the end, he actually paid nothing, because Google AI Studio is currently free for some types of use.
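The arithmetic behind a sub-cent figure like this is simply token count multiplied by the per-token price. The sketch below assumes an input price of $0.075 per million tokens, roughly what Google listed for short Gemini 1.5 Flash prompts around the time of Willison’s experiment; treat that rate as an assumption and check current pricing. It also ignores output tokens, which are billed separately, and the function name `input_cost_usd` is hypothetical.

```python
from decimal import Decimal

# ASSUMPTION: illustrative price in US dollars per one million input tokens.
# Check Google's current Gemini pricing before relying on this figure.
ASSUMED_PRICE_PER_MILLION = Decimal("0.075")


def input_cost_usd(tokens: int, price_per_million: Decimal = ASSUMED_PRICE_PER_MILLION) -> Decimal:
    """Return the input-token cost in dollars (output tokens are not included)."""
    return Decimal(tokens) * price_per_million / Decimal(1_000_000)


if __name__ == "__main__":
    cost = input_cost_usd(11_018)
    print(f"Input cost: ${cost:.8f}")
    print("Under one-tenth of a cent:", cost < Decimal("0.001"))
```

Under that assumed rate, 11,018 input tokens come to well under $0.001, which is consistent with Willison’s “less than one-tenth of a cent” description.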

Video scraping is just one of many new techniques made possible because the latest large language models (LLMs), such as Google’s Gemini and GPT-4o, are actually “multimodal” models that accept audio, video, image, and text input. These models translate any multimedia input into tokens (chunks of data), which they use to predict which tokens should come next in a sequence.

A term like “token prediction model” (TPM) might be more accurate than “LLM” these days for AI models with multimodal inputs and outputs, but a generalized alternative term hasn’t really taken off. Whatever you call it, an AI model that can take video input has interesting implications, both good and potentially bad.

Breaking down input barriers

Willison is far from the first person to feed video into AI models to achieve interesting results (more on that below, and here’s a 2015 paper that uses the “video scraping” term), but as soon as Gemini launched its video input capability, he began experimenting with it in earnest.

In February, Willison demonstrated another early application of AI video scraping on his blog: he recorded a seven-second video of the books on his bookshelves, then had Gemini 1.5 Pro extract all of the book titles it saw in the video and put them in a structured, or organized, list.

Converting unstructured data into structured data matters to Willison because he’s also a data journalist. He has built tools for data journalists in the past, such as the Datasette project, which lets anyone publish data as an interactive website.

To the frustration of every data journalist, some sources of data resist scraping (capturing data for analysis) because of how the data is formatted, stored, or presented. In these cases, Willison delights in the potential of AI video scraping, because it bypasses these traditional barriers to data extraction.

“There’s no level of website authentication or anti-scraping technology that can stop me from recording a video of my screen while I manually click around inside a web application,” Willison noted on his blog. His technique works for any content visible on screen.

Video is the new text

An illustration of a cybernetic eyeball.

Credit: Getty Images

The ease and effectiveness of Willison’s technique reflect a notable shift now underway in how some users will interact with token prediction models. Rather than requiring a user to manually paste or type data into a chat dialog, or to describe every situation to a chatbot as text, some AI applications increasingly work with visual data captured directly from the screen. If you’re having trouble navigating a pizza website’s terrible interface, for example, an AI model could step in and perform the necessary mouse clicks to order the pizza for you.

Video scraping is already on the radar of every major AI lab, although they are not likely to call it that at the moment. Instead, tech companies typically refer to these techniques as “video understanding” or simply “vision.”

In May, OpenAI demonstrated a prototype version of its ChatGPT Mac app with an option that allowed ChatGPT to see and interact with what is on your screen, but that feature has not yet shipped. Earlier this month, Microsoft demonstrated a similar “Copilot Vision” prototype (based on OpenAI’s technology) that will be able to “watch” your screen and help you extract data and interact with the applications you’re running.

Despite these research previews, OpenAI’s ChatGPT and Anthropic’s Claude have not yet shipped a public video input feature for their models, possibly because processing the extra tokens from a “tokenized” video stream is relatively computationally expensive for them.

For the moment, Google is heavily subsidizing users’ AI costs with its war chest of Search revenue and a massive fleet of data centers (to be fair, OpenAI is subsidizing costs too, but with investor dollars and help from Microsoft). The cost of AI compute in general is dropping by the day, which will open up new capabilities of the technology to a broader user base over time.

Countering privacy concerns

As you might imagine, having an AI model see what you do on your computer screen can have downsides. For now, video scraping is great for Willison, who will undoubtedly use the captured data in positive and helpful ways. But it’s also a preview of a capability that could later be used to invade privacy or autonomously spy on computer users on a scale that was once impossible.

A different form of video scraping recently caused a massive wave of controversy for exactly that reason. Apps such as the third-party Rewind AI on the Mac and Microsoft’s Recall, which is being built into Windows 11, work by feeding on-screen video into an AI model that stores the extracted data in a database for later AI recall. That approach also raises potential privacy problems, because it records everything you do on your machine and puts it in a single place that could later be hacked.

To that point, although Willison’s technique currently involves uploading a video of his data to Google for processing, he is pleased that he can still decide what the AI model sees and when.

“The great thing about this video scraping technique is that it works with anything that you can see on your screen… and it puts you in total control of what you end up exposing to the AI model,” Willison explained in his post.

In the future, a locally run open-weights AI model might also be able to handle the same kind of video analysis without any cloud connection at all. Microsoft Recall already runs locally on supported devices, but it still demands a great deal of unearned trust. For now, Willison is perfectly content to selectively feed video data to AI models when the need arises.

“I expect I’ll be using this technique a whole lot more in the future,” he wrote, and perhaps many others will too, in different forms. If the past is any indication, Willison, who coined the term “prompt injection” in 2022, seems to always be a few steps ahead in exploring novel applications of AI tools. Right now, his attention is on the new implications of AI and video, and yours probably should be, too.

Benj Edwards is Ars Technica’s Senior AI Reporter and founder of the site’s dedicated AI beat in 2022. He’s also a widely cited tech historian. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.
