’Tis the season for a squeezin’

New research challenges the prevailing idea that AI needs massive datasets to solve problems.

A pair of Carnegie Mellon University researchers recently found hints that the process of compressing information can solve complex reasoning tasks without pre-training on a large number of examples. Their system tackles some types of abstract pattern-matching tasks using only the puzzles themselves, challenging conventional wisdom about how machine-learning systems acquire problem-solving abilities.

“Can lossless information compression by itself produce intelligent behavior?” ask Isaac Liao, a first-year PhD student, and his advisor, Professor Albert Gu, from CMU’s Machine Learning Department. Their work suggests the answer might be yes. To demonstrate, they created CompressARC and published the results in a comprehensive post on Liao’s website.

The pair tested their approach on the Abstraction and Reasoning Corpus (ARC-AGI), an unbeaten visual benchmark created in 2019 by machine-learning researcher François Chollet to test AI systems’ abstract reasoning skills. ARC presents systems with grid-based image puzzles, each of which provides several examples demonstrating an underlying rule; the system must infer that rule and apply it to a new example.
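To make the "infer a rule from examples, then apply it" setup concrete, here is a minimal Python sketch. The task dictionary mirrors the layout of the public ARC task files ("train" and "test" lists of input/output grid pairs, with grid cells as integers 0 to 9), but the grids themselves and the `mirror_left_right` rule are hypothetical toy values. Checking a hand-picked candidate rule like this is only an illustration of the task format; it is not how CompressARC works.

```python
# A toy task laid out like ARC's public JSON files: "train" and "test" lists of
# {"input": grid, "output": grid} pairs, where a grid is a list of rows of ints 0-9.
toy_task = {
    "train": [
        {"input": [[1, 0, 0], [2, 2, 0]], "output": [[0, 0, 1], [0, 2, 2]]},
        {"input": [[3, 4], [0, 5]], "output": [[4, 3], [5, 0]]},
    ],
    "test": [{"input": [[7, 0, 8]]}],
}


def mirror_left_right(grid):
    """Hypothetical candidate rule: reverse every row of the grid."""
    return [list(reversed(row)) for row in grid]


def fits_all_examples(rule, task):
    """True if the rule reproduces every demonstration output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


prediction = None
if fits_all_examples(mirror_left_right, toy_task):
    # The rule explains every example, so apply it to the unseen test input.
    prediction = mirror_left_right(toy_task["test"][0]["input"])

print(prediction)  # [[8, 0, 7]]
```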

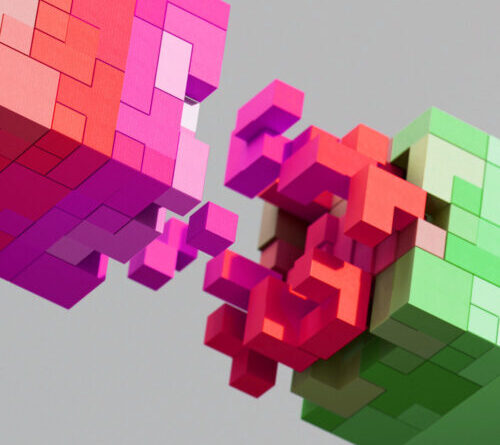

One ARC-AGI puzzle shows a grid with light-blue rows and columns dividing the space into boxes. The task requires figuring out which colors belong in which boxes based on their position: black for corners, magenta for the middle, and directional colors (red for up, blue for down, green for right, and yellow for left) for the remaining boxes. Here are three other example ARC-AGI puzzles, taken from Liao’s website:

Three example ARC-AGI benchmarking puzzles.

Credit: Isaac Liao/ Albert Gu
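The position-based rule from the light-blue-grid puzzle described in the first caption can be written as a small Python function. This sketch assumes the boxes form a 3-by-3 layout and uses color names rather than ARC's integer color codes; `box_color` is a hypothetical name chosen for illustration.

```python
def box_color(row, col, n=3):
    """Color for the box at (row, col) in an n-by-n layout of boxes (assumes n = 3)."""
    last = n - 1
    on_top_or_bottom = row in (0, last)
    on_left_or_right = col in (0, last)
    if on_top_or_bottom and on_left_or_right:
        return "black"    # corner boxes
    if row == 0:
        return "red"      # top edge ("up")
    if row == last:
        return "blue"     # bottom edge ("down")
    if col == last:
        return "green"    # right edge
    if col == 0:
        return "yellow"   # left edge
    return "magenta"      # the middle box


for r in range(3):
    print([box_color(r, c) for c in range(3)])
# ['black', 'red', 'black']
# ['yellow', 'magenta', 'green']
# ['black', 'blue', 'black']
```

Writing the rule down by hand is the easy part; the benchmark asks a system to discover it from a few examples alone.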

The puzzles test capabilities that some experts believe may be fundamental to general human-like reasoning (often called “AGI,” for artificial general intelligence). Those properties include understanding object persistence, goal-directed behavior, counting, and basic geometry without requiring specialized knowledge. The average human solves 76.2 percent of the ARC-AGI puzzles, while human experts reach 98.5 percent.

OpenAI made waves in December with its claim that its o3 simulated reasoning model earned a record-breaking score on the ARC-AGI benchmark. In testing with computational limits, o3 scored 75.7 percent, while in high-compute testing (essentially unlimited thinking time), it reached 87.5 percent, which OpenAI says is comparable to human performance.

CompressARC achieves 34.75 percent accuracy on the ARC-AGI training set (the collection of puzzles used to develop the system) and 20 percent on the evaluation set (a separate group of unseen puzzles used to test how well the approach generalizes to new problems). Each puzzle takes about 20 minutes to process on a consumer-grade RTX 4070 GPU, compared to top-performing methods that use heavy-duty data center-grade machines and what the researchers describe as “astronomical amounts of compute.”

Not your typical AI approach

CompressARC takes a completely different approach than most current AI systems. Instead of relying on pre-training (the process in which machine-learning models learn from massive datasets before tackling specific tasks), it works with no external training data whatsoever. The system trains itself in real time using only the specific puzzle it needs to solve.

“No pretraining; models are randomly initialized and trained during inference time. No dataset; one model trains on just the target ARC-AGI puzzle and outputs one answer,” the researchers write, describing their strict constraints.

When the researchers say “No search,” they’re referring to another common technique in AI problem-solving, in which systems try many possible solutions and select the best one. Search algorithms work by systematically exploring options, like a chess program evaluating thousands of possible moves, rather than directly learning a solution. CompressARC avoids this trial-and-error approach and relies solely on gradient descent, a mathematical technique that incrementally adjusts the network’s parameters to reduce errors, much as you might find the bottom of a valley by always walking downhill.
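The valley analogy maps directly onto a few lines of Python. This is a generic one-parameter illustration with a made-up loss function and step size, not CompressARC's training code: at each step the parameter moves a small distance opposite to the slope, so it walks downhill toward the lowest point instead of trying out candidate answers.

```python
def loss(w):
    return (w - 3.0) ** 2      # a toy "valley" whose lowest point is at w = 3


def grad(w):
    return 2.0 * (w - 3.0)     # slope of the valley at w


def gradient_descent(w, lr=0.1, steps=100):
    for _ in range(steps):
        w -= lr * grad(w)      # take a small step downhill
    return w


w_final = gradient_descent(0.0)
print(round(w_final, 4))  # 3.0
```

With this step size, each update shrinks the distance to the bottom by a factor of 0.8, so 100 steps land essentially at the minimum. A real network does the same thing across millions of parameters at once.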

The system’s core idea treats compression (finding the most efficient way to represent information by identifying patterns and regularities) as the driving force behind intelligence. CompressARC looks for the shortest possible description of a puzzle that, when unpacked, accurately reproduces the examples and the solution.
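A quick way to see why regularity and short descriptions go together is to hand two grids to a general-purpose compressor. The sketch below uses Python's standard `zlib` module purely as a stand-in for "description length" (CompressARC does not use zlib): a 30-by-30 checkerboard, which follows a simple rule, shrinks to a handful of bytes, while a grid of random colors stays large because there is no pattern to exploit.

```python
import random
import zlib


def compressed_size(grid):
    """Bytes zlib needs to store the grid (row-major, one byte per cell)."""
    raw = bytes(cell for row in grid for cell in row)
    return len(zlib.compress(raw, 9))


size = 30
checkerboard = [[(r + c) % 2 for c in range(size)] for r in range(size)]  # simple rule
rng = random.Random(0)
noisy = [[rng.randrange(10) for _ in range(size)] for _ in range(size)]  # no structure

# Both grids are 900 cells; only the structured one compresses well.
print(compressed_size(checkerboard), compressed_size(noisy))
```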

While CompressARC borrows some structural principles from transformers (like using a residual stream with representations that are operated upon), it’s a custom neural network architecture designed specifically for this compression task. It’s not based on an LLM or a standard transformer model.
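For readers unfamiliar with the term, a "residual stream" means each layer reads the current representation and adds its output back onto it, rather than replacing it. The NumPy sketch below shows only that generic pattern; the `layer` function (a linear map plus ReLU), the sizes, and the weights are hypothetical placeholders, not CompressARC's actual operations.

```python
import numpy as np


def layer(x, weight):
    """Hypothetical per-layer update: a linear map followed by ReLU."""
    return np.maximum(0.0, x @ weight)


def residual_stream(x, weights):
    """Each layer reads the stream and adds its output back onto it."""
    for w in weights:
        x = x + layer(x, w)
    return x


rng = np.random.default_rng(0)
x0 = rng.normal(size=(4, 8))  # 4 positions, 8 features each
weights = [rng.normal(scale=0.1, size=(8, 8)) for _ in range(3)]
out = residual_stream(x0, weights)
print(out.shape)  # (4, 8)
```

Because each layer only adds to the stream, a layer whose weights are all zero passes the representation through unchanged, which is one reason this design is easy to optimize.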

Unlike typical machine-learning methods, CompressARC uses its neural network only as a decoder. During encoding (the process of converting information into a compressed format), the system fine-tunes both the network’s internal settings and the data fed into it, gradually making small adjustments to minimize errors. This produces the most compressed representation that still correctly reproduces the known parts of the puzzle. These optimized parameters then become the compressed representation, storing the puzzle and its solution in an efficient format.
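The "decoder only, tune both the weights and the input" idea can be sketched with a toy NumPy model. Everything here is an assumption for illustration: the decoder is a single linear map `W` applied to an input code `z`, both start from random values (no pretraining), and an L2 penalty stands in crudely for description length. CompressARC's real architecture and objective are considerably more involved.

```python
import numpy as np


def fit_decoder(target, known, latent_dim=2, steps=2000, lr=0.05, penalty=1e-3, seed=0):
    """Jointly tune decoder weights W and decoder input z so that W @ z matches
    the known cells of `target`. The L2 penalty is a crude stand-in for
    description length, not CompressARC's actual objective."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(target.size, latent_dim))  # random init, no pretraining
    z = rng.normal(scale=0.1, size=latent_dim)
    losses = []
    for _ in range(steps):
        err = (W @ z - target) * known  # unknown cells contribute no error
        losses.append(np.sum(err ** 2) + penalty * (np.sum(W ** 2) + np.sum(z ** 2)))
        g = 2.0 * err                   # gradient of the loss with respect to W @ z
        grad_W = np.outer(g, z) + 2.0 * penalty * W
        grad_z = W.T @ g + 2.0 * penalty * z
        W -= lr * grad_W                # both the decoder and its input move downhill
        z -= lr * grad_z
    return W, z, losses


grid = np.array([1, 0, 1, 0, 1, 0, 1, 0, 1], dtype=float)   # flattened 3x3 checkerboard
known = np.array([1, 1, 1, 1, 1, 1, 1, 1, 0], dtype=float)  # last cell treated as unknown
W, z, losses = fit_decoder(grid, known)
print(losses[0] > losses[-1])  # True
```

In this toy the masked cell is simply unconstrained, so it cannot infer the missing value the way CompressARC infers a puzzle's answer; it only shows the mechanics of optimizing a decoder's weights and inputs to reproduce the known data.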

An animated GIF revealing the multi-step procedure of CompressARC resolving an ARC-AGI puzzle.

Credit: Isaac Liao

“The key challenge is to obtain this compact representation without needing the answers as inputs,” the researchers explain. The system essentially uses compression as a form of inference.

This approach could prove valuable in domains where large datasets don’t exist or where systems need to learn new tasks from minimal examples. The work suggests that some forms of intelligence might emerge not from memorizing patterns across vast datasets but from efficiently representing information in compact forms.

The compression-intelligence connection

The potential connection between compression and intelligence may sound strange at first glance, but it has deep theoretical roots in computer science concepts like Kolmogorov complexity (the length of the shortest program that produces a given output) and Solomonoff induction, a theoretical gold standard for prediction that is equivalent to an optimal compression algorithm.
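For reference, the standard textbook forms of these two ideas are below (they are general definitions, not taken from the CMU work). Here $U$ is a fixed universal machine (a monotone one in the second line), $\ell(p)$ is the length of program $p$ in bits, and $x_{1:n}$ is a sequence of $n$ symbols. $K_U(x)$ is uncomputable in general, which is why practical systems can only approximate it.

```latex
\begin{aligned}
K_U(x) &= \min\{\, \ell(p) : U(p) = x \,\} \\
M(x) &= \sum_{p \,:\, U(p) \text{ begins with } x} 2^{-\ell(p)} \\
M(x_{n+1} \mid x_{1:n}) &= \frac{M(x_{1:n}\, x_{n+1})}{M(x_{1:n})}
\end{aligned}
```

The last line is Solomonoff's predictor: the next symbol is most likely when it continues the sequence in a way that short programs produce.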

To compress information efficiently, a system must recognize patterns, find regularities, and “understand” the underlying structure of the data, abilities that mirror what many consider intelligent behavior. A system that can predict what comes next in a sequence can compress that sequence efficiently. As a result, some computer scientists over the years have suggested that compression may be equivalent to general intelligence. Based on these principles, the Hutter Prize has offered awards to researchers who can compress a specific 1GB file of English Wikipedia text to the smallest size.
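The prediction-compression link can be made concrete with a little arithmetic. A symbol that a model predicts with probability p ideally costs -log2(p) bits, and arithmetic coding comes close to that total in practice. The Python sketch below compares a predictor that assumes nothing with a simple learned one (an order-1 model with add-one smoothing, chosen here for illustration) on the toy sequence "abab…". The better predictor needs far fewer bits.

```python
import math
from collections import defaultdict


def uniform_code_length(seq, alphabet):
    """Bits needed if every symbol is equally likely (no prediction at all)."""
    return len(seq) * math.log2(len(alphabet))


def adaptive_code_length(seq, alphabet):
    """Ideal bits, sum of -log2 p, under an order-1 model learned online.
    Counts start at 1 (add-one smoothing) and are keyed by the previous symbol."""
    counts = defaultdict(lambda: {s: 1 for s in alphabet})
    bits, prev = 0.0, None
    for sym in seq:
        table = counts[prev]
        bits += -math.log2(table[sym] / sum(table.values()))  # cost of this symbol
        table[sym] += 1                                        # learn from what we saw
        prev = sym
    return bits


seq = "ab" * 50
print(uniform_code_length(seq, "ab"), round(adaptive_code_length(seq, "ab"), 1))  # 100.0 12.3
```

The learned model quickly becomes nearly certain that "b" follows "a" and "a" follows "b", so after a few symbols each one costs almost nothing. That is the sense in which good prediction and good compression are the same skill.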

We previously covered intelligence and compression in September 2023, when a DeepMind paper found that large language models can sometimes outperform specialized compression algorithms. In that study, researchers found that DeepMind’s Chinchilla 70B model could compress image patches to 43.4 percent of their original size (beating PNG’s 58.5 percent) and audio samples to just 16.4 percent (beating FLAC’s 30.3 percent).

That 2023 research suggested a deep connection between compression and intelligence: the idea that truly understanding patterns in data enables more efficient compression, which aligns with this new CMU research. While DeepMind demonstrated compression capabilities in an already-trained model, Liao and Gu’s work takes a different approach by showing that the compression process can produce intelligent behavior from scratch.

This new research matters because it challenges the prevailing wisdom in AI development, which typically relies on massive pre-training datasets and computationally expensive models. While leading AI companies push toward ever-larger models trained on ever-larger datasets, CompressARC suggests that intelligence can emerge from a fundamentally different principle.

“CompressARC’s intelligence emerges not from pretraining, vast datasets, exhaustive search, or massive compute—but from compression,” the scientists conclude. “We challenge the conventional reliance on extensive pretraining and data and propose a future where tailored compressive objectives and efficient inference-time computation work together to extract deep intelligence from minimal input.”

Limitations and looking ahead

Despite its successes, Liao and Gu’s system comes with clear limitations that may prompt skepticism. While it successfully solves puzzles involving color assignments, infilling, cropping, and identifying adjacent pixels, it struggles with tasks requiring counting, long-range pattern recognition, rotations, reflections, or simulating agent behavior. These limitations highlight areas where simple compression principles may not be sufficient.

The research has not been peer-reviewed, and the 20 percent accuracy on unseen puzzles, though notable without pre-training, falls significantly below both human performance and top AI systems. Critics might argue that CompressARC could be exploiting specific structural patterns in the ARC puzzles that may not generalize to other domains, and question whether compression alone can serve as a foundation for broader intelligence rather than being just one component among many required for robust reasoning.

And yet, as AI development continues its rapid advance, if CompressARC holds up to further scrutiny, it offers a glimpse of a possible alternative path toward useful intelligent behavior without the resource demands of today’s dominant approaches. Or, at the very least, it might help unlock an important and still poorly understood component of general intelligence in machines.

Benj Edwards is Ars Technica’s Senior AI Reporter and founder of the site’s dedicated AI beat in 2022. He’s also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.
