In pixels we trust

The C2PA system aims to give context to search results, but trust problems run much deeper than AI technology.

Sep 17, 2024 8:07 pm UTC

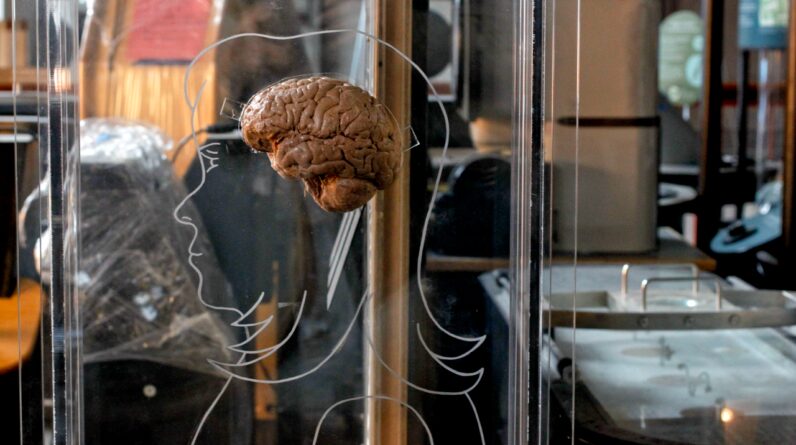

Under C2PA, this stock image would be labeled as a real photograph if the camera used to take it, and the toolchain used to retouch it, supported C2PA. But even as a real photo, does it actually represent reality, and is there a technological solution to that problem?

On Tuesday, Google announced plans to implement content authentication technology across its products to help users distinguish between human-created and AI-generated images. Over the coming months, the tech giant will integrate the Coalition for Content Provenance and Authenticity (C2PA) standard, a system designed to track the origin and editing history of digital content, into its search, ads, and potentially YouTube services. It remains an open question whether a technological solution can address the ancient social problem of trust in recorded media produced by strangers.

A group of tech companies began creating the C2PA system in 2019 in an attempt to combat misleading, realistic synthetic media online. As AI-generated content becomes more prevalent and realistic, experts have worried that it may be difficult for users to determine the authenticity of the images they encounter. The C2PA standard creates a digital trail for content, backed by an online signing authority, that includes metadata about where images originate and how they’ve been modified.

Google will incorporate the C2PA standard into its search results, allowing users to see whether an image was created or edited using AI tools. The tech giant’s “About this image” feature in Google Search, Lens, and Circle to Search will display this information when available.

In a blog post, Laurie Richardson, Google’s vice president of trust and safety, acknowledged the complexities of establishing content provenance across platforms. She stated, “Establishing and signaling content provenance remains a complex challenge, with a range of considerations based on the product or service. And while we know there’s no silver bullet solution for all content online, working with others in the industry is critical to create sustainable and interoperable solutions.”

The company plans to use the C2PA’s latest technical standard, version 2.1, which reportedly offers improved security against tampering attacks. Its use will extend beyond search, since Google intends to incorporate C2PA metadata into its ad systems as a way to “enforce key policies.” YouTube may also see integration of C2PA information for camera-captured content in the future.

Google says the new initiative aligns with its other efforts toward AI transparency, including the development of SynthID, an embedded watermarking technology created by Google DeepMind.

Widespread C2PA efficacy remains a dream

Despite having a history that reaches back at least five years now, the road to useful content provenance technology like C2PA is steep. The technology is entirely voluntary, and key authenticating metadata can easily be stripped from images once added.

AI image generators would need to support the standard for C2PA information to be included in each generated file, which will likely rule out open source image synthesis models like Flux. So perhaps, in practice, more “authentic,” camera-authored media will be labeled with C2PA than AI-generated images.

Beyond that, maintaining the metadata requires a complete toolchain that supports C2PA every step along the way, including at the source and in any software used to edit or retouch the images. Currently, only a handful of camera manufacturers, such as Leica, support the C2PA standard. Nikon and Canon have pledged to adopt it, but The Verge reports that there’s still uncertainty about whether Apple and Google will implement C2PA support in their smartphone devices.

Adobe’s Photoshop and Lightroom can add and maintain C2PA data, but many other popular editing tools do not yet offer the capability. It only takes one non-compliant image editor in the chain to break the full usefulness of C2PA. And the general lack of standardized viewing methods for C2PA data across online platforms presents another obstacle to making the standard useful for everyday users.
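To make the chain-breaking problem concrete, here is a minimal toy sketch in Python. It is not the C2PA format or any real C2PA library: the record fields, the `sign_step` and `verify` helpers, and the shared HMAC key (standing in for certificate-based signing) are all assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical shared key standing in for a real signing certificate.
SIGNING_KEY = b"demo-key-not-a-real-certificate"


def sign_step(image: bytes, manifest: list, tool: str, action: str) -> list:
    """Return a new manifest with a signed record tying this step to the image bytes."""
    digest = hashlib.sha256(image).hexdigest()
    prev = manifest[-1]["signature"] if manifest else ""
    payload = f"{tool}|{action}|{digest}|{prev}".encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    record = {"tool": tool, "action": action, "digest": digest,
              "prev": prev, "signature": signature}
    return manifest + [record]


def verify(image: bytes, manifest: list) -> bool:
    """Check every signature in order, then check the last record matches the image."""
    if not manifest:
        return False  # metadata stripped: nothing to verify
    prev = ""
    for record in manifest:
        payload = f"{record['tool']}|{record['action']}|{record['digest']}|{prev}".encode()
        expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
        if record["prev"] != prev or not hmac.compare_digest(expected, record["signature"]):
            return False
        prev = record["signature"]
    return manifest[-1]["digest"] == hashlib.sha256(image).hexdigest()


# A camera and a compliant editor each add a signed record.
photo = b"raw sensor data"
manifest = sign_step(photo, [], "camera", "captured")
retouched = photo + b" +contrast"
manifest = sign_step(retouched, manifest, "compliant editor", "edited")
compliant_ok = verify(retouched, manifest)

# A non-compliant editor changes the bytes but adds no record.
cropped = retouched[:10]
broken_ok = verify(cropped, manifest)

# A tool that strips the metadata leaves nothing to check.
stripped_ok = verify(retouched, [])
```

In this toy model, the image verifies only while every tool that touches it also updates the signed record. One silent edit, or one tool that drops the metadata, and the file can no longer be verified, even if it is a perfectly genuine photo.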

Currently, C2PA could arguably be seen as a technological solution to a problem that extends beyond technology. As historians and journalists have found for centuries, the veracity of information does not inherently come from the mechanism used to record it into a fixed medium. It comes from the credibility of the source.

In that sense, C2PA may become one of many tools used to authenticate content by determining whether the information came from a credible source, provided the C2PA metadata is preserved, but it is unlikely to be a complete solution to AI-generated misinformation on its own.
