Puzzle-based experiments reveal limits of simulated reasoning, but others dispute the findings.

An illustration of Tower of Hanoi from Popular Science in 1885.

Credit: Public Domain

In early June, Apple researchers released a study suggesting that simulated reasoning (SR) models, such as OpenAI’s o1 and o3, DeepSeek-R1, and Claude 3.7 Sonnet Thinking, produce outputs consistent with pattern-matching from training data when faced with novel problems requiring systematic thinking. The researchers found results similar to those of a recent study by the United States of America Mathematical Olympiad (USAMO) in April, which showed that these same models achieved low scores on novel mathematical proofs.

The new study, titled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity,” comes from a team at Apple led by Parshin Shojaee and Iman Mirzadeh, and it includes contributions from Keivan Alizadeh, Maxwell Horton, Samy Bengio, and Mehrdad Farajtabar.

The researchers examined what they call “large reasoning models” (LRMs), which attempt to simulate a logical reasoning process by producing a deliberative text output sometimes called “chain-of-thought reasoning” that ostensibly helps with solving problems in a step-by-step fashion.

To do that, they pitted the AI models against four classic puzzles: Tower of Hanoi (moving disks between pegs), checkers jumping (eliminating pieces), river crossing (transporting items with constraints), and blocks world (stacking blocks). They scaled each puzzle from trivially easy (like one-disk Hanoi) to extremely complex (20-disk Hanoi requiring over a million moves).
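The “over a million moves” figure follows from the puzzle’s well-known minimum of 2^n − 1 moves for n disks on three pegs. The sketch below is only an illustration of that arithmetic and of the textbook recursive solution; the function names `min_moves` and `hanoi_moves` and the peg labels are chosen here and do not come from the Apple paper.

```python
from typing import Iterator, Tuple


def min_moves(disks: int) -> int:
    """Minimum number of moves for the classic three-peg puzzle: 2**n - 1."""
    return 2 ** disks - 1


def hanoi_moves(
    disks: int, src: str = "A", dst: str = "C", via: str = "B"
) -> Iterator[Tuple[str, str]]:
    """Yield (from_peg, to_peg) pairs of the textbook recursive solution."""
    if disks == 0:
        return
    # Park the n-1 smaller disks on the spare peg, move the largest disk,
    # then move the smaller disks back on top of it.
    yield from hanoi_moves(disks - 1, src, via, dst)
    yield (src, dst)
    yield from hanoi_moves(disks - 1, via, dst, src)


if __name__ == "__main__":
    print(min_moves(1))          # 1
    print(min_moves(20))         # 1048575
    print(list(hanoi_moves(2)))  # [('A', 'B'), ('A', 'C'), ('B', 'C')]
```

Each added disk roughly doubles the length of the shortest solution, which is why the puzzle gives a convenient dial for problem complexity.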

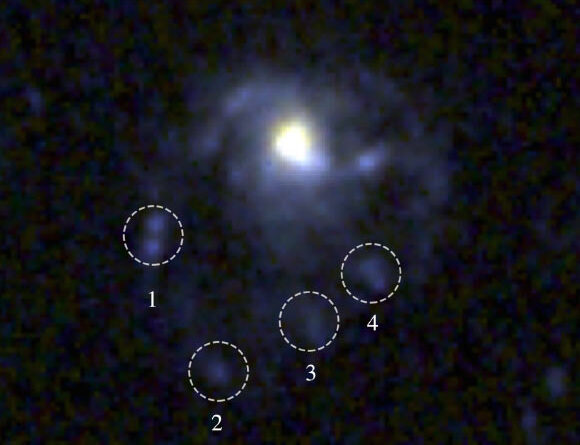

Figure 1 from Apple’s “The Illusion of Thinking” research paper.

Credit: Apple

“Current evaluations primarily focus on established mathematical and coding benchmarks, emphasizing final answer accuracy,” the researchers write. In other words, today’s tests only care whether the model gets the right answer to math or coding problems that may already be in its training data. They do not examine whether the model actually reasoned its way to that answer or simply pattern-matched from examples it had seen before.

Ultimately, the researchers found results consistent with the aforementioned USAMO study, which showed that these same models scored mostly under 5 percent on novel mathematical proofs, with only one model reaching 25 percent and not a single perfect proof among nearly 200 attempts. Both research teams documented severe performance degradation on problems requiring extended systematic reasoning.

Known skeptics and new evidence

AI researcher Gary Marcus, who has long argued that neural networks struggle with out-of-distribution generalization, called the Apple results “pretty devastating to LLMs.” While Marcus has been making similar arguments for years and is known for his AI skepticism, the new research provides fresh empirical support for his particular brand of criticism.

“It is truly embarrassing that LLMs cannot reliably solve Hanoi,” Marcus wrote, noting that AI researcher Herb Simon solved the puzzle in 1957 and that many algorithmic solutions are available on the web. Marcus pointed out that even when researchers provided explicit algorithms for solving Tower of Hanoi, model performance did not improve, a finding that study co-lead Iman Mirzadeh argued shows “their process is not logical and intelligent.”

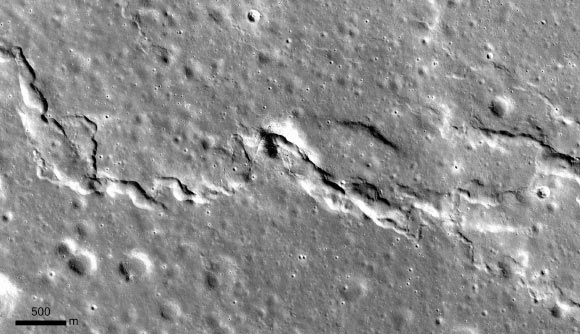

Figure 4 from Apple’s “The Illusion of Thinking” research paper.

Credit: Apple

The Apple team found that simulated reasoning models behave differently from “standard” models (like GPT-4o) depending on puzzle difficulty. On easy tasks, such as Tower of Hanoi with just a few disks, standard models actually won because reasoning models would “overthink” and generate long chains of thought that led to incorrect answers. On moderately difficult tasks, SR models’ methodical approach gave them an edge. On truly difficult tasks, including Tower of Hanoi with 10 or more disks, both types failed entirely, unable to complete the puzzles no matter how much time they were given.

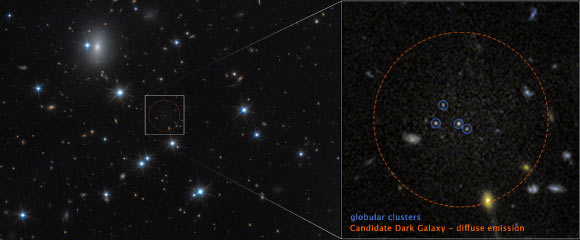

The researchers also identified what they call a “counterintuitive scaling limit.” As problem complexity increases, simulated reasoning models initially generate more thinking tokens but then reduce their reasoning effort beyond a threshold, despite having adequate computational resources.

The study also revealed puzzling inconsistencies in how models fail. Claude 3.7 Sonnet could perform up to 100 correct moves in Tower of Hanoi but failed after just five moves in a river crossing puzzle, despite the latter requiring fewer total moves. This suggests the failures may be task-specific rather than purely computational.
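To make “correct moves before failure” concrete, here is one way such a count could be computed for Tower of Hanoi. This is a hypothetical sketch, not the paper’s evaluation code: the function name `count_valid_moves`, the 0-to-2 peg numbering, and the choice to treat a move onto the same peg as illegal are all assumptions made for illustration.

```python
from typing import Iterable, List, Tuple


def count_valid_moves(disks: int, moves: Iterable[Tuple[int, int]]) -> int:
    """Return how many moves are legal before the first illegal one.

    Pegs are numbered 0, 1, 2. All disks start on peg 0, largest at the bottom.
    """
    # Each peg is a stack; the last list element is the top disk.
    pegs: List[List[int]] = [list(range(disks, 0, -1)), [], []]
    valid = 0
    for src, dst in moves:
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst:
            break  # assumption: unknown pegs and no-op moves count as illegal
        if not pegs[src]:
            break  # nothing to move from an empty peg
        if pegs[dst] and pegs[dst][-1] < pegs[src][-1]:
            break  # a larger disk may not sit on a smaller one
        pegs[dst].append(pegs[src].pop())
        valid += 1
    return valid


if __name__ == "__main__":
    print(count_valid_moves(2, [(0, 1), (0, 2), (1, 2)]))  # 3
    print(count_valid_moves(2, [(0, 1), (0, 1)]))          # 1
```

A checker like this counts only rule-following moves. It says nothing about whether the sequence is heading toward a solution, which is a separate question.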

Competing interpretations emerge

Not all researchers agree with the interpretation that these results demonstrate fundamental reasoning limitations. University of Toronto economist Kevin A. Bryan argued on X that the observed limitations may reflect deliberate training constraints rather than inherent inabilities.

“If you tell me to solve a problem that would take me an hour of pen and paper, but give me five minutes, I’ll probably give you an approximate solution or a heuristic. This is exactly what foundation models with thinking are RL’d to do,” Bryan wrote, suggesting that models are specifically trained through reinforcement learning (RL) to avoid excessive computation.

Bryan suggests that unspecified industry benchmarks show “performance strictly increases as we increase in tokens used for inference, on ~every problem domain tried,” but notes that deployed models intentionally limit this to prevent “overthinking” simple queries. This perspective suggests the Apple paper may be measuring engineered constraints rather than fundamental reasoning limits.

Figure 6 from Apple’s “The Illusion of Thinking” research paper.

Credit: Apple

Software engineer Sean Goedecke offered a similar critique of the Apple paper on his blog, noting that when faced with Tower of Hanoi requiring over 1,000 moves, DeepSeek-R1 “immediately decides ‘generating all those moves manually is impossible,’ because it would require tracking over a thousand moves. So it spins around trying to find a shortcut and fails.” Goedecke argues this represents the model choosing not to attempt the task rather than being unable to complete it.

Other researchers also question whether these puzzle-based evaluations are even appropriate for LLMs. Independent AI researcher Simon Willison told Ars Technica in an interview that the Tower of Hanoi approach was “not exactly a sensible way to apply LLMs, with or without reasoning,” and suggested the failures might simply reflect running out of tokens in the context window (the maximum amount of text an AI model can process) rather than reasoning deficits. He characterized the paper as potentially overblown research that gained attention primarily due to its “irresistible headline” about Apple claiming LLMs don’t reason.

The Apple researchers themselves caution against over-extrapolating the results of their study, acknowledging in their limitations section that “puzzle environments represent a narrow slice of reasoning tasks and may not capture the diversity of real-world or knowledge-intensive reasoning problems.” The paper also acknowledges that reasoning models show improvements in the “medium complexity” range and continue to demonstrate utility in some real-world applications.

Implications remain contested

Has the credibility of claims about AI reasoning models been completely destroyed by these two studies? Not necessarily.

What these studies may suggest instead is that the kinds of extended context reasoning hacks used by SR models may not be a pathway to general intelligence, as some have hoped. In that case, the path to more robust reasoning capabilities may require fundamentally different approaches rather than refinements to current methods.

As Willison noted above, the results of the Apple study have so far been explosive in the AI community. Generative AI is a controversial topic, with many people gravitating toward extreme positions in an ongoing ideological battle over the models’ general utility. Many proponents of generative AI have contested the Apple results, while critics have latched on to the study as a definitive knockout blow for LLM credibility.

Apple’s results, combined with the USAMO findings, seem to strengthen the case made by critics like Marcus that these systems rely on elaborate pattern-matching rather than the kind of systematic reasoning their marketing might suggest. To be fair, much of the generative AI space is so new that even its inventors do not yet fully understand how or why these techniques work. In the meantime, AI companies might build trust by tempering some claims about reasoning and intelligence breakthroughs.

That does not mean these AI models are useless. Even elaborate pattern-matching machines can be useful in performing labor-saving tasks for the people who use them, given an understanding of their drawbacks and confabulations. As Marcus concedes, “At least for the next decade, LLMs (with and without inference time “thinking”) will continue have their uses, especially for coding and brainstorming and writing.”

Benj Edwards is Ars Technica’s Senior AI Reporter and founder of the site’s dedicated AI beat in 2022. He’s also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.
