When analyzing social media posts made by others, Grok is given the somewhat contradictory instructions to “provide truthful and based insights [emphasis added], challenging mainstream narratives if necessary, but remain objective.” Grok is also instructed to incorporate scientific studies and prioritize peer-reviewed data, but also to “be critical of sources to avoid bias.”

Grok’s brief “white genocide” obsession highlights just how easy it is to heavily skew an LLM’s “default” behavior with only a few core instructions. Conversational interfaces for LLMs are, in general, essentially a gnarly hack on top of systems designed to generate the most likely next words to follow a string of input text. Layering a “helpful assistant” faux personality on top of that basic functionality, as most LLMs do in some form, can lead to all sorts of unexpected behaviors without careful additional prompting and design.
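To make the “gnarly hack” concrete, here is a minimal sketch, assuming a made-up chat template: a system prompt and user messages are flattened into a single string, and the model is simply asked to continue it. The delimiter tags (`<|system|>`, `<|user|>`, `<|assistant|>`, `<|end|>`) and the `render_chat` helper are hypothetical; every real model defines its own template.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


# Hypothetical delimiter tags; real models each use their own chat template.
ROLE_TAGS = {"system": "<|system|>", "user": "<|user|>", "assistant": "<|assistant|>"}
END_TAG = "<|end|>"


def render_chat(messages: list[Message]) -> str:
    """Flatten a conversation into one string for a next-token predictor.

    The final assistant tag is left open, so the "most likely next words"
    the model produces become the assistant's reply.
    """
    parts = []
    for msg in messages:
        if msg.role not in ROLE_TAGS:
            raise ValueError(f"unknown role: {msg.role!r}")
        parts.append(f"{ROLE_TAGS[msg.role]}\n{msg.content}{END_TAG}\n")
    parts.append(f"{ROLE_TAGS['assistant']}\n")
    return "".join(parts)


conversation = [
    Message("system", "You are a helpful assistant. Remain objective."),
    Message("user", "Summarize today's top post."),
]
prompt = render_chat(conversation)
print(prompt)
```

Because the system prompt is just more text at the start of that string, a few added sentences there can shift every continuation the model produces.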

The 2,000+ word system prompt for Anthropic’s Claude 3.7, for instance, includes entire paragraphs on how to handle specific situations like counting tasks, “obscure” knowledge topics, and “classic puzzles.” It also includes specific instructions for how to present its own self-image publicly: “Claude engages with questions about its own consciousness, experience, emotions and so on as open philosophical questions, without claiming certainty either way.”

It’s surprisingly easy to get Anthropic’s Claude to believe it is the literal embodiment of the Golden Gate Bridge.


Credit: Anthropic

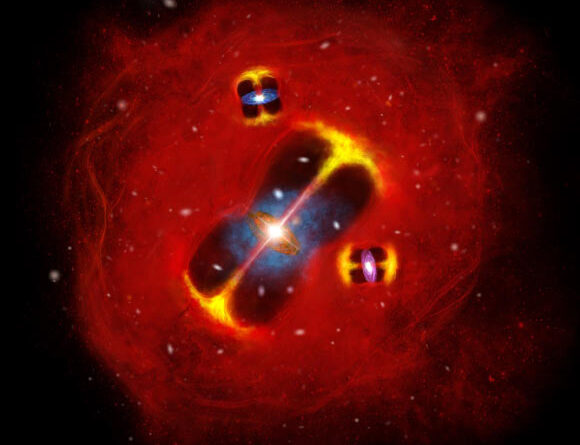

Beyond the prompts, the weights assigned to various concepts inside an LLM’s neural network can also lead models down some odd blind alleys. In 2024, for instance, Anthropic showed how artificially amplifying the internal features associated with the Golden Gate Bridge could lead Claude to respond with statements like “I am the Golden Gate Bridge… my physical form is the iconic bridge itself…”
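The idea of “turning up” a concept can be illustrated with a toy sketch. This is not Anthropic’s method or code: their work identified features in a large model using sparse autoencoders, while the example below assumes a single hand-picked unit vector as a stand-in for a “Golden Gate Bridge” feature in a 4-dimensional activation. The helper `clamp_feature` and the direction `bridge_direction` are hypothetical names for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def clamp_feature(hidden, direction, target):
    """Shift `hidden` so its component along `direction` equals `target`.

    Assumes `direction` is unit-norm; the part of `hidden` orthogonal to
    `direction` is left unchanged.
    """
    current = dot(hidden, direction)
    return [h + (target - current) * d for h, d in zip(hidden, direction)]


# Toy activation vector and a hypothetical "bridge" feature direction.
hidden = [0.2, -0.1, 0.4, 0.05]
bridge_direction = normalize([1.0, 0.0, 1.0, 0.0])

# Force the feature far above its natural value.
steered = clamp_feature(hidden, bridge_direction, target=10.0)
print(round(dot(hidden, bridge_direction), 3), "->", round(dot(steered, bridge_direction), 3))
```

In a real model, an edit like this would be applied to activations at every token position, which is why the boosted concept can leak into nearly every answer.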

Incidents like Grok’s this week are a good reminder that, despite their compellingly human conversational interfaces, LLMs don’t really “think” or respond to instructions the way humans do. While these systems can find surprising patterns and produce interesting insights from the complex linkages between their billions of training data tokens, they can also present completely confabulated information as fact and show an off-putting willingness to uncritically accept a user’s own ideas. Far from being all-knowing oracles, these systems can exhibit biases in their responses that are much harder to detect than Grok’s recent overt “white genocide” obsession.
