The slithy toves did gyre and gimble in the wabe

Nonsensical jabberwocky movements created by OpenAI’s Sora are typical for current AI-generated video, and here’s why.

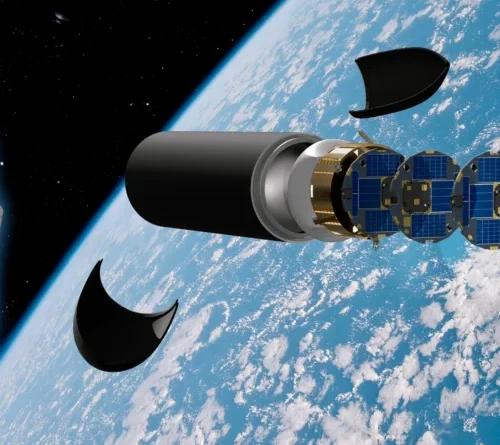

A still image from an AI-generated video of an ever-morphing artificial gymnast.

Credit: OpenAI / Deedy

On Wednesday, a video from OpenAI’s newly launched Sora AI video generator went viral on social media. It features a gymnast who sprouts extra limbs and briefly loses her head during what appears to be an Olympic-style floor routine.

As it turns out, the nonsensical synthesis errors in the video, which we like to call “jabberwockies,” hint at technical details about how AI video generators work and how they might improve in the future.

Before we dig into the details, let’s take a look at the video.

An AI-generated video of an impossible gymnast, created with OpenAI Sora.

In the video, we see what looks like a floor gymnastics routine. The subject of the video flips and flails as new legs and arms rapidly and fluidly emerge from and morph out of her twirling, shifting body. At one point, about 9 seconds in, she loses her head, and it spontaneously reattaches to her body.

“As cool as the new Sora is, gymnastics is still very much the Turing test for AI video,” wrote investor Deedy Das when he originally shared the video on X. The video inspired plenty of reaction jokes, such as this reply to a similar post on Bluesky: “hi, gymnastics expert here! this is not funny, gymnasts only do this when they’re in extreme distress.”

We reached out to Das, and he confirmed that he generated the video using Sora. He also provided the prompt, which was long, split into four parts, and written by Anthropic’s Claude, with complex instructions like “The gymnast initiates from the back right corner, taking position with her right foot pointed behind in B-plus stance.”

“I’ve known for the last 6 months having played with text to video models that they struggle with complex physics movements like gymnastics,” Das told us in an interview. “I had to try it [in Sora] because the character consistency seemed improved. Overall, it was an improvement because previously… the gymnast would just teleport away or change their outfit mid flip, but overall it still looks downright horrifying. We hoped AI video would learn physics by default, but that hasn’t happened yet!”

What went wrong?

When examining how the video fails, you must first consider how Sora “knows” how to create anything that resembles a gymnastics routine. During the training phase, when the Sora model was created, OpenAI fed example videos of gymnastics routines (among many other kinds of videos) into a specialized neural network that associates the progression of images with text-based descriptions of them.

That training is a distinct phase that happens once, before the model’s release. Later, when the finished model is running and you give a video-synthesis model like Sora a written prompt, it draws on statistical associations between words and images to produce a predictive output. It’s continuously making next-frame predictions based on the last frame of the video. But Sora has another trick for attempting to preserve coherency over time. “By giving the model foresight of many frames at a time,” reads OpenAI’s Sora System Card, “we’ve solved a challenging problem of making sure a subject stays the same even when it goes out of view temporarily.”
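To make the System Card’s point concrete, here’s a toy analogy in Python. It is not Sora’s actual mechanism: OpenAI hasn’t published code, and Sora works on many frames together rather than keeping a simple look-back buffer like this one. The hypothetical `next_color` function stands in for a generator deciding what a subject looks like when it comes back into view. With a context of only one frame, it has nothing to go on once the subject has left the shot and must guess. With a wider context, it can recover what the subject looked like before.

```python
from typing import Optional


def next_color(history: list[Optional[str]], window: int, fallback: str) -> str:
    """Toy stand-in (hypothetical) for a video model deciding a subject's look on reappearance.

    `history` holds one entry per frame: the subject's color, or None when the
    subject is out of view. The "model" may only consult the last `window` frames.
    """
    for color in reversed(history[-window:]):
        if color is not None:
            return color  # most recent frame in which the subject was visible
    return fallback  # subject not seen within the context: the model has to guess


# The subject (in a red leotard) is visible for two frames, then hidden for three.
history = ["red", "red", None, None, None]

print(next_color(history, window=1, fallback="blue"))  # blue: identity lost
print(next_color(history, window=8, fallback="blue"))  # red: identity kept
```

The only variable here is how many frames the predictor can see at once. This toy treats that as the whole story, and real models are far messier.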

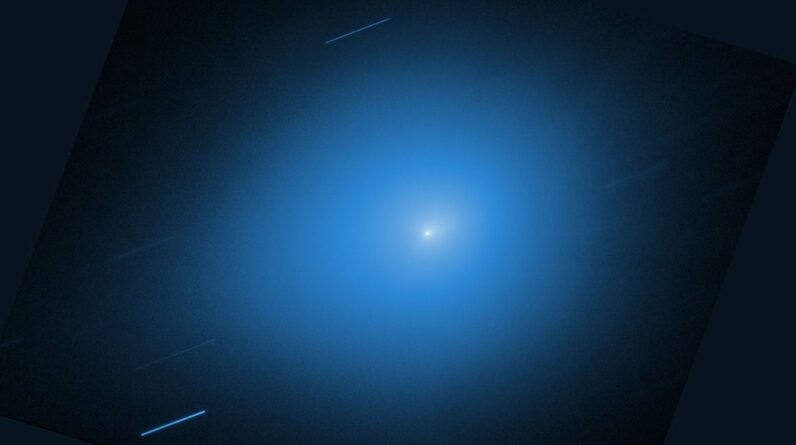

A still image from the moment the AI-generated gymnast loses her head. It soon reattaches to her body.

Credit: OpenAI / Deedy

Perhaps not quite solved. In this case, rapidly moving limbs pose a particular challenge when the model tries to predict the next frame correctly. The result is an incoherent amalgam of gymnastics footage showing the same gymnast performing running flips and spins. Sora doesn’t know the correct order in which to assemble these moves because it’s drawing on statistical averages of wildly different body movements. Its training data of gymnastics videos was relatively limited and likely did not describe the movements with limb-level precision in its metadata.

Sora doesn’t know anything about physics or how the human body should work, either. It’s drawing on statistical associations between pixels in the videos of its training dataset to predict the next frame, with a little bit of look-ahead to keep things more consistent.
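As a rough illustration of how averaging across very different training examples can conjure extra limbs, consider this toy Python sketch. It is not how Sora or any production video model computes frames. Diffusion-based generators sample outputs rather than literally averaging pixels. The 1-D “frames” and the `blend_next_frames` and `count_limbs` helpers are hypothetical. In the sketch, two training clips start from the same pose, but the arm swings in opposite directions. A predictor that simply averages what followed in each clip outputs a frame with two faint arms instead of one.

```python
def blend_next_frames(candidates: list[list[float]]) -> list[float]:
    """Average candidate next frames pixel by pixel (a toy 'statistical average')."""
    n = len(candidates)
    return [sum(pixels) / n for pixels in zip(*candidates)]


def count_limbs(frame: list[float], threshold: float = 0.0) -> int:
    """Count contiguous runs of pixels brighter than `threshold` (one run = one limb)."""
    limbs = 0
    inside = False
    for value in frame:
        if value > threshold and not inside:
            limbs += 1  # entering a new bright run
        inside = value > threshold
    return limbs


# 1-D frames of 8 pixels; 1.0 marks where the gymnast's single arm is.
swing_left = [0, 0, 1, 0, 0, 0, 0, 0]   # what followed in hypothetical training clip A
swing_right = [0, 0, 0, 0, 0, 0, 1, 0]  # what followed in hypothetical training clip B

blended = blend_next_frames([swing_left, swing_right])
print(blended)                  # [0.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.5, 0.0]
print(count_limbs(swing_left))  # 1
print(count_limbs(blended))     # 2: a ghostly extra arm
```

Neither training clip contains a two-armed pose at that position. The extra limb is purely an artifact of blending incompatible motions.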

This problem is not unique to Sora. All AI video generators can produce wildly nonsensical results when your prompts reach too far beyond their training data, as we saw earlier this year when testing Runway’s Gen-3. We ran some gymnast prompts through Hunyuan Video, the latest open source AI video model that may rival Sora in some ways, and it produced similar twirling, morphing results, seen below. And we used a much simpler prompt than the one Das used with Sora.

An example from the open source Chinese AI model Hunyuan Video with the prompt, “A young woman doing a complex floor gymnastics routine at the olympics, featuring running and flips.”

AI models based on transformer technology are fundamentally imitative in nature. They’re great at transforming one type of data into another or morphing one style into another. What they’re not great at (yet) is producing coherent generations that are truly original. If you happen to provide a prompt that closely matches a training video, you might get a good result. Otherwise, you may get madness.

As we wrote about the image-synthesis model Stable Diffusion 3’s body horror generations earlier this year, “Basically, any time a user prompt homes in on a concept that isn’t represented well in the AI model’s training dataset, the image-synthesis model will confabulate its best interpretation of what the user is asking for. And sometimes that can be completely terrifying.”

For the engineers who build these models, success in AI video generation quickly becomes a question of how many examples (and how much training) the model needs before it can generalize well enough to produce convincing, coherent results. It’s also a question of metadata quality: how accurately the videos are labeled. In this case, OpenAI used an AI vision model to describe its training videos, which helped improve quality, but apparently not enough yet.

We’re looking at an AI jabberwocky in action

In a way, the type of generation failure in the gymnast video is a form of confabulation (or hallucination, as some call it), but it’s worse because it’s not coherent. So instead of calling it a confabulation, which is a plausible-sounding fabrication, we’re going to lean on a new term, “jabberwocky,” which Dictionary.com defines as “a playful imitation of language consisting of invented, meaningless words; nonsense; gibberish,” taken from Lewis Carroll’s nonsense poem of the same name. Imitation and nonsense, you say? Check and check.

We’ve covered jabberwockies in AI video before, with people mocking Chinese video-synthesis models, a monstrously weird AI beer commercial, and even Will Smith eating spaghetti. They’re a form of misconfabulation in which an AI model completely fails to produce a plausible output. This won’t be the last time we see them, either.

How could AI video models improve and avoid jabberwockies?

In our coverage of Gen-3 Alpha, we called the threshold at which an AI model reaches a level of useful generalization the “illusion of understanding.” It’s the point where training data and training time reach a critical mass that produces results good enough to generalize across enough novel prompts.

One of the key reasons language models like OpenAI’s GPT-4 impressed users was that they finally reached a size where they had absorbed enough information to give the appearance of genuinely understanding the world. With video synthesis, achieving this same apparent level of “understanding” will require not just huge amounts of well-labeled training data but also the computational power to process it effectively.

AI boosters hope that these current models represent one of the key steps on the way to something like truly general intelligence (often called AGI) in text. In AI video, the equivalent goal is what OpenAI and Runway researchers call “world simulators” or “world models,” which would somehow encode enough of the physical rules of the world to produce any realistic result.

Judging by the morphing alien shoggoth gymnast, that may still be a ways off. Still, it’s early days in AI video generation, and judging by how quickly AI image-synthesis models like Midjourney progressed from crude abstract shapes to coherent imagery, video synthesis will likely follow a similar trajectory over time. Until then, enjoy the AI-generated jabberwocky madness.

Benj Edwards is Ars Technica’s Senior AI Reporter and founded the site’s dedicated AI beat in 2022. He’s also a tech historian with almost two decades of experience. In his free time, he writes and records music, collects vintage computers, and enjoys nature. He lives in Raleigh, NC.
