Experiencing some technical problems

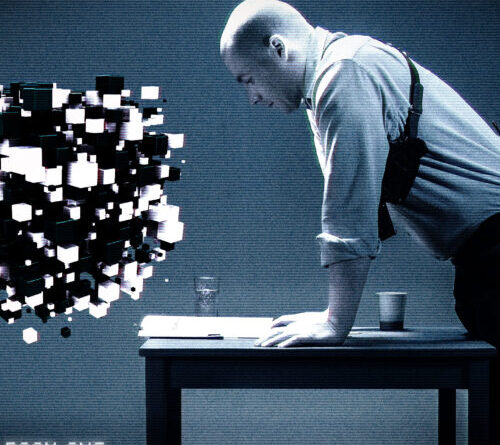

How do you get an AI model to confess what’s inside?

Credit: Aurich Lawson|Getty Images

Since ChatGPT became an instant hit roughly two years ago, tech companies around the world have rushed to release AI products while the public is still in awe of AI’s seemingly radical potential to enhance their daily lives.

At the same time, governments around the world have warned that it can be hard to predict how rapidly popularizing AI can harm society. Novel uses could suddenly debut and displace workers, fuel disinformation, stifle competition, or threaten national security, and those are just some of the obvious potential harms.

While governments scramble to establish systems to detect harmful applications, ideally before AI models are deployed, some of the earliest lawsuits over ChatGPT show just how hard it is for the public to crack open an AI model and find evidence of harms once a model is released into the wild. That task is seemingly only made harder by an increasingly thirsty AI industry intent on shielding models from competitors to maximize profits from emerging capabilities.

The less the public knows, the seemingly harder and more expensive it is to hold companies accountable for irresponsible AI releases. This fall, ChatGPT-maker OpenAI was even accused of trying to profit off discovery by seeking to charge litigants retail prices to inspect AI models alleged to be causing harms.

In a lawsuit raised by The New York Times over copyright concerns, OpenAI suggested the same model inspection protocol used in a similar lawsuit raised by book authors.

Under that protocol, the NYT could hire an expert to review highly confidential OpenAI technical materials “on a secure computer in a secured room without Internet access or network access to other computers at a secure location” of OpenAI’s choosing. In this closed-off arena, the expert would have limited time and limited queries to try to get the AI model to confess what’s inside.

The NYT seemingly had few concerns about the actual inspection process but balked at OpenAI’s intended protocol capping the number of queries their expert could make through an application programming interface (API) at $15,000 worth of retail credits. Once litigants hit that cap, OpenAI suggested that the parties split the costs of remaining queries, charging the NYT and co-plaintiffs half-retail prices to finish the rest of their discovery.

In September, the NYT told the court that the parties had reached an “impasse” over this protocol, alleging that “OpenAI seeks to hide its infringement by professing an undue—yet unquantified—‘expense.’” According to the NYT, plaintiffs would need $800,000 worth of retail credits to seek the evidence they need to prove their case, but there’s allegedly no way it would actually cost OpenAI that much.

“OpenAI has refused to state what its actual costs would be, and instead improperly focuses on what it charges its customers for retail services as part of its (for profit) business,” the NYT claimed in a court filing.

In its defense, OpenAI has said that setting the initial cap is necessary to reduce the burden on OpenAI and prevent an NYT fishing expedition. The ChatGPT maker alleged that plaintiffs “are requesting hundreds of thousands of dollars of credits to run an arbitrary and unsubstantiated—and likely unnecessary—number of searches on OpenAI’s models, all at OpenAI’s expense.”

How this court debate resolves could have implications for future cases where the public seeks to inspect models causing alleged harms. It seems likely that if a court agrees OpenAI can charge retail prices for model inspection, it could potentially deter lawsuits from any plaintiffs who can’t afford to pay an AI expert or commercial prices for model inspection.

Lucas Hansen, co-founder of CivAI, a company that seeks to enhance public awareness of what AI can actually do, told Ars that probably a lot of inspection can be done on public models. But often, public models are fine-tuned, perhaps censoring certain queries and making it harder to find information that a model was trained on, which is the goal of NYT’s suit. By gaining API access to original models instead, litigants could have an easier time finding evidence to prove alleged harms.

It’s unclear exactly what it costs OpenAI to provide that level of access. Hansen told Ars that the cost of training and experimenting with models “dwarfs” the cost of running models to provide full capability solutions. Developers have noted in forums that costs of API queries quickly add up, with one claiming OpenAI’s pricing is “killing the motivation to work with the APIs.”

The NYT’s lawyers and OpenAI declined to comment on the ongoing litigation.

US hurdles for AI safety testing

Of course, OpenAI is not the only AI company facing lawsuits over popular products. Artists have sued makers of image generators for allegedly threatening their livelihoods, and several chatbots have been accused of defamation. Other emerging harms include very visible examples, like explicit AI deepfakes harming everyone from celebrities like Taylor Swift to middle schoolers, as well as underreported harms, like allegedly biased HR software.

A recent Gallup survey suggests that Americans are more trusting of AI than ever but still twice as likely to believe AI does “more harm than good” than that the benefits outweigh the harms. Hansen’s CivAI creates demos and interactive software for education campaigns helping the public to understand firsthand the real dangers of AI. He told Ars that while it’s hard for outsiders to trust a study from “some random organization doing really technical work” to expose harms, CivAI provides a controlled way for people to see for themselves how AI systems can be misused.

“It’s easier for people to trust the results, because they can do it themselves,” Hansen told Ars.

Hansen also advises lawmakers grappling with AI risks. In February, CivAI joined the Artificial Intelligence Safety Institute Consortium, a group including Fortune 500 companies, government agencies, nonprofits, and academic research teams that help to advise the US AI Safety Institute (AISI). But so far, Hansen said, CivAI has not been very active in that consortium beyond scheduling a talk to share demos.

The AISI is supposed to protect the US from risky AI models by conducting safety testing to detect harms before models are deployed. Testing should “address risks to human rights, civil rights, and civil liberties, such as those related to privacy, discrimination and bias, freedom of expression, and the safety of individuals and groups,” President Joe Biden said in a national security memo last month, urging that safety testing was critical to support unrivaled AI innovation.

“For the United States to benefit maximally from AI, Americans must know when they can trust systems to perform safely and reliably,” Biden said.

The AISI’s safety testing is voluntary, and while companies like OpenAI and Anthropic have agreed to the voluntary testing, not every company has. Hansen is worried that AISI is under-resourced and under-budgeted to achieve its broad goals of safeguarding America from untold AI harms.

“The AI Safety Institute predicted that they’ll need about $50 million in funding, and that was before the National Security memo, and it does not seem like they’re going to be getting that at all,” Hansen told Ars.

Biden had $50 million budgeted for AISI in 2025, but Donald Trump has threatened to dismantle Biden’s AI safety plan upon taking office.

The AISI was probably never going to be funded well enough to detect and deter all AI harms, but with its future unclear, even the limited safety testing the US had planned could be stalled at a time when the AI industry continues moving full speed ahead.

That could largely leave the public at the mercy of AI companies’ internal safety testing. As frontier models from big companies will likely remain under society’s microscope, OpenAI has promised to increase investments in safety testing and help establish industry-leading safety standards.

According to OpenAI, that effort includes making models safer over time and less prone to producing harmful outputs, even with jailbreaks. But OpenAI has a lot of work to do in that area, as Hansen told Ars that he has a “standard jailbreak” for OpenAI’s most popular release, ChatGPT, “that almost always works” to produce harmful outputs.

The AISI did not respond to Ars’ request to comment.

NYT “nowhere near done” inspecting OpenAI models

For the public, who often become guinea pigs when AI acts unpredictably, risks remain, as the NYT case suggests that the costs of fighting AI companies could go up while technical hiccups could delay resolutions. Last week, an OpenAI filing showed that NYT’s attempts to inspect pre-training data in a “really, really securely managed environment” like the one recommended for model inspection were allegedly continuously disrupted.

“The process has not gone smoothly, and they are running into a variety of obstacles to, and obstructions of, their review,” the court filing describing NYT’s position said. “These severe and repeated technical issues have made it impossible to effectively and efficiently search across OpenAI’s training datasets in order to ascertain the full scope of OpenAI’s infringement. In the first week of the inspection alone, Plaintiffs experienced nearly a dozen disruptions to the inspection environment, which resulted in many hours when News Plaintiffs had no access to the training datasets and no ability to run continuous searches.”

OpenAI was additionally accused of refusing to install software the litigants needed and randomly shutting down ongoing searches. Frustrated after more than 27 days of inspecting data and getting “nowhere near done,” the NYT keeps pushing the court to order OpenAI to provide the data instead. In response, OpenAI said plaintiffs’ concerns were either “resolved” or discussions remained “ongoing,” suggesting there was no need for the court to intervene.

So far, the NYT claims that it has found millions of plaintiffs’ works in the ChatGPT pre-training data but has been unable to confirm the full extent of the alleged infringement due to the technical difficulties. Costs keep accruing in every direction.

“While News Plaintiffs continue to bear the burden and expense of examining the training datasets, their requests with respect to the inspection environment would be significantly reduced if OpenAI admitted that they trained their models on all, or the vast majority, of News Plaintiffs’ copyrighted content,” the court filing said.

Ashley is a senior policy reporter for Ars Technica, dedicated to tracking social impacts of emerging policies and new technologies. She is a Chicago-based journalist with 20 years of experience.
