What does “meaning” mean?

A partial defense of (a few of) AI Overview’s fanciful idiomatic explanations.

Mind … blown

Credit: Getty Images

Recently, the phrase “You can’t lick a badger twice” unexpectedly went viral on social media. The nonsense sentence, which was likely never uttered by a human before then, had become the poster child for the newly discovered way Google search’s AI Overviews makes up plausible-sounding explanations for made-up idioms (though the concept seems to predate that specific viral post by at least a few days).

Google users quickly discovered that typing any concocted phrase into the search bar with the word “meaning” attached at the end would generate an AI Overview with a purported explanation of its idiomatic meaning. Even the most nonsensical attempts at new proverbs resulted in a confident explanation from Google’s AI Overview, created right there on the spot.

In the wake of the “lick a badger” post, countless users flocked to social media to share Google’s AI interpretations of their own made-up idioms, often expressing horror or disbelief at Google’s take on their nonsense. Those posts often highlight the overconfident way the AI Overview frames its idiomatic explanations and occasional problems with the model confabulating sources that don’t exist.

After reading through dozens of publicly shared examples of Google’s explanations for fake idioms, and generating a few of my own, I’ve come away somewhat impressed with the model’s almost poetic attempts to glean meaning from gibberish and make sense out of the senseless.

Speak to me like a kid

Let’s try a thought experiment: Say a child asked you what the phrase “you can’t lick a badger twice” means. You’d probably say you’ve never heard that particular phrase or ask the child where they heard it. You might say that you’re not familiar with that phrase or that it doesn’t really make sense without more context.

Someone on Threads noticed you can type any random sentence into Google, then add “meaning” afterwards, and you’ll get an AI explanation of a famous idiom or phrase you just made up. Here is mine

[image or embed]

— Greg Jenner (@gregjenner.bsky.social) April 23, 2025 at 6:15 AM

Let’s say the child persisted and really wanted an explanation for what the phrase means. You’d do your best to generate a plausible-sounding answer. You’d search your memory for possible connotations for the word “lick” and/or symbolic meaning for the noble badger to force the idiom into some semblance of sense. You’d reach back to other similar idioms you know to try to fit this new, unfamiliar phrase into a wider pattern (anyone who has played the excellent board game Wise and Otherwise might be familiar with the process).

Google’s AI Overview doesn’t go through exactly that kind of human thought process when faced with a similar question about the same saying. But in its own way, the large language model also does its best to generate a plausible-sounding response to an unreasonable request.

As seen in Greg Jenner’s viral Bluesky post, Google’s AI Overview suggests that “you can’t lick a badger twice” means that “you can’t trick or deceive someone a second time after they’ve been tricked once. It’s a warning that if someone has already been deceived, they are unlikely to fall for the same trick again.” As an attempt to derive meaning from a meaningless phrase (which was, after all, the user’s request), that’s not half bad. Faced with a phrase that has no inherent meaning, the AI Overview still makes a good-faith effort to answer the user’s request and draw some plausible explanation out of troll-worthy nonsense.

Contrary to the computer science truism of “garbage in, garbage out,” Google here is taking in some garbage and spitting out … well, a workable interpretation of garbage, at the very least.

Google’s AI Overview even goes into more detail explaining its thought process. “Lick” here means to “trick or deceive” someone, it says, a bit of a stretch from the dictionary definition of lick as “comprehensively defeat,” but probably close enough for an idiom (and a plausible iteration of the idiom, “Fool me once shame on you, fool me twice, shame on me…”). Google also explains that the badger part of the phrase “likely originates from the historical sport of badger baiting,” a practice I was sure Google was hallucinating until I looked it up and found it was real.

It took me 15 seconds to make up this saying, but now I think it kind of works!

Credit: Kyle Orland/ Google


I found plenty of other examples where Google’s AI derived more meaning than the original requester’s gibberish probably deserved. Google interprets the phrase “dream makes the steam” as an almost poetic statement about imagination powering innovation. The line “you can’t humble a tortoise” gets interpreted as a statement about the difficulty of intimidating “someone with a strong, steady, unwavering character (like a tortoise).”

Google also often finds connections that the original nonsense idiom creators probably didn’t intend. Google could link the made-up idiom “A deft cat always rings the bell” to the real concept of belling the cat. And in attempting to interpret the nonsense phrase “two cats are better than grapes,” the AI Overview correctly notes that grapes can be potentially toxic to cats.

Teeming with self-confidence

Even when Google’s AI Overview works hard to make the best of a bad prompt, I can still understand why the responses rub a lot of users the wrong way. A lot of the problem, I think, has to do with the LLM’s unearned confident tone, which pretends that any made-up idiom is a common saying with a well-established and authoritative meaning.

Rather than framing its responses as a “best guess” at an unknown phrase (as a human might when responding to a child in the example above), Google generally provides the user with a single, authoritative explanation for what an idiom means, full stop. Even with the occasional use of couching words such as “likely,” “probably,” or “suggests,” the AI Overview comes off as unnervingly sure of the accepted meaning for some nonsense the user made up five seconds ago.

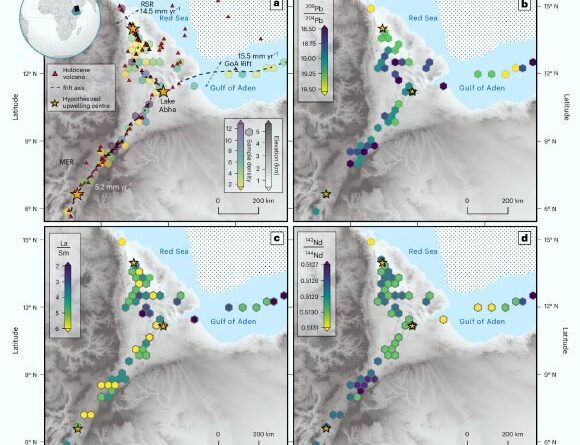

If Google’s AI Overviews always showed this much self-doubt, we’d be getting somewhere.

Credit: Google/ Kyle Orland


I was able to find one exception to this in my testing. When I asked Google the meaning of “when you see a tortoise, spin in a circle,” Google reasonably told me that the phrase “doesn’t have a widely recognized, specific meaning” and that it’s “not a standard expression with a clear, universal meaning.” With that context, Google then offered suggestions for what the phrase “seems to” mean and mentioned Japanese nursery rhymes that it “may be connected” to, before concluding that it is “open to interpretation.”

Those qualifiers go a long way toward properly contextualizing the guesswork Google’s AI Overview is actually performing here. And if Google provided that kind of context in every AI summary explanation of a made-up phrase, I don’t think users would be quite as upset.

LLMs like this have trouble knowing what they don’t know, meaning moments of self-doubt like the tortoise interpretation here tend to be few and far between. It’s not like Google’s language model has some master list of idioms in its neural network that it can consult to determine what is and isn’t a “standard expression” that it can be confident about. Usually, it’s just projecting a self-assured tone while struggling to force the user’s gibberish into meaning.

Zeus disguised himself as what?

The worst examples of Google’s idiomatic AI guesswork are ones where the LLM slips past plausible interpretations and into sheer hallucination of completely fictional sources. The phrase “a dog never dances before sunset” did not appear in the film Before Sunrise, no matter what Google says. “There are always two suns on Tuesday” does not appear in The Hitchhiker’s Guide to the Galaxy film despite Google’s insistence.

Literally in the one I tried.

[image or embed]

— Sarah Vaughan (@madamefelicie.bsky.social) April 23, 2025 at 7:52 AM

There’s also no indication that the made-up phrase “Welsh men jump the rabbit” originated on the Welsh island of Portland, or that “peanut butter platform heels” refers to a scientific experiment creating diamonds from the sticky snack. We’re also unaware of any Greek myth where Zeus disguises himself as a golden shower to explain the phrase “beware what glitters in a golden shower.” (Update: As many commenters have pointed out, this last one is actually a reference to the Greek myth of Danaë and the shower of gold, showing Google’s AI knows more about this potential meaning than I do.)

The fact that Google’s AI Overview presents these completely made-up sources with the same self-assurance as its abstract interpretations is a big part of the problem here. It’s also a persistent problem for LLMs that tend to make up news sources and cite fake legal cases regularly. As usual, one should be very wary when trusting anything an LLM presents as an objective fact.

When it comes to the more artistic and symbolic interpretation of nonsense phrases, though, I think Google’s AI Overviews have gotten something of a bad rap recently. Presented with the difficult task of explaining nigh-unexplainable phrases, the model does its best, generating interpretations that can border on the profound at times. While the authoritative tone of those responses can sometimes be annoying or actively misleading, it’s at least amusing to see the model’s best attempts to deal with our meaningless phrases.

Kyle Orland has been the Senior Gaming Editor at Ars Technica since 2012, writing primarily about the business, tech, and culture behind video games. He has journalism and computer science degrees from University of Maryland. He once wrote a whole book about Minesweeper.
